Lamini

Using Supermicro GPU servers with AMD Instinct™ MI300X accelerators, Lamini offers high-speed LLM tuning.

Free up space in your data center and deliver leadership performance for AI workloads with Supermicro rack-ready systems, powered by AMD.

As the pace—and urgency—of AI innovation accelerates, Supermicro and AMD are working together to help you stay ahead. Deploy Supermicro rack-ready servers with AMD EPYC™ processors and AMD Instinct™ accelerators to unleash performance, cost-effectiveness, and scalability for the massive AI workloads of today and tomorrow.

Together, Supermicro and AMD offer an open, robust ecosystem and the breakthrough power needed for the AI lifecycle—all with leadership performance and efficiency to meet your unique needs.

AMD EPYC processors suit smaller AI models and workloads where proximity to data matters, while AMD Instinct accelerators shine for large models and dedicated AI deployments that demand very high performance. Compared with the NVIDIA HGX H100, the AMD Instinct Platform can deliver up to 1.6x higher throughput when running inference on LLMs such as BLOOM 176B.¹

Discover how Supermicro H13 servers, powered by AMD Instinct MI300X accelerators, streamline deployments at scale for the largest AI models.

Explore how Supermicro H13 systems, powered by AMD Instinct MI300A APUs, combine AMD Instinct accelerators and AMD EPYC processors with shared memory to supercharge your AI initiatives.

Note: Test results for white box system; please refer to www.supermicro.com/aplus for Supermicro product/platform information.

To make the most of IT budgets, many data centers are already pushing the limits of available space, power, or both. Supermicro servers with AMD EPYC processors deliver the leadership performance and efficiency needed to consolidate core workloads, freeing up space, power, and cooling for new business-critical AI workloads.

Replace 100 old 2P Intel® Xeon® Platinum 8280 CPU-based servers with 14 new AMD EPYC 9965 CPU-based servers.²

Up to 87% fewer servers

Up to 71% less power

Up to 67% lower 3-yr TCO

Help lower cost and TCO: AMD EPYC 9965 CPU-based servers vs. Intel® Xeon® Platinum 8592+ CPU-based servers²

Up to 63% fewer servers

Up to 45% less power

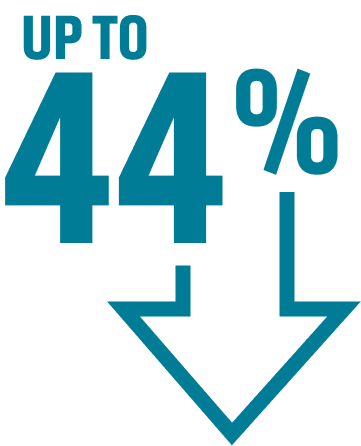

Up to 44% lower 3-yr TCO

Learn how AI is driving demand for richly configured servers—and why IDC says it’s imperative to adopt a modern technology stack now.

See the results for yourself: request a trial of Supermicro H14 servers powered by AMD EPYC processors and test-drive your own AI workloads.