AMD Quark Quantizer for Efficient AI Model Deployment

Dec 10, 2024

Authors: Dwith Chenna, Uday Das, AI Group, AMD.

Introduction

In the realm of Deep Learning and Generative AI, AI models are widely used for tasks like image classification, object detection using CNN models, as well as generating text or images with large language models (LLMs) and diffusion models. Deploying these models efficiently on both GPUs and edge devices presents challenges due to their computational and memory requirements. Quantization provides a way to reduce model size and improve inference speed, making it essential for handling these requirements across different hardware platforms. This blog introduces the new AMD Quark Quantizer and highlights some of the features it offers, providing a foundation for exploring various other quantization techniques and mechanisms supported by (AMD) Quark.

Model Quantization

Quantization involves converting a model's weights and activations from floating-point precision to lower bit-width integers. This process reduces the model's memory footprint and computational requirements, making it suitable for deployment on resource-constrained devices.

Model quantization can be applied post-training, allowing existing models to be optimized without retraining. This process involves mapping high-precision weights/activations to a lower precision format, such as FP16/INT8, while maintaining model accuracy.

Float to Float Conversion

Pre-trained AI models are often converted to float16 or bf16, as some hardware, such as iGPUs and NPUs, and their compilers support these datatypes for optimized performance. In float16, both the exponent and mantissa are reduced to fewer bits by scaling and truncation, which lowers precision but allows for more efficient computation and memory usage. On the other hand, bf16 focuses on maintaining a wider dynamic range by keeping the 8-bit exponent of float32, while reducing the mantissa to 7 bits, providing a balance between range and precision. These formats enable AI models to run efficiently on hardware with limited precision support.

Float to Int Conversion

In float-to-int quantization, real numbers (r) are mapped to quantized integers (q) based on a linear relationship defined by scale (S) and zero-point (Z) parameters. These parameters are central to the quantization process, where scale (S) typically represents a floating-point value in fixed-point representation, and zero-point (Z) aligns with the quantized data type.

For 8-bit quantization, q is an 8-bit integer that captures the quantized representation of the original value r. Special attention is given to ensure that a real zero value in r maps to zero in q, maintaining accuracy at the zero-crossing.

The quantized and dequantized values are computed as:

q = round (r/S + Z) (1)

r = S (q- Z) (2)

Scale and zero-point values can be derived based on the distribution of r:

S = (r max - r min)/(q max - q min) (3)

Z = round (q max - r max/S) (4)

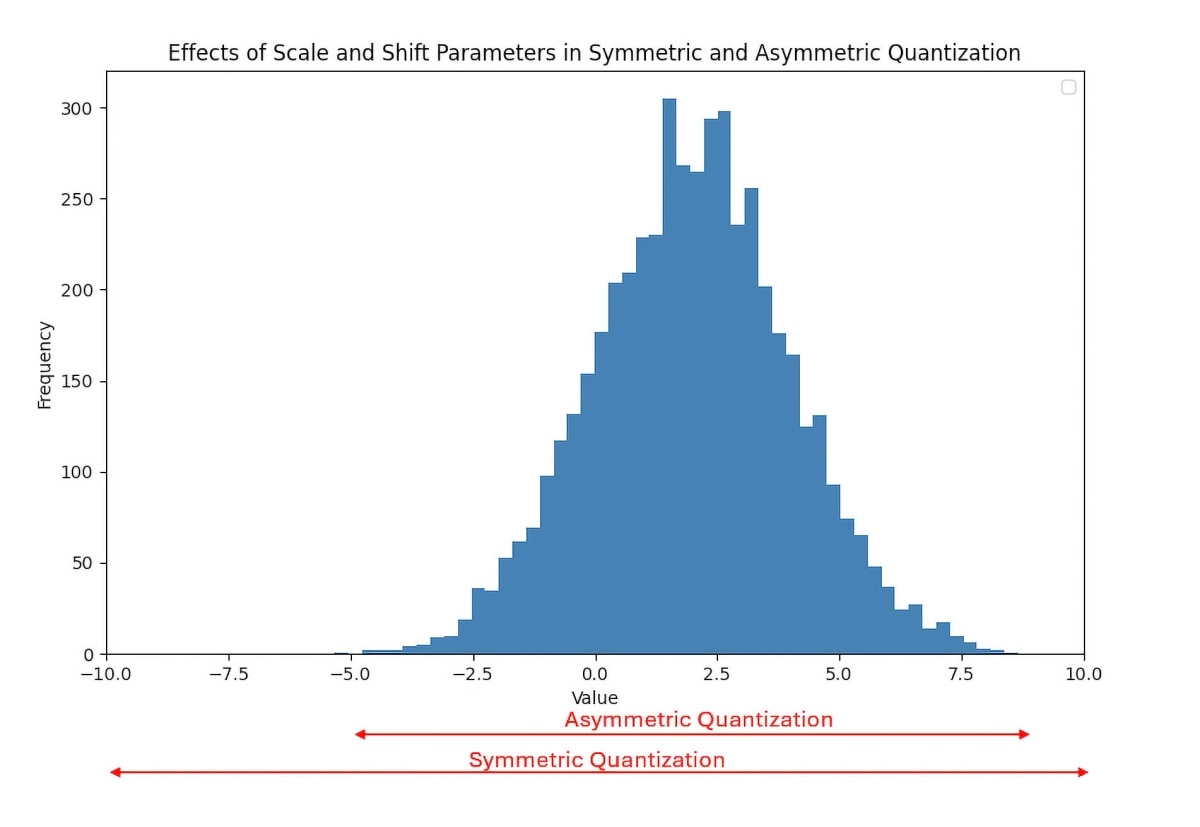

The choice of quantization parameters requires a trade-off between precision and dynamic range, with the choice driven by the target model and application. Symmetric and asymmetric quantization methods affect both scale and shift in this process, as illustrated in the below figure, with each approach impacting the resulting model’s accuracy and performance differently.

Quantization Challenges

Quantization introduces several challenges, primarily revolving around the potential drop in model accuracy. Reducing precision to lower bit-width representations can lead to significant information loss, affecting the model's ability to make accurate predictions. This accuracy loss is a critical concern, especially for applications requiring high precision.

Choosing the right quantization parameters—such as bit-width, scaling factors, and the decision between per-channel or per-tensor quantization—adds layers of complexity to the design process. Each choice uniquely impacts both performance and accuracy, careful consideration and experimentation is needed to find the optimal balance.

Moreover, effective quantization requires robust hardware and software support to manage operations with reduced precision. Ensuring compatibility and performance across diverse hardware environments remains a significant challenge, requiring collaboration between software developers and hardware manufacturers to standardize and enhance support for quantization techniques.

AMD Quark Quantizer

(AMD) Quark provides developers with a flexible, efficient, and easy-to-use toolkit featuring a rich API set for two popular deployment frameworks: PyTorch and ONNX. The APIs are designed to be beginner-friendly, offering default configurations that make it easy to get started, while also providing the flexibility to fine-tune parameters for more complex models or deployment scenarios.

The (AMD) Quark Quantization API is a framework for quantizing AI models, providing a variety of schemes and techniques to optimize models for diverse hardware targets. Table 1 summarizes the features of the (AMD) Quark Quantization API, including supported frameworks, model quantization techniques, data types, quantization schemes, advanced quantization methods, and compatible platforms. This extensive support enables (AMD) Quark to meet the diverse needs of users for model quantization and deployment.

Features |

(AMD) Quark |

Frameworks |

PyTorch, ONNX, Hugging Face etc. |

Model Quantization |

Quantization Aware Training (QAT), |

Data types |

FP16, BFP16, FP8 variants (MX6, MX9), FP4 |

Quantization Scheme |

Weights: Asymmetric/Symmetric, Activations: Asymmetric/Symmetric |

Advanced Quantization |

Fast Finetuning, Mixed Precision, Cross Layer Equalization, AdaQuant, GPTQ, AWQ, SmoothQuant, |

Platform Support |

CPU, GPU, NPU |

Table 1. Summarizes the quantization features of the (AMD) Quark API [Link]

Quantization Scheme

(AMD) Quark supports multiple quantization schemes, allowing developers to choose the best approach for their specific use case. These schemes ensure that the quantized model performs efficiently on the target hardware.

Precision: Effectiveness of quantization is in its ability to represent the learned parameters of the AI model using lower precision without much loss in accuracy. This allows users to select different levels of compression depending on the application and underlying platform. (AMD) Quark allows quantization of variety of models and cases through support for a wide range of data types including uint32, int32, float16, bfloat16, int16, uint16, int8, uint8 and bfp.

Symmetric/Asymmetric: (AMD) Quark offers flexibility to support both symmetric and asymmetric quantization strategies, enabling efficient quantization based on the distribution of values. Symmetric quantization is optimal when the distribution is centered around zero (i.e., zero point = 0), as it ensures balanced representation of positive and negative values. In contrast, asymmetric quantization introduces a non-zero offset, allowing for better representation and more efficient use of bit precision when the distribution is not centered around zero. In practice, (AMD) Quark can be used for symmetric quantization for weights, which often exhibit symmetric distributions, and asymmetric quantization for activations, which tend to be asymmetric, providing robust support for a variety of AI models.

(AMD) Quark also supports various granularity levels of quantization, such as per-tensor, per-channel, and per-group quantization, depending on how quantization parameters (scale, offset) are applied. Per-tensor quantization uses a single set of parameters for the entire tensor, while per-channel and per-group quantization offer finer granularity by applying different parameters to each channel or group of values. Moving from per-tensor to per-group quantization increases precision, reducing quantization error, but also introduces performance overheads due to the added complexity of handling a larger number of quantization parameters. (AMD) Quark provides the flexibility to choose the most appropriate granularity level, balancing precision and performance for different model requirements.

Calibration Methods

Calibration dataset is essential for computing the quantization parameters of activations, ensuring it accurately represents the input fed to the AI model. (AMD) Quark provides several calibration methods—MinMax, Percentile, Entropy, MSE, and NonOverflow—to precisely determine the necessary quantization parameters, such as scale and offset. These methods can be tailored to specific applications, effectively managing outliers that might otherwise degrade the quantization process.

MinMax: By default, (AMD) Quark uses MinMax calibration, which involves determining the minimum and maximum values of the activations to set the quantization range. This method is straightforward and effective when the data distribution is uniform. However, it can be sensitive to outliers, which may lead to suboptimal quantization if not handled properly.

Percentile: Percentile calibration addresses the outlier issue by using a specified percentile of the data to define the quantization range. By ignoring extreme values, this method provides a more robust quantization range for datasets with outliers. It is particularly useful when the data distribution is skewed or contains anomalies.

Entropy: Entropy calibration uses the concept of information entropy to determine the optimal quantization parameters. By minimizing the information loss during quantization, this method is effective for preserving the model's accuracy. It is well-suited for complex models where maintaining information fidelity is critical.

MSE: MSE calibration focuses on minimizing the mean squared error between the original and quantized activations. This method is ideal for applications where precision is paramount, as it directly optimizes for the least distortion in the quantization process. It is particularly beneficial for models where small errors can lead to significant performance degradation.

NonOverflow: The NonOverflow calibration method calculates power-of-two quantization parameters for each tensor, ensuring that the minimum and maximum values do not overflow. This method provides a safe and efficient quantization range, particularly useful in scenarios where overflow could cause critical errors.

Advanced Quantization Techniques

(AMD) Quark provides advanced quantization techniques to ensure high model performance and recover any lost accuracy during precision reduction. While basic quantization configurations work well for many models, more sophisticated techniques are often necessary for advanced and optimized models. Here, we explore a few of these advanced methods:

Cross Layer Equalization

(AMD) Quark incorporates Cross-Layer Equalization (CLE) to balance weight distributions across layers, thereby reducing quantization errors. This technique involves scaling weights and biases to ensure uniform distribution, which helps maintain model accuracy while enabling efficient quantization. CLE is especially useful for deep networks where imbalanced weight distributions can lead to significant performance drops.

Mixed Precision

(AMD) Quark supports mixed precision quantization, which combines both 8-bit and 16-bit formats to optimize model performance and efficiency. By strategically applying lower precision to certain operations, it reduces memory usage and accelerates computation without significantly impacting accuracy. This approach is particularly beneficial for models with varying sensitivity across layers, allowing for a balanced trade-off between speed and precision.

Fast Finetuning

Fast finetuning with (AMD) Quark involves adjusting a pre-trained model to enhance its accuracy post-quantization. This technique helps recover any accuracy lost during the quantization process, making the model more robust and suitable for deployment. By fine-tuning the model with a small, representative dataset, it adapts to the quantized environment, improving overall performance.

The advanced quantization techniques described above are a subset of many features offered by the (AMD) Quark API. Selecting the appropriate techniques or quantization schemes depends on the specific model and application requirements, (AMD) Quark API provides various options for model quantization, enabling users to fine-tune parameters to suit their specific applications. As demonstrated in Table 2, these techniques help recover the accuracy typically lost during quantization, allowing models like ResNet50 and MobileNetV2 to maintain accuracy loss within 1.0% of the float-point model.

Model |

Float32 |

Default Quant Config (XINT8) |

Default + CLE |

Default + Mixed Precision |

Default + Fast Finetuning |

ResNet50 (Acc@Top1/ |

80.0% / 96.1% |

77.3%/94.9% |

77.7%/95.7% |

78.9%/95.4% |

79.3%/96.2% |

(Acc@Top1/ |

71.3% / 90.6% |

64.0%/86.5%

|

62.7%/85.4% |

68.6%/88.6% |

70.5%/90.3%

|

Table 2. Summary table showing accuracy metrics on ImageNet dataset for ResNet50/MobileNetV2 models [Link]

Advanced LLM Quantization

While a detailed discussion on LLM-specific quantization is beyond the scope of this blog, it's important to mention that with the rise of LLMs and Generative AI, effective quantization of these models has become increasingly crucial. (AMD) Quark offers low-bit precision quantization techniques, incorporating cutting-edge algorithms such as SmoothQuant, AWQ (Activation Aware Quantization), and GPTQ. Additionally, (AMD) Quark supports KV Cache quantization, an emerging area in LLM application development, especially for applications requiring large context lengths, such as RAG-based systems or conversational chat agents.

Conclusion

In this blog, we’ve explored the new and powerful AMD Quark Quantizer and its foundational features that will help you dive deeper into AMD Quark Quantizer. We encourage you to explore (AMD) Quark and discover its full potential.

To get started and access additional resources

- (AMD) Quark Documentation: https://quark.docs.amd.com/latest/

- End-to-End (AMD) Quark Tutorial on Ryzen AI PC: https://github.com/amd/RyzenAI-SW/tree/main/tutorial/quark_quantization

- Advanced LLM Quantization: https://quark.docs.amd.com/latest/pytorch/example_quark_torch_llm_ptq.html