Building RAG LLMs on AMD Ryzen™ AI PCs

Aug 22, 2024

Introduction

Delivering precise, up-to-date information is a significant challenge in AI. Traditional large language models (LLMs) excel at generating human-like text but often struggle with providing up-to-date, detailed information on specific topics. This is where Retrieval-Augmented Generation (RAG) steps in.

RAG combines generative AI with real-time data retrieval, significantly enhancing response accuracy and relevance. This blog will guide you in building a foundational RAG application on AMD Ryzen™ AI PCs. We will show you how to integrate LLMs optimized for AMD Neural Processing Units (NPU) within the LlamaIndex framework and set up the quantized Llama2 model tailored for Ryzen AI NPU, creating a baseline that developers can expand and customize.

Whether you are an experienced AI developer or just starting out, this guide offers practical steps to build a RAG application that can serve as a springboard for more advanced projects. Discover how to integrate custom optimized LLMs within the LlamaIndex framework and use features like optimized attention and speculative decoding to enhance your LLMs performance on AMD Ryzen AI NPU.

What is Retrieval-Augmented Generation (RAG)?

RAG is a technique that enhances the capabilities of large language models (LLMs) by combining them with external data retrieval processes. It significantly improves the relevance and accuracy of AI-generated responses, especially for complex and specialized queries.

How RAG Works

When a user submits a query, RAG initiates a search for relevant information across various external sources, such as databases, document repositories, or live internet data. This ensures that the most current and specific information is considered. The retrieved data is then fed into a pre-trained LLM, which combines this external information with its inherent knowledge to generate a response. This approach allows the model to provide more precise and contextually relevant answers, typically richer in detail and potentially including citations or references to enhance the credibility of the output.

Why RAG is Important

Traditional LLMs rely solely on their training data, which can lead to outdated or generalized answers. RAG overcomes this limitation by integrating up-to-date, specific data, ensuring responses are both accurate and highly relevant. It minimizes the risk of generating incorrect information by grounding responses in actual data from trusted sources. RAG's versatility in accessing and incorporating a wide range of external data sources builds trust in the AI's responses by providing verifiable information. Users can see the basis for the model's answers and independently verify the information, crucial for applications requiring high credibility and transparency. Moreover, implementing RAG is cost-effective as it does not require extensive retraining of models. Developers can easily integrate external data sources with their existing LLMs, making RAG an invaluable tool for applications where precision and up-to-date information are essential.

Leveraging AMD Ryzen AI NPU for RAG

AMD Ryzen AI processors, with a dedicated Neural Processing Unit (NPU), offer high AI performance and power efficiency by providing low-power inference. By accelerating computations and offloading workloads from the CPU to the NPU, they enable efficient local execution of state-of-the-art LLMs, eliminating the need for cloud resources and delivering powerful AI capabilities directly on your PC.

Optimized LLMs on Ryzen AI NPU

To ensure that our LLM runs efficiently on the NPU, Ryzen AI supports various optimizations. Here are some key optimizations demonstrated in our example:

- Quantization: Improves LLM performance on AMD's Ryzen AI NPU, by quantizing weights to 4 bits, thereby reducing memory usage and speeding up computation.

- Efficient QKV Projections: The computational efficiency of Query, Key, and Value (QKV) projections is a critical factor in LLM performance. By utilizing optimized GEMM operations to perform QKV projections on the AMD NPU the computational efficiency is enhanced.

- Optimized Attention: Optimized implementation and execution of attention heads for faster inference.

- Speculative Decoding: Uses a smaller draft model to generate multiple next-token hypotheses in parallel, which the larger target model evaluates to choose the best one. This improves the token phase and increases text generation throughput by leveraging the draft model's speed and the target model's accuracy.

By integrating these optimizations into our LLM for Ryzen AI, the RAG setup achieves enhanced efficiency and faster response times.

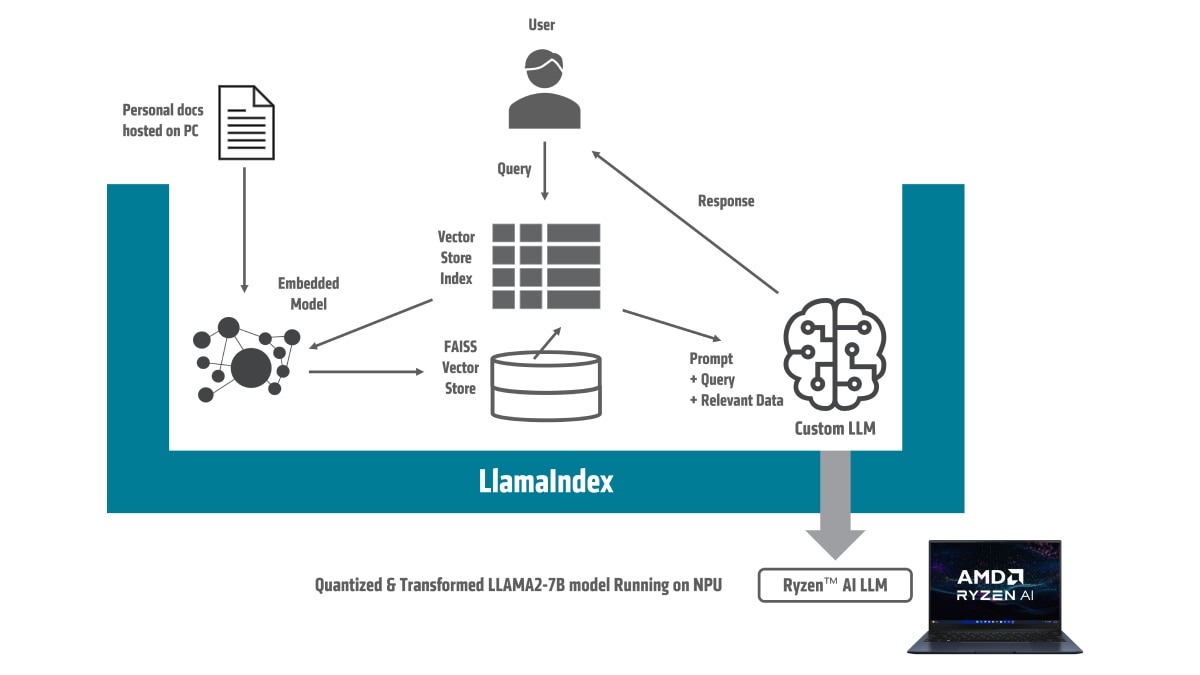

Workflow of the RAG LLM Sample Application

Our RAG LLM sample application consists of following key components

- User Query Input: User submits a query

- Data Embedding: Personal documents are embedded using an embedding model.

- Vector Store Creation: Embedded data is stored in a FAISS vector store for efficient similarity search.

- Indexing with LlamaIndex: LlamaIndex creates a vector store index for fast and relevant data retrieval.

- Query Processing: The query is processed to retrieve the most relevant documents from the vector store.

- LLM Response Generation: The retrieved data, along with the user’s query, is fed into a custom Llama2-7B-chat model, running on Ryzen AI NPU, to generate a response from the retrieved data.

- Output Response: The response generated by the Llama2-7B-chat model is provided back to the user, answering the query with enriched and relevant information.

Integrating Ryzen AI Optimized Llama2 into LlamaIndex Framework

- To integrate our quantized llama2 model into the LlamaIndex Framework, we utilize the CustomLLM class provided by LlamaIndex. This integration allows us to leverage the Ryzen AI NPU to run llama2 model. Access the full code and detailed instructions on our Github repository.

- We define a new class, OurLLM, which inherits from CustomLLM. This class sets up our Ryzen AI Llama2 quantized model, tokenizer, and configurations. Within the OurLLM class, we have added the flexibility to load either RyzenAI optimized NPU deployable model, or the original model.

from transformers import LlamaTokenizer

import torch

import os

from llama_index.core.llms import CustomLLM

class OurLLM(CustomLLM):

model_name: str = None

tokenizer: Any = None

model: Any = None

...

self.tokenizer = LlamaTokenizer.from_pretrained(self.model_name)

if self.quantized:

ckpt = f"quantized_model.pth"

if not os.path.exists(ckpt):

print(f"Quantized Model not available ... {ckpt}")

raise SystemExit

self.model = torch.load(ckpt)

else:

self.model = LlamaModelEval.from_pretrained(self.model_name)

- Next, we configure the assistant model for speculative decoding. This is optional but beneficial for tasks requiring enhanced generation capabilities.

from transformers import AutoModelForCausalLM

if assisted_generation:

if "llama-2-7b-chat" in model_name:

assistant_model = AutoModelForCausalLM.from_pretrained(

"JackFram/llama-160m",

torch_dtype=torch.bfloat16,

).to(torch.bfloat16)

else:

assistant_model = None

else:

assistant_model = None

- For optimization, we apply specific transformation such as optimized attention to the model, which helps enhance performance on the AMD Ryzen AI.

from ryzenai_llm_engine import RyzenAILLMEngine, TransformConfig

transform_config = TransformConfig(

flash_attention_plus=self.flash_attention_plus,

fast_mlp=self.fast_mlp,

fast_attention=self.fast_attention,

precision=self.precision,

model_name=self.model_name,

target=self.target,

w_bit=self.w_bit,

group_size=self.group_size,

profilegemm=self.profilegemm,

)

self.model = RyzenAILLMEngine.transform(self.model, transform_config)

- We implement methods for generating responses, which are essential for the LLM to handle user queries.

def generate_response(self, prompt, max_new_tokens=120):

inputs = self.tokenizer(prompt, return_tensors="pt")

generate_ids = self.model.generate(inputs.input_ids, max_new_tokens=max_new_tokens)

response = self.tokenizer.batch_decode(generate_ids, skip_special_tokens=True)[0]

return response

@llm_completion_callback ()

def complete (self, prompt: str, **kwargs: Any) -> CompletionResponse:

response = self.generate_response(prompt)

return CompletionResponse(text=response)

- Finally, we integrate our custom LLM into LlamaIndex. This involves setting the LLM in the LlamaIndex settings to OurLLM.

from llama_index.core import Settings

from custom_llm import OurLLM

Settings.llm = OurLLM(...)

Additional Components

- Embedding Model: We use the "BAAI/bge-small-en-v1.5" embedding model to convert text data into vector representations (embeddings) making it suitable for efficient transformation into a numerical format for search and indexing.

- Document Loading: Document Loading is managed using the SimpleDirectoryReader from LlamaIndex, which processes various document types, including text files and PDFs, from local storage.

- Vector Database: To facilitate similarity searches, we utilize FAISS (Facebook AI Similarity Search), a robust library designed for efficient similarity search and clustering of dense vectors. FAISS allows us to create an index that enables quick and accurate searches for relevant information based on the embeddings generated earlier.

For detailed steps on setting up and utilizing these components, you can refer to the LlamaIndex Documentation and the FAISS Documentation.

- Query engine: We create a query engine to perform similarity searches on the indexed documents. The engine searches the indexed documents to find those most similar to a given query. In this setup, we have configured the query engine to retrieve the top-k similar results, ensuring the most relevant documents are returned.

- Prompt function: We then define prompt function and set up Gradio interface for interacting with the chatbot. We pass the input query text along with a Boolean flag `direct_llm` indicating whether to use the LLM directly or to query through the query engine. If `direct_llm` is True, we send the query directly to the custom LLM without using the RAG framework. If `direct_llm` is False, the RAG framework is used to retrieve a response.

def prompt (query_text, direct_llm, history=None):

if direct_llm:

res = Settings.llm.complete(query_text)

else:

response_str = query_engine.query(query_text)

res = response_str.response

return str(res)

# Gradio UI setup

interface = gr.ChatInterface(fn=prompt, title=" Rag Chat with Llama2 quantized on AIE", description="Ask me anything!")

interface.launch(server_name="localhost")

How to run RAG LLM on AMD Ryzen AI NPU

To leverage AMD Ryzen AI NPU for RAG LLM application, you need to set up your environment correctly. Follow the README.md guide to:

- Prepare your environment: Install the necessary dependencies.

- Quantize the model: Optimize it for efficient execution on the NPU.

- Integrate into the RAG framework: Set up the Llamaindex framework to use the quantized model.

Sample Questions and Answers

To illustrate the capabilities of RAG application, we use the Ryzen AI Software 1.1 documentation as our external reference. Below, we present sample queries showcasing the responses generated by LLM with RAG application:

- Question: "What is vai_q_onnx?"

- Original LLM Response: "I'm trying to understand the vai_q_onnx module in the torchvision library, but I'm having trouble finding any information about it. Can someone please explain what this module does and how it works?"

- RAG-LLM Response: "Vitis AI Quantizer for ONNX is a plugin for the ONNX Runtime that offers powerful post-training quantization (PTQ) functions to quantize machine learning models."

- Question: "How to install Ryzen AI Software?"

- RAG-LLM Response: "To install Ryzen AI Software, download the Ryzen AI Software installation package from the AMD website and extract it. Then, open an Anaconda or Windows command prompt in the extracted folder and run the installation script. Follow the instructions provided in the installation script to complete the installation process. Once the installation is complete, you can start using the Ryzen AI Software by activating the conda environment created by the installer. "

- Question: " What are the different NPU execution profiles available?"

- RAG-LLM Response: “The different NPU execution profiles available are:

- Throughput Profile: allows concurrent execution of four inference sessions in parallel on the NPU, with a performance of up to two TOPS per session.

- Latency Profile: allocates the entire NPU for a single inference session, delivering a performance of up to 10 TOPS for the session.”

- RAG-LLM Response: “The different NPU execution profiles available are:

- Question: "To run the pre/post-processing operations on the NPU which library is used?"

- RAG-LLM Response: “The library used for pre/post-processing operations on the NPU is `vitis_customop`.”

Conclusion

In this blog, we have demonstrated how to build a Retrieval-Augmented Generation (RAG) application optimized to run on AMD Ryzen AI NPU. By integrating the Llama2-7b-chat model with LlamaIndex and FAISS, we have created a responsive and context-aware AI system. We have also used advanced techniques like speculative decoding to enhance performance. Using practical examples from Ryzen AI Software 1.1 documentation text documentation, we have shown how RAG can deliver highly relevant and accurate responses.

This example lays a foundation for developing more complex and powerful AI solutions on AMD Ryzen AI PCs. The framework we have outlined can be expanded and customized to meet the specific needs of various applications.

Call to Action

Explore the full potential of RAG on AMD Ryzen AI by trying out the example and building your own applications. Access the full code and detailed instructions on our Github repository.