Introducing the First AMD SLM (Small Language Model): AMD-135M Model Fuels AI Advancements

Sep 17, 2024

Introduction

The rapid development of artificial intelligence technology, especially the progress in large language models (LLMs), has attracted extensive attention and discussion. From the birth of ChatGPT to subsequent models like GPT-4 and Llama, these language models have demonstrated unprecedented capabilities in natural language processing, generation, and understanding. However, small language models are emerging as an essential counterpart in the AI model community as they offer a unique advantage for specific use cases.

Why Build Your Own Small Language Models (SLMs)

LLMs like GPT-4 and Llama 3.1 have raised the bar for performance and capability in the rapidly evolving field of AI. While LLMs are important, there is a compelling case for small language models (SLMs), as they provide a practical solution that balances performance with operational constraints. Training LLMs often requires an extensive array of high-end GPUs, and as model scale increases, the need for data grows even more rapidly. Even when a well-trained LLM is available, it is difficult to run it efficiently on a client device with very limited computing resources. These factors make it challenging for many community developers to balance the pursuit of improved model performance and accuracy against the availability of sufficient computational resources and datasets. SLMs provide an alternative for these resource-intensive training and inference scenarios, helping significantly reduce hardware costs, memory, and power consumption.

SLM AI Model Innovations

AMD is releasing its very first SLM, AMD-135M, with Speculative Decoding. The model was trained from scratch on AMD Instinct™ MI250 accelerators using 690B tokens in total, and comes in two variants: AMD-Llama-135M and AMD-Llama-135M-code. The training code, dataset, and weights for this model are open sourced so that developers can reproduce the model and help train other SLMs and LLMs. AMD is not only contributing open-source models to the AI community but is also demonstrating leadership in model performance compared to other similarly sized models, as shown in the benchmark details below. This also demonstrates the commitment of AMD to an open approach to AI, which will lead to more inclusive, ethical, and innovative technological progress, helping ensure that its benefits are more widely shared and its challenges more collaboratively addressed.

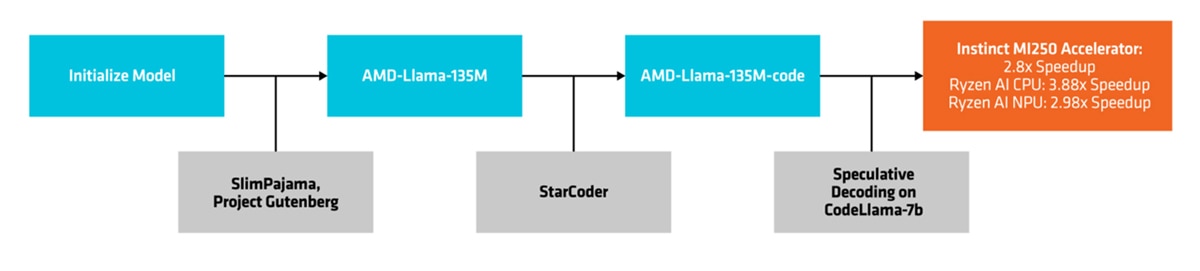

Build and Deploy the Model

Training for this model involved two major steps: first, training¹ the model AMD-Llama-135M from scratch with 670B tokens of general data; then, running additional training on 20B tokens of code data to obtain AMD-Llama-135M-code. We used AMD-Llama-135M-code as the draft model for CodeLlama-7b (a pretrained open-source transformer model for code generation developed by Meta and available on Hugging Face). The deployed performance achieved an average 2-3x speedup across the AMD hardware platforms that we tested.²,³

Figure 1: AMD-135M SLM Build and Deploy Flowchart

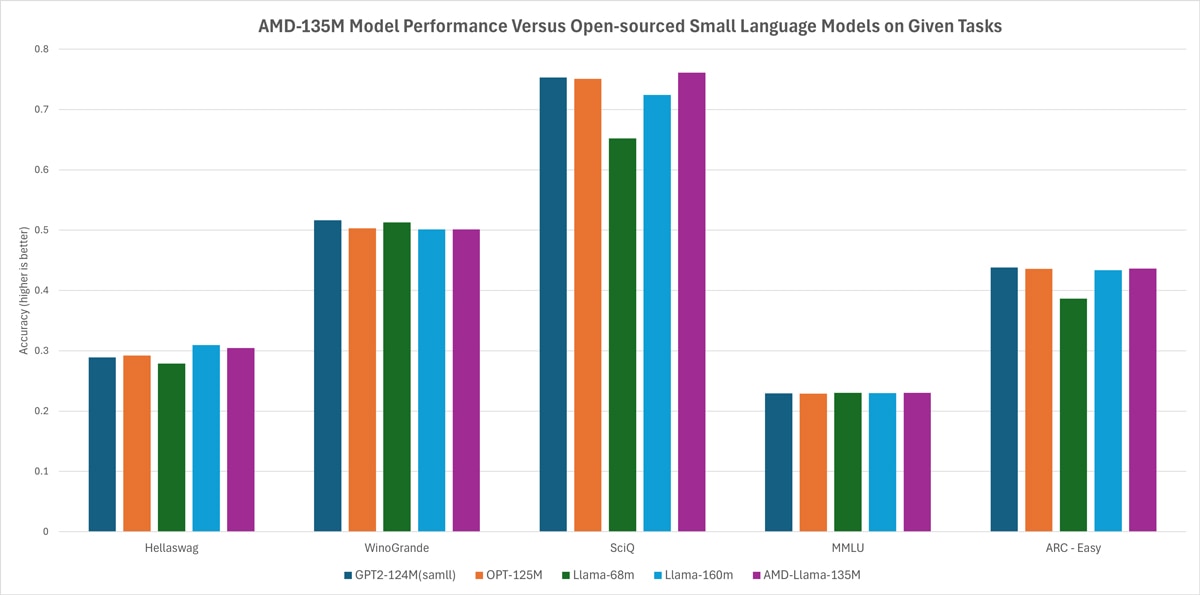

To show that the AMD-135M model has performance comparable to popular models in the market, we benchmarked it against several open-source models of similar size. The results demonstrate state-of-the-art performance for AMD-135M, which exceeds the Llama-68M and Llama-160M models on tasks like Hellaswag, SciQ, and ARC-Easy. Its performance is also on par with GPT2-124M and OPT-125M on Hellaswag, WinoGrande, SciQ, MMLU, and ARC-Easy, as shown in the chart below:

Figure 2: AMD-135M Model Performance Versus Open-sourced Small Language Models on Given Tasks⁴,⁵

Pretrain

AMD-Llama-135M: We trained the model from scratch on MI250 accelerators with 670B tokens of general data and adopted the basic model architecture and vocabulary of LLaMA-2, with detailed parameters provided in the table below. It took 6 full days to pretrain AMD-Llama-135M on four MI250 nodes, each with four MI250 accelerators (8 virtual GPU cards with 64 GB of memory each).
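As a rough sanity check on these figures, the average training throughput can be estimated from the token count, the wall-clock time, and the device count. This is a back-of-the-envelope sketch, not a reported measurement: it assumes "6 full days" means exactly 144 hours of continuous training and that all 32 virtual GPUs (4 nodes x 8) were busy the whole time. The function name `training_throughput` is made up for illustration.

```python
def training_throughput(total_tokens: float, days: float,
                        nodes: int, gpus_per_node: int) -> tuple[float, float]:
    """Return (cluster tokens/s, tokens/s per virtual GPU), averaged over the run."""
    seconds = days * 24 * 3600
    cluster_tps = total_tokens / seconds
    return cluster_tps, cluster_tps / (nodes * gpus_per_node)


# Figures from the text: 670B tokens, 6 days, 4 nodes, 8 virtual GPUs per node.
cluster_tps, per_gpu_tps = training_throughput(670e9, 6, nodes=4, gpus_per_node=8)
print(f"{cluster_tps:,.0f} tokens/s overall, {per_gpu_tps:,.0f} tokens/s per virtual GPU")
```

Under these assumptions the run averages about 1.3 million tokens per second across the cluster, or roughly 40 thousand tokens per second per virtual GPU.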

| Model Config | Value |
| --- | --- |
| Parameter size | 135M |
| Number of layers (blocks) | 12 |
| Hidden size | 768 |
| FFN intermediate size | 2048 |
| Number of heads | 12 |
| Dimension of each head | 64 |
| Tie token embedding | False |
| Context window size | 2048 |
| Vocab size | 32000 |

Figure 3: Model configuration of AMD-Llama-135M
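The configuration above can be checked against the stated 135M parameter size with a short calculation. The sketch below assumes the usual LLaMA-2 layout, which the table does not spell out: no bias terms, standard multi-head attention with four square projections (query, key, value, output), a gated FFN with three projection matrices, two RMSNorm weight vectors per block, and one final norm. The function name `llama_param_count` is hypothetical.

```python
def llama_param_count(vocab: int, hidden: int, layers: int,
                      ffn: int, tie_embeddings: bool) -> int:
    """Count parameters of a LLaMA-style decoder (assumed layout, no biases)."""
    embedding = vocab * hidden                      # input token embedding
    lm_head = 0 if tie_embeddings else vocab * hidden  # separate output projection
    attention = 4 * hidden * hidden                 # q, k, v, o projections
    mlp = 3 * hidden * ffn                          # gate, up, down projections
    norms = 2 * hidden                              # two RMSNorm weights per block
    per_layer = attention + mlp + norms
    final_norm = hidden
    return embedding + lm_head + layers * per_layer + final_norm


# Values taken from the model configuration table.
total = llama_param_count(vocab=32000, hidden=768, layers=12,
                          ffn=2048, tie_embeddings=False)
print(f"{total:,} parameters (~{total / 1e6:.1f}M)")
```

With these assumptions the count comes to 134,105,856, in line with the 135M label. Because the embeddings are not tied, the input embedding and output head together account for about 49M of those parameters.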

Pretrain Dataset: We employed the SlimPajama and Project Gutenberg datasets to pretrain the 135M model. Project Gutenberg is a library of over 70,000 free eBooks. Together, the two datasets total 670B tokens.⁶

Code Finetune

AMD-Llama-135M-code: Building on AMD-Llama-135M, we further fine-tuned it with 20B tokens of code data to create a code-specific model. It took 4 full days to fine-tune AMD-Llama-135M-code on four MI250 accelerators.

Code Dataset: We utilized the Python subset of the StarCoder dataset to fine-tune our 135M pretrained model. The StarCoder dataset comprises 783GB of code spanning 86 programming languages and includes data from GitHub Issues, Jupyter notebooks, and GitHub commits, totaling approximately 250B tokens. Our focus was specifically on the Python programming language. Consequently, we extracted the Python subset, consisting of approximately 20B tokens.

Speculative Decoding

Large language models typically use an autoregressive approach for inference. However, a major limitation of this approach is that each forward pass can only generate a single token, resulting in low memory access efficiency and affecting overall inference speed.

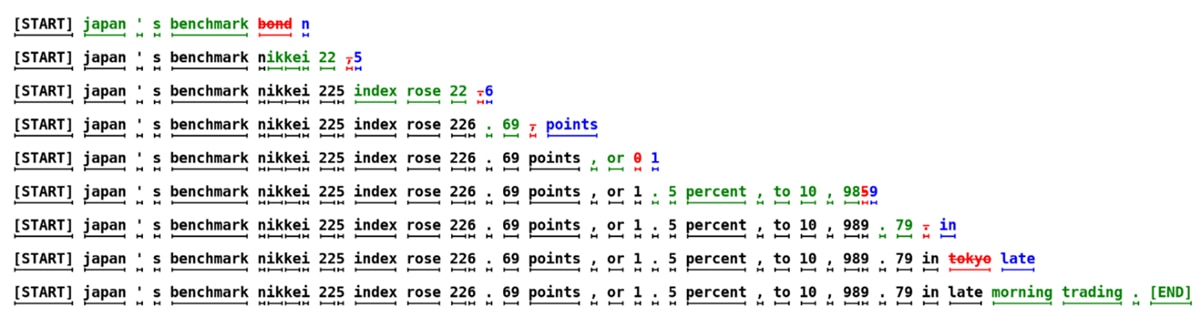

Speculative decoding addresses this problem. The basic principle is to use a small draft model to generate a set of candidate tokens, which are then verified by the larger target model. This approach allows each forward pass of the target model to produce multiple tokens without compromising output quality, thereby significantly reducing memory access overhead and enabling substantial speed improvements (roughly 1.7x to 3.9x in the configurations reported in the Inference Performance section).

An example of the specific process is illustrated below, where each row represents a forward pass. Green indicates accepted tokens, while red and blue represent rejected and suggested tokens, respectively. In the example below, an average of four tokens can be generated per forward pass, reducing bandwidth requirements while enhancing inference speed.

Figure 4: Image from "Fast Inference from Transformers via Speculative Decoding"
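The draft-then-verify loop described above can be sketched in a few lines. This is a toy illustration of the greedy variant only, not the implementation used in the experiments: the "models" are plain functions from a token prefix to the next token, and `speculative_decode`, `greedy_decode`, `toy_target`, and `toy_draft` are made-up names. A real target model scores all candidate positions in a single batched forward pass; here that pass is emulated with per-position calls and counted once. Random sampling needs an additional accept/reject step that is not shown.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # greedy "model": token prefix -> next token


def greedy_decode(target: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Baseline: one target call (one forward pass) per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target(tokens))
    return tokens


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, number of target verification passes)."""
    tokens = list(prompt)
    goal = len(prompt) + max_new_tokens
    target_passes = 0
    while len(tokens) < goal:
        # 1) The draft model proposes k candidate tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2) The target model verifies the candidates (emulated single pass).
        target_passes += 1
        accepted: List[int] = []
        for i in range(k):
            expected = target(tokens + proposal[:i])
            if expected == proposal[i]:
                accepted.append(proposal[i])
            else:
                accepted.append(expected)  # first mismatch: keep the target's token
                break
        else:
            # All k candidates accepted: the same pass also yields one extra token.
            accepted.append(target(tokens + proposal))
        tokens.extend(accepted)
    return tokens[:goal], target_passes


def toy_target(prefix: List[int]) -> int:
    return (prefix[-1] * 3 + 1) % 17


def toy_draft(prefix: List[int]) -> int:
    # Agrees with the target except when the prefix length is a multiple of 3.
    guess = toy_target(prefix)
    return (guess + 1) % 17 if len(prefix) % 3 == 0 else guess


prompt = [1]
spec_tokens, passes = speculative_decode(toy_target, toy_draft, prompt, max_new_tokens=20)
baseline_tokens = greedy_decode(toy_target, prompt, max_new_tokens=20)
print(spec_tokens == baseline_tokens, passes)
```

In this toy setup the output is identical to plain greedy decoding, but the target is consulted in 7 verification passes instead of 20 single-token passes, which is the source of the speedup when the draft model is cheap relative to the target.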

Inference Performance

Using AMD-Llama-135M-code as a draft model for CodeLlama-7b, we tested the performance with and without speculative decoding on the MI250 accelerator for the data center, and on the Ryzen™ AI processor (with NPU kernel) for AI PCs. All experiments were conducted on the HumanEval dataset, using the Hugging Face assistant model feature for inference.

As shown in the table below, for the particular configurations that we tested using AMD-Llama-135M-code as the draft model, we saw a ~2.8x speedup on the Instinct MI250 accelerator, a ~3.88x speedup on the Ryzen AI CPU¹, and a ~2.98x speedup on the Ryzen AI NPU² versus inference without speculative decoding.³
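For readers who want to try a similar setup, the sketch below shows how a draft model can be passed to a target model through the `assistant_model` argument of `generate` in the Hugging Face Transformers library. It is not the benchmark script used for the results here. The repository IDs `codellama/CodeLlama-7b-hf` and `amd/AMD-Llama-135M-code` are assumptions about where the checkpoints are hosted, the function name `generate_with_draft` is made up, and the precision, device placement, and quantization used in the experiments are not reproduced.

```python
def generate_with_draft(prompt: str,
                        target_id: str = "codellama/CodeLlama-7b-hf",  # assumed repo ID
                        draft_id: str = "amd/AMD-Llama-135M-code",     # assumed repo ID
                        do_sample: bool = False,
                        max_new_tokens: int = 64) -> str:
    """Generate text with the target model, using the draft model as an assistant."""
    # Imported lazily so this module can be loaded without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(target_id)
    target = AutoModelForCausalLM.from_pretrained(target_id)
    draft = AutoModelForCausalLM.from_pretrained(draft_id)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = target.generate(
        **inputs,
        assistant_model=draft,      # enables assisted (speculative) generation
        do_sample=do_sample,        # False = greedy, True = random sampling
        max_new_tokens=max_new_tokens,
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_with_draft("def quick_sort(arr):")` downloads both checkpoints on first use, so it needs network access and enough memory for the 7B target model. The `do_sample` flag corresponds to the random-sampling on/off distinction in the results table.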

| Target Model Device | Draft Model Device | Random Sampling | Target Model HumanEval Pass@1 | Speculative Decoding HumanEval Pass@1 | Acceptance Rate | Throughput Speedup |
| --- | --- | --- | --- | --- | --- | --- |
| FP32 MI250 | FP32 MI250 | TRUE | 32.31% | 29.27% | 0.650355 | ~2.58x |
| FP32 MI250 | FP32 MI250 | FALSE | 31.10% | 31.10% | 0.657839 | ~2.80x |
| BF16 MI250 | BF16 MI250 | TRUE | 31.10% | 31.10% | 0.668822 | ~1.67x |
| BF16 MI250 | BF16 MI250 | FALSE | 34.15% | 33.54% | 0.665497 | ~1.75x |
| INT4 Ryzen NPU | BF16 Ryzen CPU | TRUE | 28.05% | 30.49% | 0.722913 | ~2.83x |
| INT4 Ryzen NPU | BF16 Ryzen CPU | FALSE | 28.66% | 28.66% | 0.738072 | ~2.98x |
| BF16 Ryzen CPU | BF16 Ryzen CPU | TRUE | 31.10% | 31.71% | 0.723971 | ~3.68x |
| BF16 Ryzen CPU | BF16 Ryzen CPU | FALSE | 33.54% | 33.54% | 0.727548 | ~3.88x |
| FP32 Ryzen CPU | FP32 Ryzen CPU | TRUE | 29.87% | 28.05% | 0.727214 | ~3.57x |
| FP32 Ryzen CPU | FP32 Ryzen CPU | FALSE | 31.10% | 31.10% | 0.738641 | ~3.66x |

Figure 5: AMD-Llama-135M-code Inference Performance Acceleration with Speculative Decoding on AMD Instinct MI250 Accelerator and Ryzen AI Processor⁵
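To get a feel for how an acceptance rate relates to tokens produced per target pass, the speculative decoding paper cited for Figure 4 gives a closed form: if each drafted token is accepted independently with probability α and the draft proposes γ tokens per step, the expected number of tokens per target pass is (1 - α^(γ+1)) / (1 - α). The sketch below evaluates that formula. It rests on assumptions this post does not confirm: that the "Acceptance Rate" column corresponds to α in that sense, and the value of γ, which is not reported here and is chosen arbitrarily. The function name `expected_tokens_per_pass` is made up.

```python
def expected_tokens_per_pass(alpha: float, gamma: int) -> float:
    """Expected tokens per target pass under an i.i.d. acceptance model.

    alpha: probability that a single drafted token is accepted (0 <= alpha < 1).
    gamma: number of tokens the draft model proposes per step (assumed value).
    """
    if not 0.0 <= alpha < 1.0:
        raise ValueError("alpha must be in [0, 1)")
    return (1.0 - alpha ** (gamma + 1)) / (1.0 - alpha)


# Illustrative inputs only: alpha is rounded from the table, gamma is a guess.
for alpha in (0.65, 0.73):
    print(alpha, round(expected_tokens_per_pass(alpha, gamma=4), 2))
```

This counts tokens per target pass only. The measured throughput speedup also depends on the cost of running the draft model and on the hardware, so the two numbers should not be expected to match exactly.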

Conclusion

AMD-135M SLM establishes an end-to-end workflow, encompassing both training and inferencing, on AMD GPU accelerators and Ryzen AI processors. This model helps ensure compliance with developer usability criteria by providing a reference implementation that adheres to best practices for model construction, pretraining, and deployment on AMD platforms to achieve optimal performance not only in the data center, but also on power-limited edge devices like AI PC. AMD is dedicated to releasing new models to the open-source community and is eager to see the innovations the community will develop.

Call to Action

You are welcome to download and try this model on AMD platforms. For more information about the training, inferencing, and insights of this model, please visit the AMD GitHub repository to access the code, and visit the Hugging Face Model Card to download the model file. As a further benefit, AMD plans to open dedicated cloud infrastructure, including the latest GPU instances, to AI developers. Please visit AMD Developer Cloud to request access and for usage details. For any questions, you may reach out to the AMD team at amd_ai_mkt@amd.com.

Footnotes

1. The training code for AMD-135M is based on TinyLlama, utilizing multi-node distributed training with PyTorch FSDP.

2. Tests were run on an AMD Ryzen 9 PRO 7940HS with Radeon 780M Graphics. The Ryzen AI APU architecture includes CPU and NPU kernels.

3. These are the configurations that we tested. You might get different results on other configurations.

4. The performance was tested on AMD Instinct MI250 + ROCm™ 6.0 using standardized tests with lm-evaluation-harness. Additionally, the model performance tests are independent of the hardware environment.

5. Hellaswag is a dataset and metric that tests how well LLMs can reason about physical situations;

   WinoGrande is a dataset and codebase for evaluating natural language understanding models on a challenging Winograd Schema task;

   SciQ is a dataset of closed-domain question answering tasks with text inputs and outputs;

   MMLU is a dataset of multiple-choice questions covering 57 subjects, from abstract algebra (groups, rings, fields, and polynomials) to the humanities and social sciences;

   ARC-Easy is a dataset of grade-school level science questions for testing advanced question answering systems.

6. SlimPajama is a deduplicated version of RedPajama, with sources including CommonCrawl, C4, GitHub, Books, ArXiv, Wikipedia, and StackExchange. We dropped the Books data from SlimPajama due to license issues;

   Project Gutenberg is a library of over 70,000 free eBooks.

7. Tests were run on an AMD Ryzen 9 PRO 7940HS with Radeon 780M Graphics. The Ryzen AI APU architecture includes CPU and NPU kernels.
