A Solid Foundation for AI Innovation

AI is the defining technology shaping the next generation of computing, as a new wave of AI applications evolves at a rapid pace. From creating realistic virtual environments for gaming and entertainment to helping scientists treat and cure diseases and helping humanity better prepare for climate change, AI has the potential to solve some of the world’s most important challenges. The explosion in generative AI and large language models (LLMs), coupled with the rapid pace of AI application innovation, is driving enormous demand for compute resources and requires solutions that are performant, energy efficient, and pervasive, and that can scale from cloud to edge and endpoints.

AMD is uniquely positioned with a broad portfolio of AI platforms. Built on innovative architectures like AMD XDNA™ (an adaptive dataflow architecture with AI Engines), AMD CDNA™ (a ground-breaking architecture for GPU acceleration in the data center), and AMD RDNA™ (an AI-accelerated architecture for gamers), AMD delivers a comprehensive foundation of CPUs, GPUs, FPGAs, adaptive SoCs, and other accelerators that can address the most demanding AI workloads with exascale-level performance.

AMD Instinct™ Accelerators

AMD has been investing in the data center accelerator market for many years. With generative AI and LLMs, the need for compute performance is growing exponentially for both training and inference. GPUs are at the center of enabling generative AI, and today AMD Instinct™ GPUs power many of the fastest supercomputers, which use AI to accelerate cancer research or to create state-of-the-art LLMs with billions of parameters for the global scientific community.

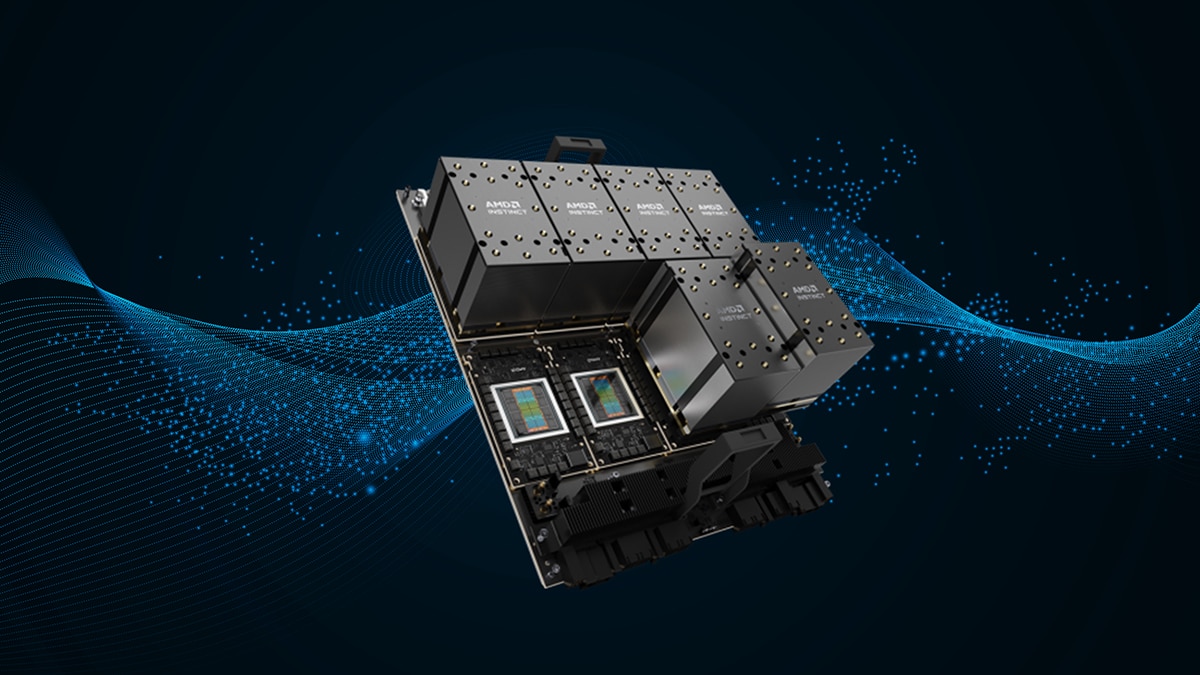

AMD Instinct accelerators are designed to significantly accelerate HPC and AI workloads. Built on the innovative AMD CDNA architecture and optimized for exascale-level performance and power efficiency, the AMD Instinct™ MI300A accelerator is the world’s first Accelerated Processing Unit (APU) for HPC and AI, delivering 24 “Zen 4” CPU cores and 128 GB of HBM3 memory shared across both CPU and GPU for incredible performance.

The AMD Instinct™ MI300X GPU is a 153-billion-transistor accelerator packed with performance designed specifically for the future of AI computing. Based on the same platform as the AMD Instinct MI300A accelerator, the AMD Instinct MI300X GPU has been rebuilt with generative AI acceleration at its core. By replacing the three AMD EPYC™ processor compute dies with two additional GPU chiplets, the AMD Instinct MI300X GPU increases the platform’s memory by 64 GB, for an astonishing total of 192 GB of HBM3. That is up to 2.4x the memory capacity of the competitor product, and its 5.2 TB/s of memory bandwidth is up to 1.6x the competitor’s bandwidth.[1]
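The capacity and bandwidth comparisons above follow from the formula in footnote MI300-005. The short Python sketch below reproduces that arithmetic. The inputs (8,192-bit bus, 5.6 Gbps data rate, 0.91 delivered adjustment, and the 80 GB / 3.35 TB/s H100 figures) come straight from the footnote; the constant and function names are illustrative only.

```python
# Inputs taken from footnote MI300-005; names are illustrative, not from any AMD tool.
BUS_WIDTH_BITS = 8192          # MI300X memory bus interface width, in bits
DATA_RATE_GBPS = 5.6           # memory data rate, Gbit/s per pin
DELIVERED_ADJUSTMENT = 0.91    # derating AMD applies to the theoretical peak

MI300X_CAPACITY_GB = 192
H100_CAPACITY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def sustained_bandwidth_tbps(bus_bits: int, rate_gbps: float, adjustment: float) -> float:
    """Sustained peak bandwidth in TB/s: (bits * Gbit/s / 8 bits per byte) * adjustment."""
    peak_gb_per_s = bus_bits * rate_gbps / 8   # theoretical peak in GB/s
    return peak_gb_per_s * adjustment / 1000   # apply derating, then convert GB/s to TB/s


bandwidth = sustained_bandwidth_tbps(BUS_WIDTH_BITS, DATA_RATE_GBPS, DELIVERED_ADJUSTMENT)
capacity_ratio = MI300X_CAPACITY_GB / H100_CAPACITY_GB
bandwidth_ratio = bandwidth / H100_BANDWIDTH_TBPS

print(f"Sustained bandwidth: {bandwidth:.3f} TB/s")          # 5.218 TB/s
print(f"Capacity ratio vs. H100: {capacity_ratio:.1f}x")     # 2.4x
print(f"Bandwidth ratio vs. H100: {bandwidth_ratio:.1f}x")   # 1.6x
```

The unrounded bandwidth ratio is about 1.56x, which the text rounds to "up to 1.6x".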

The new AMD Instinct platform will also enable data center customers to combine up to eight AMD Instinct MI300X GPUs in an industry-standard design, creating a simple drop-in solution that delivers up to 1.5 TB of HBM3 memory. Powered by AMD ROCm™, an open and proven software platform that features open-source languages, compilers, libraries, and tools, AMD Instinct MI300X accelerators offer a powerful AI infrastructure upgrade.
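The 1.5 TB platform figure is the per-GPU capacity multiplied by the eight accelerators (1,536 GB in decimal units, which the text rounds to 1.5 TB):

```latex
8 \times 192\ \text{GB} = 1536\ \text{GB} \approx 1.5\ \text{TB}
```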

Processors for AI Acceleration

Success in AI requires multiple AI engines from cloud to edge to endpoints. In addition to the AMD Instinct accelerators, AMD EPYC processors support a broad range of AI workloads in the data center, delivering competitive performance based on industry-standard benchmarks like TPCx-AI, which measures end-to-end AI pipeline performance across 10 different use cases and a host of different algorithms.[2]

With up to 96 cores per 4th Gen AMD EPYC processor, off-the-shelf servers can accelerate many data center and edge applications across areas such as customer support, retail, automotive, financial services, medical, and manufacturing.

Additional Products in the AMD AI Portfolio

AMD recently launched the AMD Ryzen™ 7040 Series processors for consumer and commercial PCs, which include the world’s first dedicated AI engine on an x86 processor.[3] Powered by AMD XDNA technology, this new AI accelerator can run up to 10 trillion AI operations per second (TOPS), providing seamless AI experiences with incredible battery life and speed and preparing users for the future of AI.

Customers are also using AMD Alveo™ accelerators, Versal™ adaptive SoCs, and leadership FPGAs in many industries, such as aerospace, where NASA’s Mars rovers use AMD technology to accelerate AI-based image detection, and automotive, where AMD technology powers driver-assist and advanced safety features. AMD technology also enables AI-assisted robotics for industrial applications and drives faster, more accurate diagnoses in medical applications.

AMD Enables Open Ecosystem to Accelerate Innovation

In addition to delivering a hardware portfolio that can handle the most demanding AI workloads, AMD is driving the development of AI software that is open and accessible. Developers and partners can leverage AMD software tools to optimize AI applications on AMD hardware. Today, the stack includes AMD ROCm for AMD Instinct GPU accelerators; AMD Vitis™ AI for adaptive accelerators, SoCs, and FPGAs; and AMD open-source libraries for AMD EPYC processors.

AMD is also building an AI ecosystem with hardware and open software, tools, libraries, and models that help lower the barriers to entry for developers and researchers. Two recent examples are AMD’s work with the PyTorch Foundation and with Hugging Face.

Earlier this year, PyTorch, the popular AI framework, launched its 2.0 stable version, representing a significant step forward and unlocking even higher performance for users. As a founding member of the PyTorch Foundation, AMD is delighted that the PyTorch 2.0 release includes support for AMD Instinct accelerators and AMD Radeon™ graphics through the AMD ROCm open software platform.
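On ROCm builds of PyTorch, AMD GPUs are exposed through the same `torch.cuda` interface used for other GPUs, and `torch.version.hip` holds the HIP version string (it is `None` on non-ROCm builds). The sketch below, assuming a Python environment where PyTorch may or may not be installed, reports which backend is present; the helper name `describe_torch_backend` is hypothetical.

```python
import importlib.util


def describe_torch_backend() -> str:
    """Return a short description of the available PyTorch GPU backend (hypothetical helper)."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"

    import torch

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version and torch.cuda.is_available():
        # ROCm builds reuse the torch.cuda API, so AMD GPUs appear as "cuda" devices.
        return f"ROCm {hip_version}: {torch.cuda.get_device_name(0)}"
    if hip_version:
        return f"ROCm {hip_version} build, but no AMD GPU detected"
    if torch.cuda.is_available():
        return "CUDA (non-ROCm) GPU available"
    return "CPU only"


if __name__ == "__main__":
    print(describe_torch_backend())
```

Because ROCm builds keep the `"cuda"` device name, existing code that calls `tensor.to("cuda")` typically runs unchanged on supported AMD GPUs.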

Hugging Face, a leading open-source AI platform with more than 500,000 models and datasets available, announced a strategic collaboration with AMD to optimize models for AMD platforms, driving maximum performance and compatibility for thousands of LLMs that customers will use across personal and commercial applications. AMD will also optimize its products to enhance performance and productivity with the models offered by Hugging Face and its users. This collaboration will provide the open-source community with an excellent end-to-end choice for accelerating AI innovation and make AI model training and inferencing more broadly accessible to developers.

Summary

AMD is making the benefits of AI pervasive by enabling customers to tackle AI deployment with ease and by delivering solutions that scale across a wide range of applications, from processors and adaptive SoCs using AI engines, to edge inferencing, to large-scale AI inference and training in data centers.

Reach out to your local AMD contact to learn more about the new solutions available today and those coming to market later this year.


Footnotes

- [1] MI300-005: Calculations conducted by AMD Performance Labs as of May 17, 2023, for the AMD Instinct™ MI300X OAM accelerator 750W (192 GB HBM3) designed with AMD CDNA™ 3 5nm FinFET process technology resulted in 192 GB HBM3 memory capacity and 5.218 TB/s sustained peak memory bandwidth performance. The MI300X memory bus interface is 8,192 bits and the memory data rate is 5.6 Gbps, for a total sustained peak memory bandwidth of 5.218 TB/s ((8,192-bit memory bus interface * 5.6 Gbps memory data rate / 8) * 0.91 delivered adjustment). The highest published results on the NVIDIA Hopper H100 (80GB) SXM GPU accelerator resulted in 80GB HBM3 memory capacity and 3.35 TB/s GPU memory bandwidth performance.

- [2] SP5-005C: SPECjbb® 2015-MultiJVM Max comparison based on published results as of 11/10/2022. Configurations: 2P AMD EPYC 9654 (815459 SPECjbb®2015 MultiJVM max-jOPS, 356204 SPECjbb®2015 MultiJVM critical-jOPS, 192 total cores, http://www.spec.org/jbb2015/results/res2022q4/jbb2015-20221019-00861.html) vs. 2P AMD EPYC 7763 (420774 SPECjbb®2015 MultiJVM max-jOPS, 165211 SPECjbb®2015 MultiJVM critical-jOPS, 128 total cores, http://www.spec.org/jbb2015/results/res2021q3/jbb2015-20210701-00692.html). SPEC® and SPECrate® are registered trademarks of the Standard Performance Evaluation Corporation. See www.spec.org for more information.

- [3] PHX-3a: As of May 2023, AMD has the first available dedicated AI engine on an x86 Windows processor, where 'dedicated AI engine' is defined as an AI engine that has no function other than to process AI inference models and is part of the x86 processor die. For detailed information, please check: https://www.amd.com/en/technologies/xdna.html.
