
Delivering Breakthrough AI Performance/Watt for Real-Time Systems

The Versal AI Edge series delivers high-performance, low-latency AI inference for intelligence in automated driving, predictive factory and healthcare systems, multi-mission payloads in aerospace and defense, and many other applications. Beyond AI inference, the series accelerates the whole application from sensor to AI to real-time control while meeting critical safety and security requirements such as ISO 26262 and IEC 61508. As an adaptive compute acceleration platform, it lets developers rapidly evolve their sensor fusion and AI algorithms across a scalable device portfolio that covers diverse performance and power profiles from edge to endpoint.

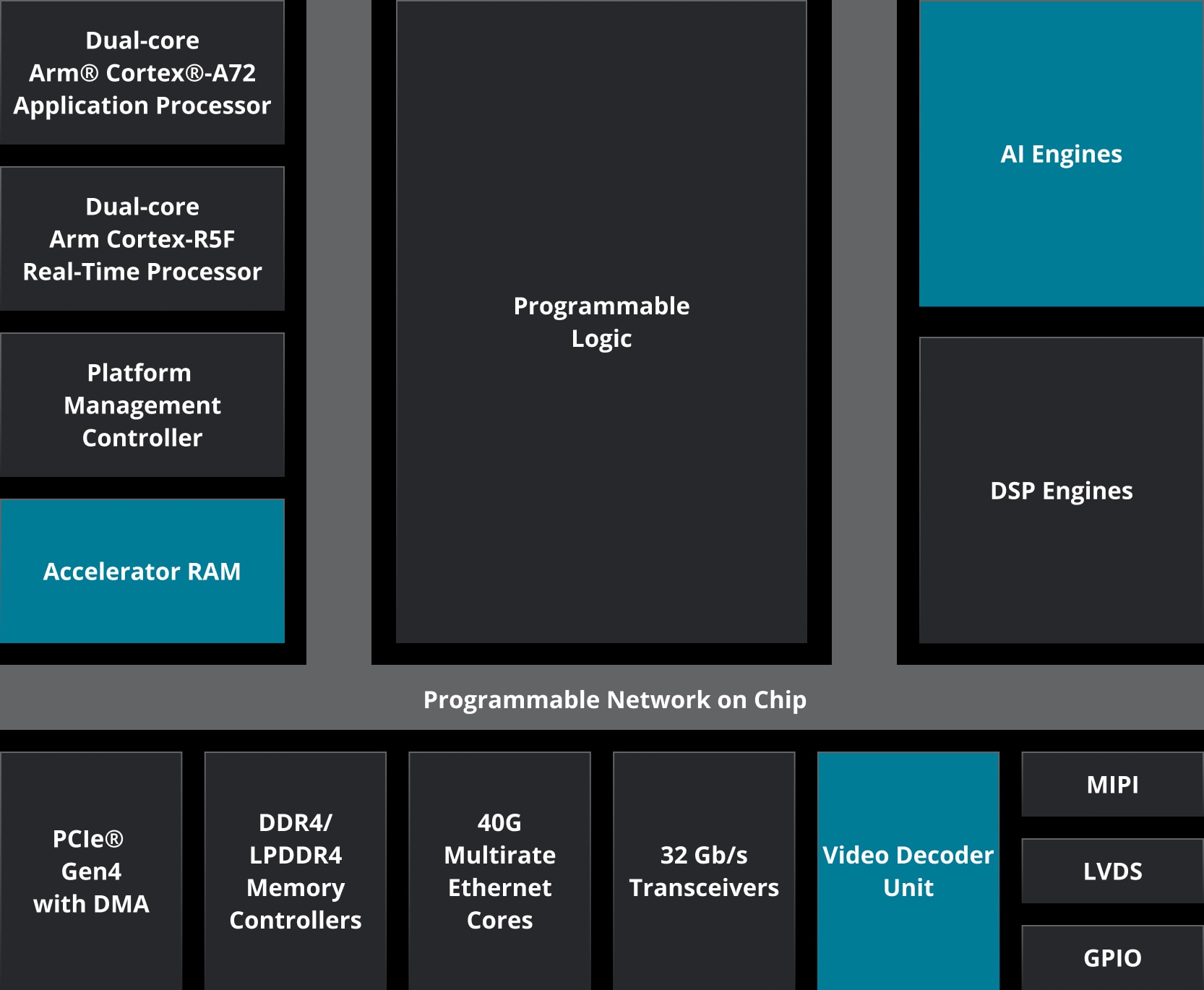

Processing systems deliver power-efficient embedded compute with the safety and security required in real-time systems. The dual-core Arm® Cortex®-A72 application processor is ideal for running Linux-class applications, while the dual-core Arm Cortex-R5F real-time processor runs safety-critical code for the highest levels of functional safety (ASIL and SIL). The platform management controller (PMC) is based on a triple-redundant processor and manages device operation, including platform boot, advanced power and thermal management, security, safety, and reliability across the platform.

At the heart of the Versal architecture's flexibility is its programmable logic, which enables the integration of any sensor, connectivity to any interface, and the flexibility to handle any workload. Capable of both parallelism and determinism, programmable logic can implement and adapt sensor fusion algorithms, accelerate data pre- and post-processing across the pipeline, implement deterministic networking and motor control for real-time response, isolate safety-critical functions for fail-safe operation, and provide hardware redundancy and fault resilience.

Both AI Engines and DSP Engines support a breadth of workloads common in edge applications, including AI inference, image processing, and motion control. AI Engines are built on a scalable array of vector processors and distributed memory, an architecture designed for high AI performance/watt. DSP Engines are based on the proven slice architecture of previous-generation Zynq™ adaptive SoCs, now with integrated floating-point support, and are suited to wireless and image signal processing, data analytics, motion control, and more.

Versal adaptive SoCs were built from the ground up to meet the most stringent safety requirements in industrial and automotive applications, including ISO 26262 and IEC 61508 for safety, and IEC 62443 for security. The Versal architecture is partitioned with safety features in each domain, as well as global resources to monitor and eliminate common cause failures. New security features over previous-generation adaptive SoCs improve protection against cloning, IP theft, and cyber-attacks, including higher-bandwidth AES encryption/decryption and SHA hashing, glitch detection, and more.

The accelerator RAM features 4 MB of on-chip memory. The memory block is accessible to all compute engines and helps eliminate the need to go to external memory for critical compute functions such as AI inference. This enhances the already flexible memory hierarchy of the Versal architecture and improves AI performance/watt. The accelerator RAM is also ideal for holding safety-critical code that exceeds the capacity of the real-time processor’s OCM, improving the ability to meet ASIL-C and ASIL-D requirements.
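As a rough illustration of the sizing question this raises, the sketch below checks whether a set of buffers fits within the 4 MB accelerator RAM. The buffer names and sizes are hypothetical, the capacity is assumed to be 4 binary megabytes, and the check ignores alignment, banking, and any memory the platform reserves for itself.

```python
# Assumption: "4 MB" is read as 4 * 1024 * 1024 bytes (32 Mb in the device table).
ACCEL_RAM_BYTES = 4 * 1024 * 1024


def fits_in_accel_ram(buffers: dict[str, int],
                      capacity: int = ACCEL_RAM_BYTES) -> tuple[bool, int]:
    """Return (fits, bytes_remaining) for a set of buffer sizes given in bytes."""
    used = sum(buffers.values())
    return used <= capacity, capacity - used


# Hypothetical INT8 workload: one byte per weight or activation element.
example_buffers = {
    "conv_weights_int8": 1_500_000,
    "activations_int8": 2 * 640 * 480,  # two 640x480 single-channel frames
    "safety_code": 512 * 1024,          # code that does not fit in the OCM
}

fits, remaining = fits_in_accel_ram(example_buffers)
print(f"fits={fits}, remaining={remaining} bytes")
```

A negative `remaining` value indicates how many bytes would have to spill to external memory.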

Programmable I/O on Versal adaptive SoCs allows connection to any sensor or interface and can scale to meet future interface requirements. Designers can configure the same I/O for sensors, memory, or network connectivity, and budget device pins as needed. Different I/O types provide a wide range of speeds and voltages for both legacy and next-generation standards, e.g., 3.2 Gb/s DDR for server-class memory interfacing, 4.2 Gb/s LPDDR4x for the highest memory bandwidth per pin, and native MIPI support for sensors of 8 megapixels and beyond, which is critical to Level-2 ADAS and above.
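To make the pin-budgeting trade-off concrete, the sketch below estimates theoretical peak memory bandwidth from a per-pin data rate and a data-pin count. It assumes the quoted 3.2 Gb/s and 4.2 Gb/s figures are per-pin rates; the bus widths are hypothetical, and real throughput will be lower once protocol overhead and refresh are accounted for.

```python
def peak_bandwidth_gbytes(rate_gbps_per_pin: float, data_pins: int) -> float:
    """Theoretical peak in GB/s: per-pin rate times pin count, divided by 8 bits per byte."""
    if data_pins <= 0:
        raise ValueError("data_pins must be positive")
    return rate_gbps_per_pin * data_pins / 8


# Per-pin rates come from the text; the 64- and 32-pin widths are assumptions.
ddr = peak_bandwidth_gbytes(3.2, data_pins=64)
lpddr4x = peak_bandwidth_gbytes(4.2, data_pins=32)
print(f"DDR x64: {ddr:.1f} GB/s, LPDDR4x x32: {lpddr4x:.1f} GB/s")
```

The same function can be used to compare how many pins each standard needs to reach a target bandwidth.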

The Versal AI Edge series delivers scalability, performance, flexibility, reliability, security, and long lifecycles for the most demanding real-time AI-driven embedded systems.

AI compute performance is a key requirement for automotive tier-1s and OEMs targeting SAE Level 3 and beyond, in addition to meeting stringent thermal, reliability, security, and safety requirements. The Versal AI Edge series was architected for the highest AI performance/watt in power- and thermally-constrained systems. As a heterogeneous compute platform, Versal AI Edge adaptive SoCs match the right processing engine to the workload across the vehicle: custom I/O for any needed combination of radar, LiDAR, infrared, GPS, and vision sensors; Adaptable Engines for sensor fusion and pre-processing; AI Engines for inference and perception processing; and Scalar Engines for safety-critical decision making. Versal AI Edge adaptive SoCs are part of the AMD automotive-qualified (XA) product portfolio and are architected to meet stringent ISO 26262 requirements.

Robotics integrates precision control, deterministic communications, machine vision, responsive AI, cybersecurity, and functional safety into a single "system of systems." Versal AI Edge adaptive SoCs enable a modular and scalable approach to robotics by providing a single heterogeneous device for fusing heterogeneous sensors for robotic perception, precise and deterministic control over a scalable number of axes, isolation of safety-critical functions, accelerated motion planning, and AI to augment safety controls for dynamic, context-based execution. The Versal AI Edge series also accelerates real-time analytics with machine learning to support predictive maintenance and deliver actionable insights via cybersecure (IEC 62443) network connectivity.

The Versal AI Edge series is optimized for real-time, high-performance applications in the most demanding environments, such as multi-mission drones and UAVs. A single Versal AI Edge device can support multiple inputs, including comms datalinks, navigation, radar for target tracking and IFF (Identification Friend or Foe), and electro-optical sensors for visual reconnaissance. The heterogeneous engines aggregate and pre-process incoming data and sensor input, perform waveform processing and signal conditioning, and ultimately perform low-latency AI for target tracking and flight-path optimization, as well as cognitive RF to identify adversarial signals or channel attacks. The Versal AI Edge series delivers both the intelligence and the low SWaP (size, weight, and power) needed for multi-mission, situationally aware UAVs.

Medical devices are increasingly required to be smaller, portable, and battery driven for Point-of-Care applications, without compromising patient safety and while still satisfying regulatory requirements. The Versal AI Edge series accelerates parallel beamforming and real-time image processing to create higher quality images and analysis, with the power efficiency needed for long-life, battery-powered portable ultrasound units. As a heterogeneous compute platform, the Versal AI Edge series implements each stage of the pipeline. Adaptable Engines perform acquisition functions, including control of the analog front-end. AI Engines accelerate advanced imaging techniques, as well as machine learning for diagnostic assistance and efficiency improvements. The Arm® subsystem hosts the Linux-class OS for orchestrating, updating, and providing infrastructure across the data pipeline. The Versal AI Edge series scales from portable, to desktop, to cart-based ultrasound solutions.

The AMD Embedded+ architecture combines AMD Ryzen™ Embedded processors and AMD Versal™ adaptive SoCs on a single board to deliver integrated, scalable, and power-efficient solutions that accelerate time-to-market through product offerings from the ODM ecosystem. Embedded+ targets sensor fusion, AI inferencing, industrial networking, control, and visualization in industrial and medical PCs.

| | VE2002 | VE2102 | VE2202 | VE2302 | VE1752 | VE2602 | VE2802 |
|---|---|---|---|---|---|---|---|
| INT8 Max Sparsity | 11 | 16 | 32 | 45 | - | 202 | 405 |
| INT8 Dense | 5 | 8 | 16 | 23 | 101 | 101 | 202 |
| BFLOAT16 | 3 | 4 | 8 | 11 | - | 51 | 101 |

| | All devices (VE2002, VE2102, VE2202, VE2302, VE1752, VE2602, VE2802) |
|---|---|
| Application Processing Unit | Dual-core Arm® Cortex®-A72, 48 KB/32 KB L1 Cache w/ parity & ECC; 1 MB L2 Cache w/ ECC |
| Real-Time Processing Unit | Dual-core Arm Cortex-R5F, 32 KB/32 KB L1 Cache, and 256 KB TCM w/ ECC |
| Memory | 256 KB On-Chip Memory w/ ECC |
| Connectivity | Ethernet (x2); UART (x2); CAN-FD (x2); USB 2.0 (x1); SPI (x2); I2C (x2) |

| | VE2002 | VE2102 | VE2202 | VE2302 | VE1752 | VE2602 | VE2802 |
|---|---|---|---|---|---|---|---|
| AI Engine-ML | 8 | 12 | 24 | 34 | 0 | 152 | 304 |
| AI Engines | 0 | 0 | 0 | 0 | 304 | 0 | 0 |
| DSP Engines | 90 | 176 | 324 | 464 | 1,312 | 984 | 1,312 |

| | VE2002 | VE2102 | VE2202 | VE2302 | VE1752 | VE2602 | VE2802 |
|---|---|---|---|---|---|---|---|
| System Logic Cells (K) | 44 | 80 | 230 | 329 | 981 | 820 | 1,139 |
| LUTs | 20,000 | 36,608 | 105,000 | 150,272 | 448,512 | 375,000 | 520,704 |

| | VE2002 | VE2102 | VE2202 | VE2302 | VE1752 | VE2602 | VE2802 |
|---|---|---|---|---|---|---|---|
| Accelerator RAM (Mb) | 32 | 32 | 32 | 32 | 0 | 0 | 0 |
| Total Memory (Mb) | 46 | 54 | 86 | 103 | 253 | 243 | 263 |
| NoC Master / NoC Slave Ports | 2 | 2 | 5 | 5 | 21 | 21 | 21 |
| PCIe® w/ DMA (CPM) | - | - | - | - | 1 x Gen4x16 | 1 x Gen4x16 | 1 x Gen4x16 |
| PCI Express® | - | - | 1 x Gen4x8 | 1 x Gen4x8 | 4 x Gen4x8 | 4 x Gen4x8 | 4 x Gen4x8 |
| 40G Multirate Ethernet MAC | 0 | 0 | 1 | 1 | 2 | 2 | 2 |
| Video Decoder Engines (VDEs) | - | - | - | - | - | 2 | 4 |
| GTY Transceivers | 0 | 0 | 0 | 0 | 44 | 0 | 0 |
| GTYP Transceivers | 0 | 0 | 8 | 8 | 0 | 32 | 32 |
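
One way to use the figures in these tables is to shortlist devices against a set of requirements. The sketch below is a minimal example under stated assumptions: the `Device` class and `candidates` function are hypothetical helpers, not part of any AMD tool, and only three table rows (INT8 Dense, Accelerator RAM, GTYP Transceivers) are transcribed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    int8_dense: int    # "INT8 Dense" row, in the unit used by the table
    accel_ram_mb: int  # "Accelerator RAM (Mb)" row
    gtyp: int          # "GTYP Transceivers" row


# Values transcribed from the tables above.
DEVICES = [
    Device("VE2002", 5, 32, 0),
    Device("VE2102", 8, 32, 0),
    Device("VE2202", 16, 32, 8),
    Device("VE2302", 23, 32, 8),
    Device("VE1752", 101, 0, 0),
    Device("VE2602", 101, 0, 32),
    Device("VE2802", 202, 0, 32),
]


def candidates(min_int8_dense: int = 0,
               need_accel_ram: bool = False,
               min_gtyp: int = 0) -> list[Device]:
    """Return devices meeting every requirement, lowest INT8 Dense figure first."""
    hits = [
        d for d in DEVICES
        if d.int8_dense >= min_int8_dense
        and (d.accel_ram_mb > 0 or not need_accel_ram)
        and d.gtyp >= min_gtyp
    ]
    return sorted(hits, key=lambda d: d.int8_dense)


# Example query: at least 16 on the INT8 Dense row, with accelerator RAM on chip.
for dev in candidates(min_int8_dense=16, need_accel_ram=True):
    print(dev.name)
```

Sorting by the INT8 Dense figure is only a stand-in for device size; a real selection would also weigh power, package, and cost, which these tables do not list.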

AMD provides a leading software development environment for designing with adaptive SoCs and FPGAs, including tools (compilers, simulators, etc.), IP, and solutions.

This environment can reduce development time while allowing developers to achieve high performance per watt. AMD adaptive SoC and FPGA design tools enable all types of developers, including AI scientists, application and algorithm engineers, embedded software developers, and traditional hardware developers, to use AMD adaptive computing solutions.

Start evaluating Versal AI Edge series capabilities today with the VEK280 evaluation kit featuring VE2802 silicon. Leveraging the on-chip AI Engines optimized for ML inference, this platform is ideal for developing compute-intensive, power-sensitive ML applications.

Jump-start your design cycle and achieve fast time-to-market with the proven hardware, software support, tools, design examples, and documentation available for the kit.
