Delivering the Modern Data Center

Jun 24, 2022

Five years ago, AMD announced our return to the data center market with the introduction of the first-generation EPYC™ processors. We unsettled the status quo with a disruptive leap forward in CPU core count, I/O capabilities, and memory. Since then, we’ve built out a compelling roadmap of server products that have established us as the innovation and performance leader in the data center and rapidly grown our share of the market.

But data center needs continue to evolve, and the modern data center requires a variety of compute engines to serve an increasingly diverse set of applications and to scale performance. At our recent Financial Analyst Day, AMD shared a glimpse of this future: how we will provide the broadest set of engines to power the data center while delivering better efficiency and world-class security.

It All Begins with EPYC™

AMD EPYC™ processors continue our journey of performance leadership. From the original “Zen” core architecture to the current “Zen 3”, AMD has increased the number of EPYC™ computing cores, created products specialized for critical workloads, and grown the number of EPYC™-based solutions from 50 at launch to more than 1,000.

Never content to rest on their laurels, our teams continue to innovate. Over the next several quarters, AMD will launch four new 4th Gen EPYC™ products based on the “Zen 4” core architecture: “Genoa”, “Genoa-X”, “Siena”, and “Bergamo”. “Genoa”, the AMD flagship 4th Gen EPYC™ processor, is expected to provide leadership socket and per-core performance. With up to 96 “Zen 4” cores, a top-of-stack “Genoa” is expected to deliver at least 75% faster enterprise Java® performance than the 3rd Gen EPYC™ 7763 64-core CPU.¹ Set to launch in Q4 of this year, it is designed to be a step function in performance leadership.

Tailored for the growing number of scale-out cloud workloads, “Bergamo” is designed specifically for cloud-native computing. “Genoa-X” will be optimized for leadership performance in technical computing² and relational databases, while “Siena” is designed for intelligent edge and telco deployments.

From powerful general purpose to workload-optimized CPUs, AMD continues to offer leadership x86 EPYC™ CPUs to drive higher performance, energy efficiency, and time to value for customers. The age of EPYC™ is here and we’re just getting started.

Accelerating the Data Center

But the evolution of the modern data center is going to require scale and security capabilities that can’t be delivered by the CPU alone. For many workloads, including scientific modeling and simulation, AI, financial trading, real-time data analytics and video transcoding, accelerators are required to maximize a data center’s performance.

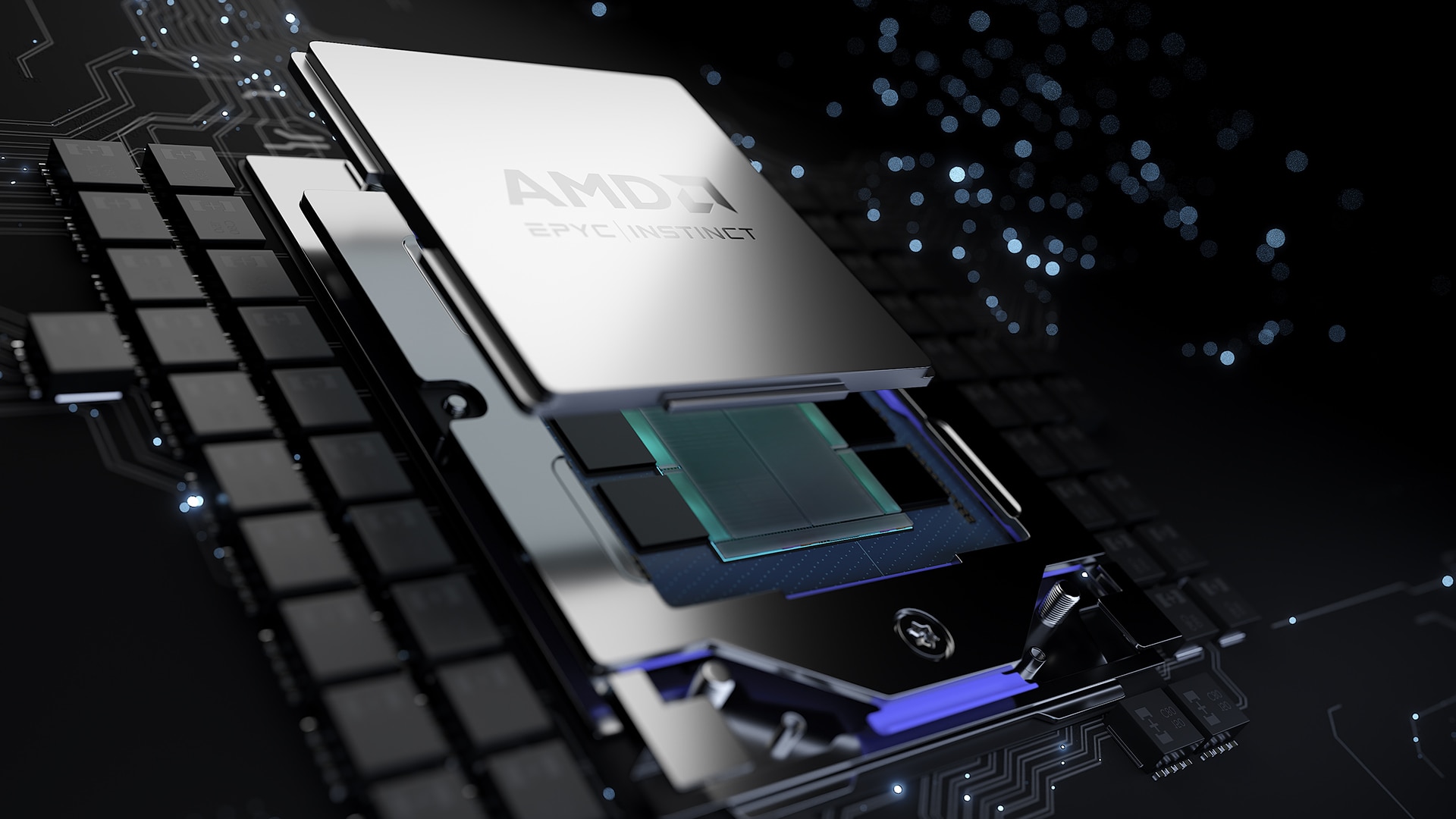

AMD Instinct™ GPU: HPC Leadership

Enter AMD Instinct™ GPUs. In November, we unveiled the MI200 series accelerators, built to run some of the most demanding HPC workloads, including scientific modeling and AI training. Last month, ORNL announced that its Frontier supercomputer, based on AMD EPYC™ CPUs and Instinct™ GPUs, broke the exascale barrier, making it the fastest supercomputer in the world. In addition, the Frontier Test & Development System took the top spot on the Green500 list, delivering more than 62 gigaflops/watt and becoming the most energy-efficient supercomputer in the world.

In 2023, AMD plans to launch the AMD Instinct™ MI300, the world’s first data center APU. The MI300 is expected to deliver 8X the AI training performance of the MI250X.³

Adaptive Computing

With the acquisition of Xilinx earlier this year, AMD is delivering leadership FPGAs and SoCs for evolving workloads across the data center. From 5G deployments to adaptive platforms for network acceleration in hyperscale environments, Xilinx products provide customers the flexibility they need.

In addition to providing flexibility and adaptability to customers, the AMD XDNA architecture is designed to enable pervasive AI, helping companies take advantage of the scale and capabilities of AI workloads. Combined with EPYC™ CPUs and Instinct™ GPUs, the broad AMD portfolio is poised to deliver end-to-end AI solutions with leadership data center performance and TCO.

Innovating Data Center Infrastructure

The modern data center is a software-defined data center, with virtualization, software-defined storage, software-defined networking, and other technologies delivering huge advances in flexibility, agility, and TCO. But the software infrastructure that enables those benefits is itself a huge consumer of compute and a tax on IT resources. In many clouds, up to 30% of the CPU cores can be consumed by overhead required to run cloud services, reducing the resources that can run end applications. Beyond the data center itself, many workloads are moving to the edge for latency or scale reasons. As more compute happens at the edge, it becomes increasingly important that security and manageability be extended to the edge as well, further increasing the infrastructure tax.

Pensando, the newest addition to the AMD portfolio, provides turnkey products that help scale today’s software-defined data centers. The Pensando “Elba” DPU allows cloud systems to offload most of this overhead and free up host cores for end applications. It can also provide stateful firewall services on every network port of a server, enabling strong security at every endpoint and helping extend security to the edge of the network.
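To put the “up to 30%” infrastructure tax in concrete terms, the short Python sketch below computes how many host cores remain for end applications, and how many a DPU could hand back if it absorbs some share of that overhead. The 64-core host and the offload fractions are hypothetical inputs chosen for illustration, not measured Pensando results; the post says only that “Elba” offloads “most” of the overhead.

```python
def application_cores(total_cores: int, overhead_fraction: float,
                      offloaded_fraction: float = 0.0) -> float:
    """Return the host cores left for end applications.

    total_cores: physical cores on the host (hypothetical example value).
    overhead_fraction: share of cores consumed by infrastructure services
        such as virtual networking, storage, and security (e.g. 0.30).
    offloaded_fraction: share of that overhead moved to a DPU; an
        illustrative assumption, not a measured figure.
    """
    for name, value in (("overhead_fraction", overhead_fraction),
                        ("offloaded_fraction", offloaded_fraction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Overhead that still runs on the host CPU after part of it is offloaded.
    remaining_overhead = total_cores * overhead_fraction * (1.0 - offloaded_fraction)
    return total_cores - remaining_overhead


if __name__ == "__main__":
    host = 64  # hypothetical 64-core server
    for offload in (0.0, 0.5, 0.9, 1.0):
        free = application_cores(host, 0.30, offload)
        print(f"offload {offload:.0%}: {free:.1f} of {host} cores for applications")
```

With no offload, about 19 of the 64 cores go to infrastructure, leaving 44.8 for applications; offloading all of that work would return every core to applications, roughly 1.4x as many as before.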

The Future is Here

Five years ago, AMD committed to driving technology leadership in the data center with a “Zen”-based roadmap customers can count on. In the years ahead, we remain committed to pushing the boundaries to help customers solve their toughest data center challenges, from energy efficiency to analyzing huge data sets with AI.

AMD powers and accelerates the modern data center by providing products with leadership performance, efficiency, and security features.


Resources

- SP5-005: Server-side Java multiJVM workload demo comparison based on AMD measured testing as of 6/2/2022. Configurations: 2x 96-core 4th Gen AMD EPYC™ (pre-production silicon) on a reference system versus 2x 64-core EPYC™ 7763 on a reference system. Java version: JDK 18. OEM published scores will vary based on system configuration and use of production silicon.

- GD-204: “Technical Computing” or “Technical Computing Workloads” as defined by AMD can include electronic design automation, computational fluid dynamics, finite element analysis, seismic tomography, weather forecasting, quantum mechanics, climate research, molecular modeling, or similar workloads.

- MI300-003: Measurements by AMD Performance Labs as of June 4, 2022. MI250X FP16: 306.4 estimated delivered TFLOPS, based on 80% of peak theoretical floating-point performance. MI300 FP8 performance based on preliminary estimates and expectations. Final performance may vary.