AMD Instinct MI350 Series and Beyond: Accelerating the Future of AI and HPC

Jun 12, 2025

At a Glance:

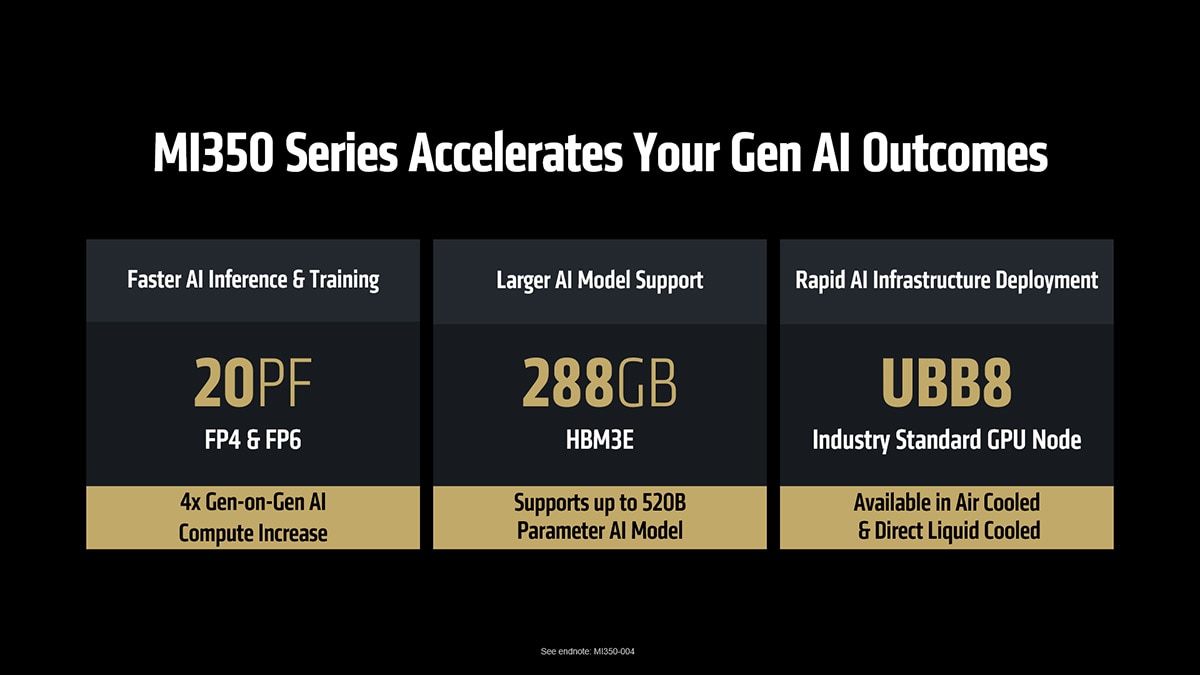

- AMD launched the AMD Instinct™ MI350 Series, delivering up to 4x generation-on-generation AI compute improvement and up to 35x leap in inferencing performance.

- AMD launched ROCm 7.0 with the preview version of the software offering up to 4x inference1 and up to 3x training performance improvement2 over ROCm 6.0

- AMD also showcased its new developer cloud to empowering AI developers with seamless access to AMD Instinct GPUs and ROCm software for their AI innovation.

- The company also previewed its next-gen “Helios” AI reference design rack integrating AMD Instinct MI400 GPUs, EPYC “Venice” CPUs, and Pensando “Vulcano” NICs for unprecedented AI compute density and scalability.

The world of AI isn’t slowing down—and neither are we. At AMD, we’re not just keeping pace, we’re setting the bar. Our customers are demanding real, deployable solutions that scale, and that’s exactly what we’re delivering with the AMD Instinct MI350 Series. With cutting-edge performance, massive memory bandwidth, and flexible, open infrastructure, we’re empowering innovators across industries to go faster, scale smarter, and build what’s next.

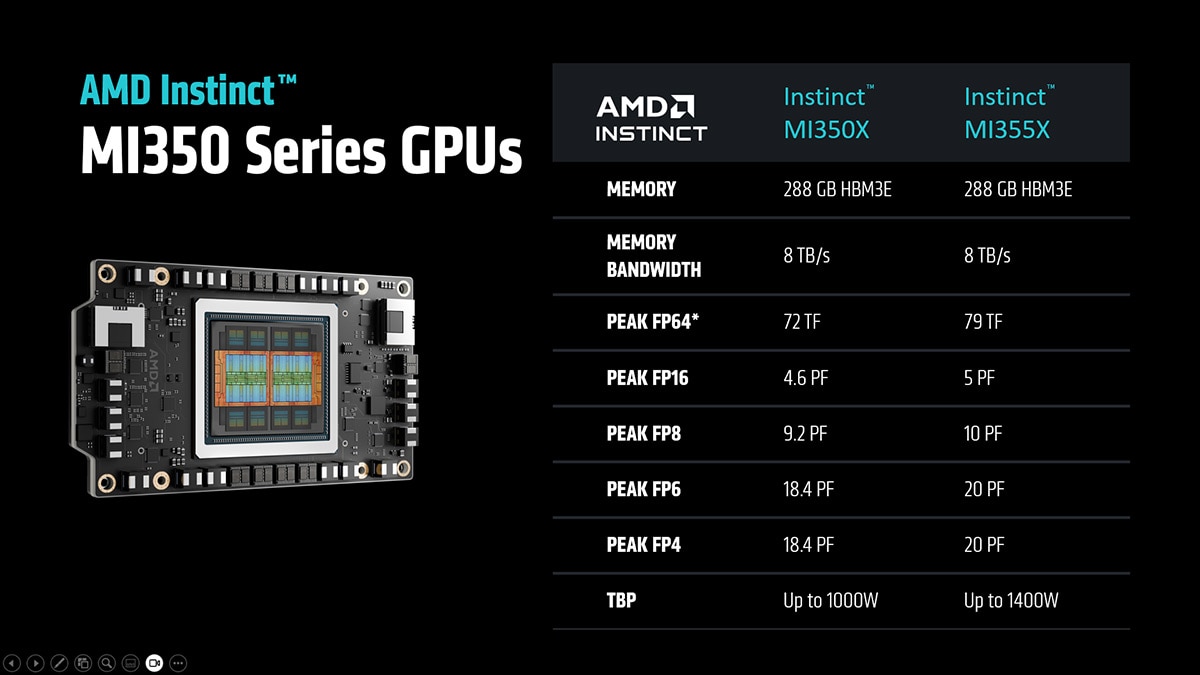

Powering Tomorrow’s AI Workloads

Built on the AMD CDNA™ 4 architecture, the AMD Instinct MI350X and MI355X GPUs are purpose-built for the demands of modern AI infrastructure. The MI350 Series, delivers up to 4x, generation-on-generation AI compute increase3 as well as up to a 35x generational leap in inferencing4, paving the way for transformative AI solutions across industries. These GPUs deliver leading memory capacity (288GB HBM3E from Micron and Samsung Electronics) and bandwidth (up to 8TB/s), ensuring exceptional throughput for inference and training alike.

With flexible air-cooled and direct liquid-cooled configurations, the AMD Instinct MI350 Series is optimized for seamless deployment, supporting up to 64 GPUs in an air-cooled rack and up to 128 GPUs in a direct liquid-cooled racks, delivering up to 1.3 exaFLOPS of MXFP4/MXFP6 performance. The result is faster time-to-AI and reduced costs in an industry standards-based infrastructure.

The Numbers That Matter: Instinct MI350 Series Specifications

| SPECIFICATIONS (PEAK THEORETICAL) |

AMD INSTINCT™ MI350X GPU | AMD INSTINCT™ MI350X PLATFORM | AMD INSTINCT™ MI355X GPU | AMD INSTINCT™ MI355X PLATFORM |

| GPUs | Instinct MI350X OAM | 8 x Instinct MI350X OAM | Instinct MI355X OAM | 8 x Instinct MI355X OAM |

| GPU Architecture | CDNA 4 | CDNA 4 | CDNA 4 | CDNA 4 |

| Dedicated Memory Size | 288 GB HBM3E | 2.3 TB HBM3E | 288 GB HBM3E | 2.3 TB HBM3E |

| Memory Bandwidth | 8 TB/s | 8 TB/s per OAM | 8 TB/s | 8 TB/s per OAM |

| FP64 Performance | 72.1 TFLOPs | 577 TFLOPs | 78.6 TFLOPs | 628.8 TFLOPs |

| FP16 Matrix Performance | 2.3 PFLOPs | 18.5 PFLOPs | 2.5 PFLOPs | 20.1 PFLOPs |

| MXFP8/ OCP-FP8 Matrix Performance | 4.6 PFLOPs | 36.9 PFLOPs | 5 PFLOPs | 80.5 PFLOPs |

| MXFP6 Matrix Performance | 9.2 PFLOPs | 73.8 PFLOPs | 10.1 PFLOPs | 80.5PFLOPs |

| MXFP4 Matrix Performance | 9.2 PFLOPs | 73.8 PFLOPs | 10.1PFLOPs | 80.5 PFLOPs |

Ecosystem Momentum Ready to Deploy

The AMD Instinct MI350 Series GPUs will be broadly available through leading cloud service providers—including major hyperscalers and next-generation neo clouds—giving customers flexible options to scale AI in the cloud. At the same time, top OEMs like Dell, HPE, and Supermicro are integrating MI350 Series solutions into their platforms, delivering powerful on-prem and hybrid AI infrastructure. Follow this link to learn how leading OEMs, CSPs and more are supporting the adoption of AMD Instinct MI350 Series GPUs.

ROCm™ 7: The Open Software Engine for AI Acceleration

AI is evolving at record speed—and AMD’s vision with ROCm software is to unlock that innovation for everyone through an open, scalable, and developer-focused platform. Over the past year, ROCm has rapidly matured, delivering leadership inference performance, expanding training capabilities, and deepening its integration with the open-source community. ROCm now powers some of the largest AI platforms in the world, supporting major models like LLaMA and DeepSeek from day one, and offering an average 3.5x inference gain in the upcoming ROCm 7 release3. With frequent updates, advanced data types like FP4, and new algorithms like FAv3, ROCm is enabling next-gen AI performance while driving open-source frameworks like vLLM and SGLang forward

As AI adoption shifts from research to real-world enterprise deployment, ROCm is evolving with it too. AMD ROCm for Enterprise AI brings a full-stack MLOps platform to the forefront, enabling secure, scalable AI with turnkey tools for fine-tuning, compliance, deployment, and integration. With over 1.8 million Hugging Face models running out of the box, industry benchmarks now in play, ROCm is not just catching up—it’s leading the open AI revolution.

Developers are at the heart of everything we do. We're deeply committed to delivering an exceptional experience—making it easier than ever to build on ROCm software with better out-of-box tools, real-time CI dashboards, rich collateral, and an active developer community. From hackathons to high-performance kernel contests, momentum is building fast. And today, we're thrilled to launch the AMD Developer Cloud, giving developers instant, barrier-free access to ROCm and AMD GPUs to accelerate innovation.

Whether optimizing large language models or scaling out inferencing platforms, ROCm 7 gives developers the tools they need to move from experimentation to production fast. Be sure to check out our blog that goes into more detail on all the benefits found within ROCm 7.

What’s Next: Previewing the AMD Instinct MI400 Series and “Helios” AI Rack

The AMD commitment to innovation doesn’t stop with Instinct MI350 Series. The company previewed its next-generation AMD Instinct MI400 Series—a new level of performance coming in 2026.

The AMD Instinct MI400 Series will represent a dramatic generational leap in performance enabling full rack level solutions for large scale training and distributed inference. Key performance innovations include:

- Up to 432GB of HBM4 memory

- 19.6TB/s memory bandwidth

- 40 PF at FP4 and 20 PF at FP8 performance

- 300GB/s Scale-Out bandwidth

The “Helios” reference design for AI rack infrastructure – coming in 2026 – is engineered from the ground up to unify AMD’s leadership silicon—AMD EPYC “Venice” CPUs, AMD Instinct MI400 series GPUs and Pensando “Vulcano” AI NICs—and ROCm software into a fully integrated solution. Helios is designed as a unified system supporting a tightly coupled scale-up domain of up to 72 MI400 series GPUs with 260 terabytes per second of scale up bandwidth with support for Ultra Accelerator Link.

Read the blog here for more information about what’s ahead with the AMD “Helios” AI rack solution.

Laying the Foundation for the Future of AI

Built on the latest AMD CDNA 4 architecture and supported by the open and optimized ROCm software stack, AMD Instinct MI350X and MI355X GPUs enable customers to deploy powerful, future-ready AI infrastructure today.

ROCm is unlocking AI innovation with open-source speed, developer-first design, and breakthrough performance. From inference to training to full-stack deployment, it’s built to scale with the future of AI.

And with ROCm 7 and AMD Developer Cloud, we’re just getting started.

As we look ahead to the next era of AI with the upcoming MI400 Series and AMD “Helios” rack architecture, the AMD Instinct MI400 Series sets a new standard—empowering organizations to move faster, scale smarter, and unlock the full potential of generative AI and high-performance computing.

Related Blogs

Footnotes

- 4x Inference Gen/Gen Claim for ROCm: (MI300-80) Testing by AMD as of May 15, 2025, measuring the inference performance in tokens per second (TPS) of AMD ROCm 6.x software, vLLM 0.3.3 vs. AMD ROCm 7.0 preview version SW, vLLM 0.8.5 on a system with (8) AMD Instinct MI300X GPUs running Llama 3.1-70B (TP2), Qwen 72B (TP2), and Deepseek-R1 (FP16) models with batch sizes of 1-256 and sequence lengths of 128-204. Stated performance uplift is expressed as an average TPS over the (3) LLMs tested. Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- 3x Training Gen/Gen Claim for ROCm: (MI300-081) Testing by AMD as of May 15, 2025, measuring the training performance (TFLOPS) of ROCm 7.0 preview version software, Megatron-LM, on (8) AMD Instinct MI300X GPUs running Llama 2-70B (4K), Qwen1.5-14B, and Llama3.1-8B models, and a custom docker container vs. a similarly configured system with AMD ROCm 6.0 software. Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- 4x Gen/Gen AI Performance Claim: MI350-005: Based on calculations by AMD Performance Labs in May 2025, for the AMD Instinct™ MI355X and MI350X GPUs to determine the peak theoretical precision performance when comparing FP16, FP8, FP6 and FP4 datatypes with Matrix vs. AMD Instinct MI325X, MI300X, MI250X and MI100 GPUs. Server manufacturers may vary configurations, yielding different results.

- 35x gen/gen performance uplift: MI350-044 Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64 for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI350-044

- 520 Parameter Claim: MI350-012: Based on calculations by AMD Performance Labs as of April 17, 2025, on the published memory specifications of the AMD Instinct MI350X / MI355X GPU (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4.

- 4x Gen/Gen AI performance Claim: MI350-044: Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64 for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations.

- 4x Inference Gen/Gen Claim for ROCm: (MI300-80) Testing by AMD as of May 15, 2025, measuring the inference performance in tokens per second (TPS) of AMD ROCm 6.x software, vLLM 0.3.3 vs. AMD ROCm 7.0 preview version SW, vLLM 0.8.5 on a system with (8) AMD Instinct MI300X GPUs running Llama 3.1-70B (TP2), Qwen 72B (TP2), and Deepseek-R1 (FP16) models with batch sizes of 1-256 and sequence lengths of 128-204. Stated performance uplift is expressed as an average TPS over the (3) LLMs tested. Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- 3x Training Gen/Gen Claim for ROCm: (MI300-081) Testing by AMD as of May 15, 2025, measuring the training performance (TFLOPS) of ROCm 7.0 preview version software, Megatron-LM, on (8) AMD Instinct MI300X GPUs running Llama 2-70B (4K), Qwen1.5-14B, and Llama3.1-8B models, and a custom docker container vs. a similarly configured system with AMD ROCm 6.0 software. Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- 4x Gen/Gen AI Performance Claim: MI350-005: Based on calculations by AMD Performance Labs in May 2025, for the AMD Instinct™ MI355X and MI350X GPUs to determine the peak theoretical precision performance when comparing FP16, FP8, FP6 and FP4 datatypes with Matrix vs. AMD Instinct MI325X, MI300X, MI250X and MI100 GPUs. Server manufacturers may vary configurations, yielding different results.

- 35x gen/gen performance uplift: MI350-044 Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64 for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI350-044

- 520 Parameter Claim: MI350-012: Based on calculations by AMD Performance Labs as of April 17, 2025, on the published memory specifications of the AMD Instinct MI350X / MI355X GPU (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4.

- 4x Gen/Gen AI performance Claim: MI350-044: Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64 for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations.