AMD Instinct Platforms

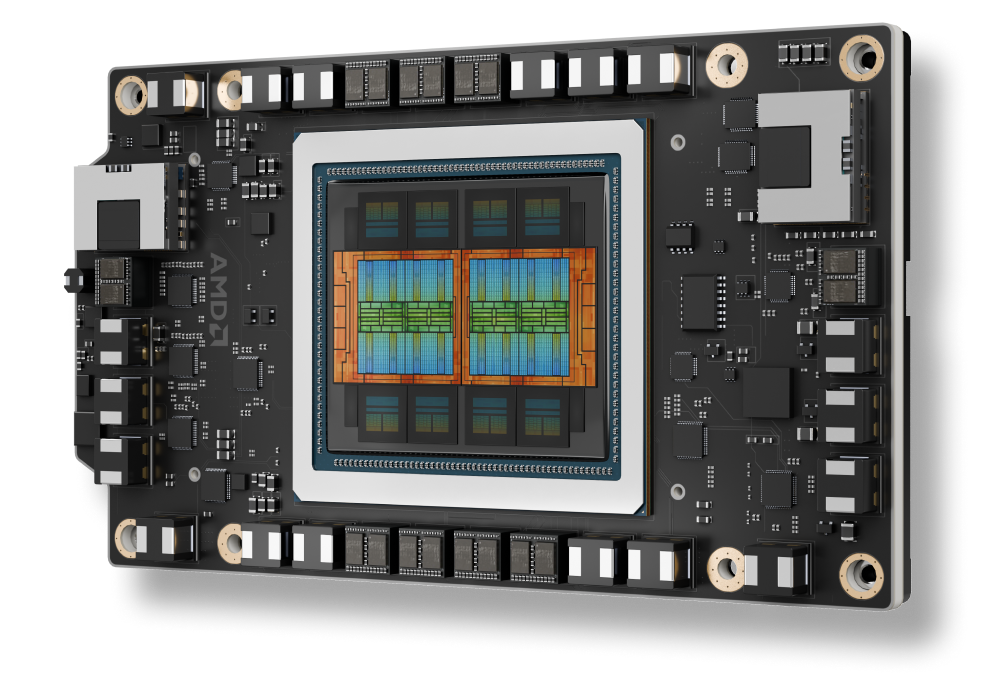

Includes eight AMD Instinct MI355X or MI350X GPUs on a single Universal Baseboard (UBB) for maximum compute performance

Leadership AI & HPC Performance

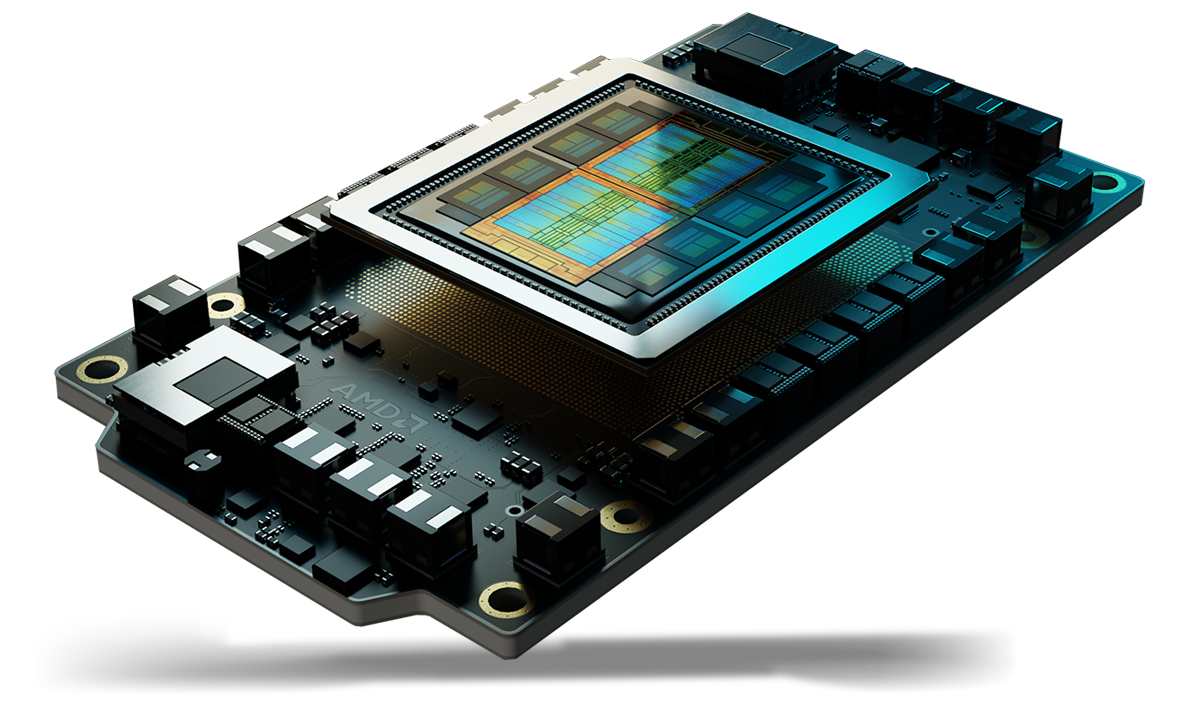

The AMD Instinct™ MI350 Series GPUs set a new standard for generative AI and high-performance computing (HPC) in the data center. Built on the 4th Gen AMD CDNA™ architecture, these GPUs deliver high efficiency and performance for training massive AI models, high-speed inference, and complex HPC workloads such as scientific simulation, data processing, and computational modeling.

AMD Instinct™ accelerators deliver leadership performance for the data center at any scale, from single-server solutions up to the world’s largest Exascale-class supercomputers.

They are well suited to even the most demanding AI and HPC workloads, offering exceptional compute performance, high memory density, high-bandwidth memory (HBM), and support for specialized data formats.

AMD Instinct GPUs are built on the AMD CDNA™ architecture, which offers Matrix Core Technologies and supports a broad range of datatypes: for AI, the highly efficient MXFP4 and MXFP6 formats along with INT8, OCP FP8, and FP16/BF16 (with sparsity support); for the most demanding HPC workloads, FP64.

AMD ROCm™ software includes a broad set of programming models, tools, compilers, libraries, and runtimes for AI models and HPC workloads targeting AMD Instinct GPUs.

Experience AMD Instinct GPUs in the Cloud

Meet your AI, HPC, and software development needs through programs offered by leading cloud service providers.

AMD collaborates with leading original equipment manufacturers (OEMs) and platform designers to offer a robust ecosystem of solutions powered by AMD Instinct GPUs.
