Under the Hood

AMD Instinct accelerators are built on AMD CDNA™ architecture, which offers Matrix Core Technologies and support for a broad range of precision capabilities—from the highly efficient INT8 and FP8 to the most demanding FP64 for HPC.

Meet the Series

Explore AMD Instinct MI250X, MI250, and MI210 accelerators.

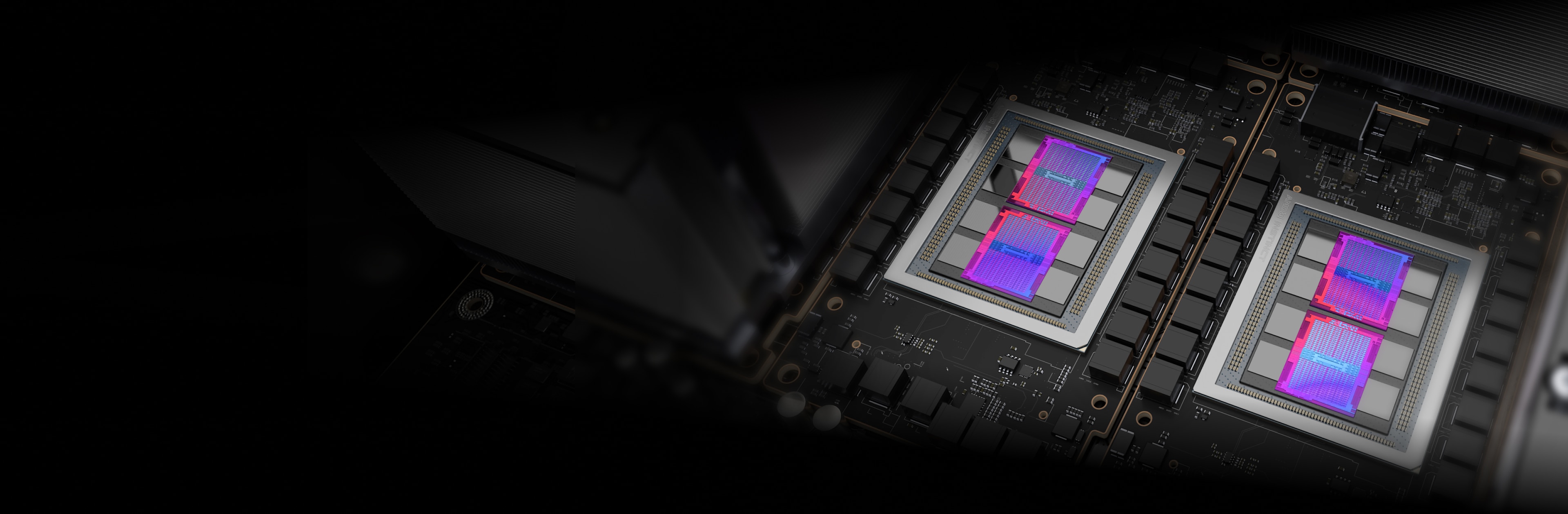

AMD Instinct MI250X Accelerators

AMD Instinct MI250X accelerators power some of the world’s top supercomputers.

- 220 GPU Compute Units
- 128 GB HBM2e Memory
- 3.2 TB/s Peak Memory Bandwidth
- 400 GB/s Peak Aggregate Infinity Fabric
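The 3.2 TB/s peak memory bandwidth figure can be reproduced from the bus width and data rate given in the footnotes (a 4,096-bit interface on each of 2 dies at 3.20 Gbps). The following is a minimal sketch of that arithmetic; the function and variable names are illustrative and do not come from any AMD tool.

```python
def peak_memory_bandwidth_tbps(data_rate_gbps, bus_width_bits, dies):
    """Peak bandwidth in TB/s: per-pin data rate times total bus width, in bytes."""
    total_bus_bits = bus_width_bits * dies
    gigabytes_per_second = data_rate_gbps * total_bus_bits / 8  # 8 bits per byte
    return gigabytes_per_second / 1000  # GB/s to TB/s, decimal units


# Inputs taken from the footnote: 3.20 Gbps, 4,096 bits per die, 2 dies.
mi250x_bandwidth = peak_memory_bandwidth_tbps(3.20, 4096, 2)
print(f"{mi250x_bandwidth:.4f} TB/s")
```

The headline 3.2 TB/s is this value rounded to two significant figures.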

Specs Comparisons

Up to 383 TFLOPS peak theoretical half-precision (FP16) performance with up to 1.6X more memory capacity and bandwidth than competitive GPUs for the most demanding AI workloads2,3,4

[Chart: MI250X vs. A100]

Delivers up to a 4X advantage vs. competitive GPUs, offering up to 47.9 TFLOPS FP64 and up to 95.7 TFLOPS FP64 Matrix peak theoretical performance.2

[Chart: MI250X vs. A100]

*The TF32 data format is not IEEE compliant and not included in this comparison.
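The comparison claims in this section can be checked against the peak theoretical figures quoted in the footnotes. The sketch below simply divides the MI250X numbers by the A100 numbers. The dictionary keys are illustrative names, and the A100 FP16 entry uses the 312 TFLOPS figure the footnote lists for its FP16 matrix path.

```python
# Peak theoretical figures as quoted in the footnotes (TFLOPS, GB, TB/s).
MI250X = {
    "fp64": 47.9,
    "fp64_matrix": 95.7,
    "fp16": 383.0,
    "memory_gb": 128.0,
    "bandwidth_tbps": 3.2768,
}
A100 = {
    "fp64": 9.7,
    "fp64_matrix": 19.5,
    "fp16": 312.0,
    "memory_gb": 80.0,
    "bandwidth_tbps": 2.039,
}


def spec_ratios(a, b):
    """Ratio a/b for every metric present in a."""
    return {name: a[name] / b[name] for name in a}


ratios = spec_ratios(MI250X, A100)
for name, ratio in ratios.items():
    print(f"{name:15s} {ratio:.2f}x")
```

These are ratios of theoretical peaks only; they say nothing about delivered application performance, which the benchmark sections address separately.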

AMD Instinct MI250 Accelerators

AMD Instinct MI250 accelerators drive exceptional HPC and AI performance for enterprise, research, and academia-related use cases.

- 208 GPU Compute Units
- 128 GB HBM2e Memory
- 3.2 TB/s Peak Memory Bandwidth
- 100 GB/s Peak Infinity Fabric™ Link Bandwidth

Performance Benchmarks

HPCG 3.0: The High Performance Conjugate Gradients (HPCG) Benchmark is a metric for ranking HPC systems. HPCG is intended as a complement to the High Performance LINPACK (HPL) benchmark, currently used to rank the TOP500 computing systems.5

| Metric | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|
| GFLOPS | 488.8 | 972.6 | 1927.7 |

HPL: HPL is a High-Performance Linpack benchmark implementation. The code solves a uniformly random system of linear equations and reports time and floating-point execution rate using a standard formula for operation count.6

| Metric | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|
| TFLOPS | 40.45 | 80.666 | 161.97 |
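One way to read the multi-GPU columns is as parallel scaling efficiency: the measured rate on N GPUs divided by N times the single-GPU rate. The sketch below applies that definition to the HPCG and HPL results reported here. The helper name is illustrative, and the definition of efficiency is a common convention rather than something this page specifies.

```python
def scaling_efficiency(rate_by_gpu_count):
    """Efficiency relative to ideal linear scaling from the 1-GPU result."""
    baseline = rate_by_gpu_count[1]
    return {n: rate / (n * baseline) for n, rate in rate_by_gpu_count.items()}


# Results reported on this page for 1x, 2x, and 4x MI250.
hpcg_gflops = {1: 488.8, 2: 972.6, 4: 1927.7}
hpl_tflops = {1: 40.45, 2: 80.666, 4: 161.97}

hpcg_efficiency = scaling_efficiency(hpcg_gflops)
hpl_efficiency = scaling_efficiency(hpl_tflops)

for n in sorted(hpl_tflops):
    print(f"{n}x MI250: HPCG {hpcg_efficiency[n]:.1%}, HPL {hpl_efficiency[n]:.1%}")
```

An efficiency slightly above 100% can appear when the multi-GPU run uses a larger problem size or when run-to-run variation exceeds the scaling loss.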

HPL_AI, or the High Performance LINPACK for Accelerator Introspection, is a benchmark that highlights the convergence of HPC and AI workloads by solving a system of linear equations using novel, mixed-precision algorithms.7

| Metric | Test Module | 4xMI250 |
|---|---|---|
| TFLOPS | Mixed FP16/32/64 | 930.44 |

PyFR is an open-source Python-based framework for solving advection-diffusion type problems on streaming architectures using the Flux Reconstruction approach of Huynh. The framework is designed to solve a range of governing systems on mixed unstructured grids containing various element types.8

| Metric | Test Module | 1xMI250 |
|---|---|---|
| Simulations / day | TGV | 41.73 |

OpenFOAM (for "Open-source Field Operation And Manipulation") is a C++ toolbox for the development of customized numerical solvers, and pre-/post-processing utilities for the solution of continuum mechanics problems, most prominently including computational fluid dynamics (CFD).9

| Metric | Test Module | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|---|
| Time (sec) | HPC Motorbike (bigger is not better) | 662.3 | 364.26 | 209.84 |
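Because the OpenFOAM metric is elapsed time, lower values are better and speedup is the single-GPU time divided by the N-GPU time. The sketch below computes speedup and the corresponding parallel efficiency from the times reported above; the function name is illustrative.

```python
def speedup_from_times(seconds_by_gpu_count):
    """Speedup over the 1-GPU run for a time-to-solution metric (lower is better)."""
    baseline = seconds_by_gpu_count[1]
    return {n: baseline / seconds for n, seconds in seconds_by_gpu_count.items()}


# HPC Motorbike times in seconds, as reported on this page.
motorbike_seconds = {1: 662.3, 2: 364.26, 4: 209.84}

motorbike_speedup = speedup_from_times(motorbike_seconds)
for n, s in sorted(motorbike_speedup.items()):
    print(f"{n}x MI250: {s:.2f}x speedup, {s / n:.0%} efficiency")
```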

Amber is a suite of biomolecular simulation programs. The term "Amber" refers to two things. First, it is a set of molecular mechanical force fields for the simulation of biomolecules. Second, it is a package of molecular simulation programs which includes source code and demos.10

| Application | Metric | Test Module | Bigger Is Better | 1xMI250 |
|---|---|---|---|---|
| AMBER | ns/day | Cellulose Production NPT 4fs | Yes | 227.2 |
| AMBER | ns/day | Cellulose Production NVE 4fs | Yes | 242.4 |
| AMBER | ns/day | FactorIX Production NPT 4fs | Yes | 803.1 |
| AMBER | ns/day | FactorIX Production NVE 4fs | Yes | 855.2 |
| AMBER | ns/day | JAC Production NPT 4fs | Yes | 1794 |
| AMBER | ns/day | JAC Production NVE 4fs | Yes | 1871 |
| AMBER | ns/day | STMV Production NPT 4fs | Yes | 80.65 |
| AMBER | ns/day | STMV Production NVE 4fs | Yes | 86.7 |

GROMACS is a molecular dynamics package mainly designed for simulations of proteins, lipids, and nucleic acids. It was originally developed in the Biophysical Chemistry department of the University of Groningen, and is now maintained by contributors in universities and research centers worldwide.11

| Application | Metric | Test Module | Bigger Is Better | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|---|---|---|
| GROMACS | ns/day | STMV | Yes | 34.2 | 61.812 | 89.26 |

LAMMPS is a classical molecular dynamics code with a focus on materials modeling. It's an acronym for Large-scale Atomic/Molecular Massively Parallel Simulator. LAMMPS has potentials for solid-state materials (metals, semiconductors) and soft matter (biomolecules, polymers) and coarse-grained or mesoscopic systems.12

| Application | Metric | Test Module | Bigger Is Better | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|---|---|---|
| LAMMPS | Atom-Time Steps/sec | LJ | Yes | 6E+08 | 1E+09 | 2E+09 |
| LAMMPS | Atom-Time Steps/sec | ReaxFF | Yes | 7E+06 | 1E+07 | 3E+07 |
| LAMMPS | Atom-Time Steps/sec | Tersoff | Yes | 5E+08 | 1E+09 | 2E+09 |

NAMD is a molecular dynamics package designed for simulating the movement of biomolecules over time. It is suited for large biomolecular systems, and it has been used to simulate systems with over 1 billion atoms, presenting stellar scalability on thousands of CPU cores and GPUs.13

| Application | Metric | Test Module | Bigger Is Better | 1xMI250 | 2xMI250 | 4xMI250 |
|---|---|---|---|---|---|---|
| NAMD 3.0 | ns/day | APOA1_NVE | Yes | 221.4 | 443.61 | 879.43 |
| NAMD 3.0 | ns/day | STMV_NVE | Yes | 19.87 | 39.545 | 77.132 |

AMD Instinct MI210 Accelerators

AMD Instinct MI210 accelerators power enterprise, research, and academic HPC and AI workloads for single-server solutions and more.

- 104 GPU Compute Units
- 64 GB HBM2e Memory
- 1.6 TB/s Peak Memory Bandwidth
- 100 GB/s Peak Infinity Fabric™ Link Bandwidth

Performance Benchmarks

HPL: HPL is a High-Performance Linpack benchmark implementation. The code solves a uniformly random system of linear equations and reports time and floating-point execution rate using a standard formula for operation count.14

| Metric | Bigger is Better | 1xMI210 | 2xMI210 | 4xMI210 | 8xMI210 |
|---|---|---|---|---|---|
| TFLOPS | Yes | 21.07 | 40.878 | 81.097 | 159.73 |
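The "standard formula for operation count" that HPL uses is, to the best of our understanding of the reference implementation, 2/3·N³ + 3/2·N² floating-point operations for an N×N system; the reported rate is that count divided by wall-clock time. The sketch below illustrates the calculation. The problem size and run time are hypothetical values chosen for illustration, not the settings behind the results in the table.

```python
def hpl_operation_count(n):
    """Operations credited for an n x n solve: 2/3 n^3 + 3/2 n^2 (assumed HPL convention)."""
    return (2.0 / 3.0) * n**3 + 1.5 * n**2


def hpl_tflops(n, seconds):
    """Floating-point execution rate in TFLOPS for a solve of size n."""
    return hpl_operation_count(n) / seconds / 1e12


# Hypothetical run: these two values are made up for illustration only.
example_n = 100_000
example_seconds = 20.0
print(f"{hpl_tflops(example_n, example_seconds):.2f} TFLOPS")
```

Because the count depends only on N, a faster solve of the same system always reports a higher rate, regardless of how many operations the hardware actually executed.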

HPL-AI: HPL_AI, or the High Performance LINPACK for Accelerator Introspection, is a benchmark that highlights the convergence of HPC and AI workloads by solving a system of linear equations using novel, mixed-precision algorithms.15

| Metric | Test Module | Bigger is Better | 4xMI210 | 8xMI210 |
|---|---|---|---|---|
| TFLOPS | Mixed FP16/32/64 | Yes | 444.77 | 976.18 |
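To get a rough sense of what the mixed-precision approach buys, the HPL-AI rates can be divided by the FP64 HPL rates reported for the same GPU counts. This is only an indicative sketch: the two benchmarks are separate codes run on separate dates, so the ratio is not a controlled measurement. The variable names are illustrative.

```python
# Rates reported on this page for 4x and 8x MI210 (TFLOPS).
hpl_fp64_tflops = {4: 81.097, 8: 159.73}
hpl_ai_mixed_tflops = {4: 444.77, 8: 976.18}

# Ratio of mixed-precision HPL-AI rate to FP64 HPL rate at each GPU count.
mixed_precision_gain = {
    n: hpl_ai_mixed_tflops[n] / hpl_fp64_tflops[n] for n in hpl_fp64_tflops
}

for n, gain in sorted(mixed_precision_gain.items()):
    print(f"{n}x MI210: HPL-AI rate is {gain:.1f}x the HPL rate")
```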

LAMMPS: LAMMPS is a classical molecular dynamics code with a focus on materials modeling. It's an acronym for Large-scale Atomic/Molecular Massively Parallel Simulator. LAMMPS has potentials for solid-state materials (metals, semiconductors) and soft matter (biomolecules, polymers) and coarse-grained or mesoscopic systems.16

| Metric | Test Module | Bigger is Better | 4xMI210 | 8xMI210 |
|---|---|---|---|---|
| Atom-Time Steps/sec | ReaxFF | Yes | 1E+07 | 3E+07 |

AMD ROCm™ Software

AMD ROCm™ software includes a broad set of programming models, tools, compilers, libraries, and runtimes for optimizing AI models and HPC workloads targeting AMD Instinct accelerators.


Footnotes

- Top 500 list, June 2023

- World’s fastest data center GPU is the AMD Instinct™ MI250X. Calculations conducted by AMD Performance Labs as of Sep 15, 2021, for the AMD Instinct™ MI250X (128GB HBM2e OAM module) accelerator at 1,700 MHz peak boost engine clock resulted in 95.7 TFLOPS peak theoretical double precision (FP64 Matrix), 47.9 TFLOPS peak theoretical double precision (FP64), 95.7 TFLOPS peak theoretical single precision matrix (FP32 Matrix), 47.9 TFLOPS peak theoretical single precision (FP32), 383.0 TFLOPS peak theoretical half precision (FP16), and 383.0 TFLOPS peak theoretical Bfloat16 format precision (BF16) floating-point performance. Calculations conducted by AMD Performance Labs as of Sep 18, 2020 for the AMD Instinct™ MI100 (32GB HBM2 PCIe® card) accelerator at 1,502 MHz peak boost engine clock resulted in 11.54 TFLOPS peak theoretical double precision (FP64), 46.1 TFLOPS peak theoretical single precision matrix (FP32), 23.1 TFLOPS peak theoretical single precision (FP32), 184.6 TFLOPS peak theoretical half precision (FP16) floating-point performance. Published results on the NVidia Ampere A100 (80GB) GPU accelerator, boost engine clock of 1410 MHz, resulted in 19.5 TFLOPS peak double precision tensor cores (FP64 Tensor Core), 9.7 TFLOPS peak double precision (FP64). 19.5 TFLOPS peak single precision (FP32), 78 TFLOPS peak half precision (FP16), 312 TFLOPS peak half precision (FP16 Tensor Flow), 39 TFLOPS peak Bfloat 16 (BF16), 312 TFLOPS peak Bfloat16 format precision (BF16 Tensor Flow), theoretical floating-point performance. The TF32 data format is not IEEE compliant and not included in this comparison. https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/nvidia-ampere-architecture-whitepaper.pdf, page 15, Table 1. MI200-01

- Calculations conducted by AMD Performance Labs as of Sep 21, 2021, for the AMD Instinct™ MI250X and MI250 (128GB HBM2e) OAM accelerators designed with AMD CDNA™ 2 6nm FinFet process technology at 1,600 MHz peak memory clock resulted in 3.2768 TB/s peak theoretical memory bandwidth performance. MI250/MI250X memory bus interface is 4,096 bits times 2 die and memory data rate is 3.20 Gbps for total memory bandwidth of 3.2768 TB/s ((3.20 Gbps*(4,096 bits*2))/8). The highest published results on the NVidia Ampere A100 (80GB) SXM GPU accelerator resulted in 2.039 TB/s GPU memory bandwidth performance. https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/a100/pdf/nvidia-a100-datasheet-us-nvidia-1758950-r4-web.pdf MI200-07

- Calculations conducted by AMD Performance Labs as of Sep 21, 2021, for the AMD Instinct™ MI250X and MI250 accelerators (OAM) designed with AMD CDNA™ 2 6nm FinFet process technology at 1,600 MHz peak memory clock resulted in 128GB HBM2e memory capacity. Published specifications on the NVidia Ampere A100 (80GB) SXM and A100 accelerators (PCIe®) showed 80GB memory capacity. Results found at: https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/a100/pdf/nvidia-a100-datasheet-us-nvidia-1758950-r4-web.pdf MI200-18

- Testing Conducted by AMD performance lab 11.2.2022 using HPCG 3.0 comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x, 2x, and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, SBIOS M12, Ubuntu 20.04.4, Host ROCm 5.2.0 vs. 2P AMD EPYC™ 7742 server with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM 400W GPUs, SBIOS 0.34, Ubuntu 20.04.4, CUDA 11.6. HPCG 3.0 Container: nvcr.io/nvidia/hpc-benchmarks:21.4-hpcg at https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-70A.

- Testing Conducted by AMD performance lab 11.14.2022 using HPL comparing two systems. 2P EPYC™ 7763 powered server, SMT disabled, with 1x, 2x, and 4x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, host ROCm 5.2.0 rocHPL 6.0.0. AMD HPL container is not yet available on Infinity Hub. vs. 2P AMD EPYC™ 7742 server, SMT enabled, with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM 400W GPUs, CUDA 11.6 and Driver Version 510.47.03. HPL Container (nvcr.io/nvidia/hpc-benchmarks:21.4-hpl) obtained from https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-69A.

- HPL-AI comparison based on AMD internal testing as of 11/2/2022 measuring the HPL-AI benchmark performance (TFLOPS) using a server with 2x EPYC 7763 with 4x MI250 128GB HBM2e running host ROCm 5.2.0, HPL-AI-AMD v1.0.0 versus a server with 2x EPYC 7742 with 4x A100 SXM 80GB HBM2e running CUDA 11.6, HPL-AI-NVIDIA v2.0.0, container nvcr.io/nvidia/hpc-benchmarks:21.4-hpl. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-81.

- Testing Conducted by AMD performance lab on 11/25/2022 using PyFR TGV and NACA 0021 comparing two systems: 2P EPYC™ 7763 powered server, SMT disabled, with 1x AMD Instinct™ MI250 (128 GB HBM2e) 560W GPUs, SBIOS M12, Ubuntu 20.04.4, Host ROCm 5.2.0 vs. 2P AMD EPYC™ 7742 server, SMT enabled, with 1x Nvidia Ampere A100 80GB SXM 400W GPUs, SBIOS 0.34, Ubuntu 20.04.4, CUDA 11.6. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-82.

- Testing Conducted by AMD performance lab on 04/14/2023 using OpenFOAM v2206 on a 2P EPYC 7763 CPU production server with 1x, 2x, and 4x AMD Instinct™ MI250 GPUs (128GB, 560W) with AMD Infinity Fabric™ technology enabled, ROCm™5.3.3, Ubuntu® 20.04.4 vs a 2P EPYC 7742 CPU production server with 1x, 2x, and 4x Nvidia A100 80GB SXM GPUs (400W) with NVLink technology enabled, CUDA® 11.8, Ubuntu 20.04.4. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations.

- Testing Conducted by AMD performance lab 8.26.22 using AMBER: Cellulose_production_NPT_4fs, Cellulose_production_NVE_4fs, FactorIX_production_NPT_4fs, FactorIX_production_NVE_4fs, STMV_production_NPT_4fs, STMV_production_NVE_4fs, JAC_production_NPT_4fs and JAC_production_NVE_4fs. Comparing two systems: 2P EPYC™ 7763 powered server with 1x AMD Instinct™ MI250 (128GB HBM2e) 560W GPU, ROCm 5.2.0, Amber container 22.amd_100 vs. 2P EPYC™ 7742 powered server with 1x Nvidia A100 SXM (80GB HBM2e) 400W GPU, MPS enabled (2 instances), CUDA 11.6. MI200-73.

- Testing Conducted by AMD performance lab 10.18.22 using Gromacs STMV comparing two systems: 2P EPYC™ 7763 powered server with 4x AMD Instinct™ MI250 (128GB HBM2e) 560W GPU with Infinity Fabric™ technology, ROCm™ 5.2.0, Gromacs container 2022.3.amd1_174 vs. Nvidia public claims https://developer.nvidia.com/hpc-application-performance. (Gromacs 2022.2). Dual EPYC 7742 with 4x Nvidia Ampere A100 SXM 80GB GPUs. Server manufacturers may vary configurations, yielding different results. Performance may vary based on factors including use of latest drivers and optimizations. MI200-74.

- Testing Conducted by AMD performance lab 109.0322.22 using LAMMPS: EAM, LJ, ReaxFF and Tersoff comparing two systems: 2P EPYC™ 7763 powered server with 4x AMD Instinct™ MI250 (128GB HBM2e) 560W GPU, ROCm 5.2.0, LAMMPS container 2021.5.14_121amdih/lammps:2022.5.04_130 vs. Nvidia public claims http://web.archive.org/web/20220718053400/https://developer.nvidia.com/hpc-application-performance. (stable 23Jun2022 update 1). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-77.

- Testing Conducted by AMD performance lab 9.13.22 using NAMD: STMV_NVE, APOA1_NVE comparing two systems: 2P EPYC™ 7763 powered server with 1x, 2x and 4x AMD Instinct™ MI250 (128GB HBM2e) 560W GPU with AMD Infinity Fabric™ technology, ROCm 5.2.0, NAMD container namd3:3.0a9 vs. Nvidia public claims of performance of 2P EPYC 7742 Server with 1x, 2x, and 4x Nvidia Ampere A100 80GB SXM GPU https://developer.nvidia.com/hpc-application-performance. (v2.15a AVX-512). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-76.

- Testing Conducted on 11.14.2022 by AMD performance lab on a 2P socket AMD EPYC™ 7763 CPU Supermicro 4124 with 8x AMD Instinct™ MI210 GPU (PCIe® 64GB, 300W), AMD Infinity Fabric™ technology enabled. Ubuntu 18.04.6 LTS, Host ROCm 5.2.0, rocHPL 6.0.0. Results calculated from medians of five runs vs. Testing Conducted by AMD performance lab on 2P socket AMD EPYC™ 7763 Supermicro 4124 with 8x NVIDIA A100 GPU (PCIe 80GB 300W), Ubuntu 18.04.6 LTS, CUDA 11.6, HPL Nvidia container image 21.4-HPL. All results measured on systems configured with 8 GPUs; 2 pairs of 4xMI210 GPUs connected by a 4-way Infinity Fabric™ link bridge; 4 pairs of 2xA100 80GB PCIe GPUs connected by 2-way NVLink bridges. Information on HPL: https://www.netlib.org/benchmark/hpl/. AMD HPL Container Details: HPL Container not yet available on Infinity Hub. Nvidia HPL Container Detail: https://ngc.nvidia.com/catalog/containers/nvidia:hpc-benchmarks. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-49A.

- HPL-AI comparison based on AMD internal testing as of 11/2/2022 measuring the HPL-AI benchmark performance (TFLOPS) using a server with 2x EPYC 7763 with 8x MI210 64GB HBM2e with Infinity Fabric technology running host ROCm 5.2.0, HPL-AI-AMD v1.0.0; AMD HPL-AI container not yet available on Infinity Hub versus a server with 2x EPYC 7763 with 8x A100 PCIe 80GB HBM2e running CUDA 11.6, HPL-AI-NVIDIA v2.0.0, container nvcr.io/nvidia/hpc-benchmarks:21.4-hpl. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-83.

- Testing Conducted on 10.03.2022 by AMD performance lab on a 2P socket AMD EPYC™ 7763 CPU Supermicro 4124 with 4x and 8x AMD Instinct™ MI210 GPUs (PCIe® 64GB, 300W) with AMD Infinity Fabric™ technology enabled. SBIOS 2.2, Ubuntu® 18.04.6 LTS, host ROCm™ 5.2.0. LAMMPS container amdih-2022.5.04_130 (ROCm 5.1) versus Nvidia public claims for 4x and 8x A100 PCIe 80GB GPUs http://web.archive.org/web/20220718053400/https://developer.nvidia.com/hpc-application-performance. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. MI200-47A.
