Performance Briefs and Tech Docs

Find the technical resources you need to build your infrastructure with AMD EPYC 9005 processors

Advancing AI, Cloud, and Enterprise Computing

AMD EPYC™ 9005 processors provide end-to-end AI performance.

AMD EPYC™ 9005 processors can match the integer performance of legacy hardware with up to 86% fewer racks,² dramatically reducing physical footprint, power consumption, and the number of software licenses needed, and freeing up space for new or expanded AI workloads.

Many AI workloads, including language models with 13 billion or fewer parameters, image and fraud analysis, and recommendation systems, run efficiently on CPU-only servers that feature AMD EPYC™ 9005 CPUs. Servers running two 5th Gen AMD EPYC 9965 CPUs offer up to 2x the inference throughput of previous-generation offerings.³

The AMD EPYC™ 9005 family includes options optimized to serve as host CPUs for GPU-enabled systems, helping increase performance on select AI workloads and improve the ROI of each GPU server. For example, a server powered by the high-frequency AMD EPYC 9575F processor with 8 GPUs delivers up to 20% greater system performance running Llama3.1-70B than a server using Intel Xeon 8592+ processors as the host CPU with the same 8 GPUs.⁴

AMD EPYC 9005 processors deliver exceptional performance while enabling leadership energy efficiency and total cost of ownership (TCO) value in support of key business imperatives.

AMD EPYC 9005 CPU-powered servers leverage the new “Zen 5” cores to deliver compelling mainstream performance, including 2.7x the integer performance of leading competitive offerings.⁵

AMD EPYC™ 9005 processors provide density and performance for cloud workloads. With 192 cores, the top-of-stack AMD EPYC 9965 processor supports 33% more virtual CPUs (vCPUs) than the leading available Intel® Xeon 6E “Sierra Forest” 144-core processor (assuming 1 core per vCPU).
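The 33% figure follows directly from the two core counts, under the one-core-per-vCPU assumption stated above (SMT threads are not counted here). A worked check:

```latex
\frac{192 - 144}{144} = \frac{48}{144} = \frac{1}{3} \approx 0.333
\quad\Rightarrow\quad \text{about } 33\% \text{ more vCPUs per processor}
```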

Data centers are demanding more energy than ever. AMD EPYC™ 9005 processors continue to provide the energy efficiency and TCO benefits found in previous AMD EPYC generations.

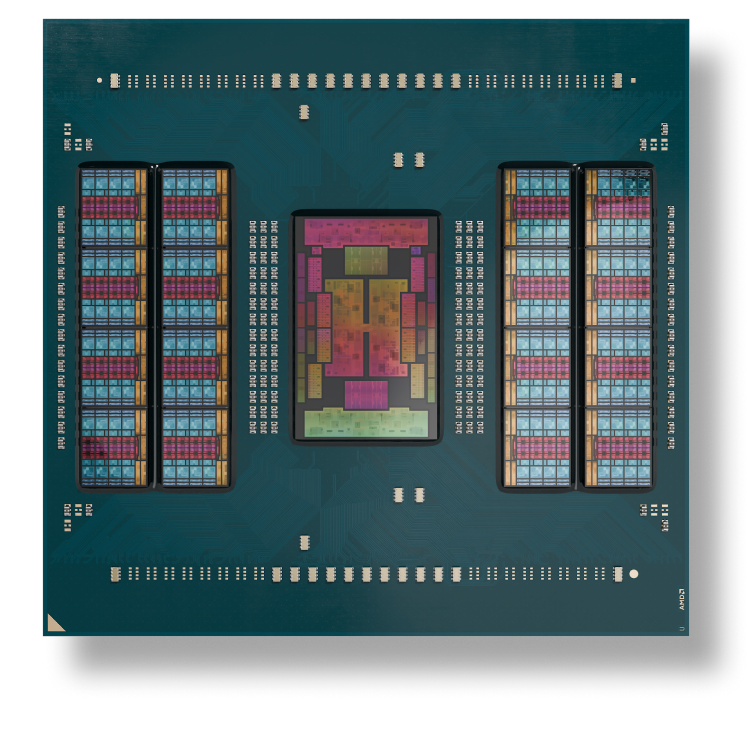

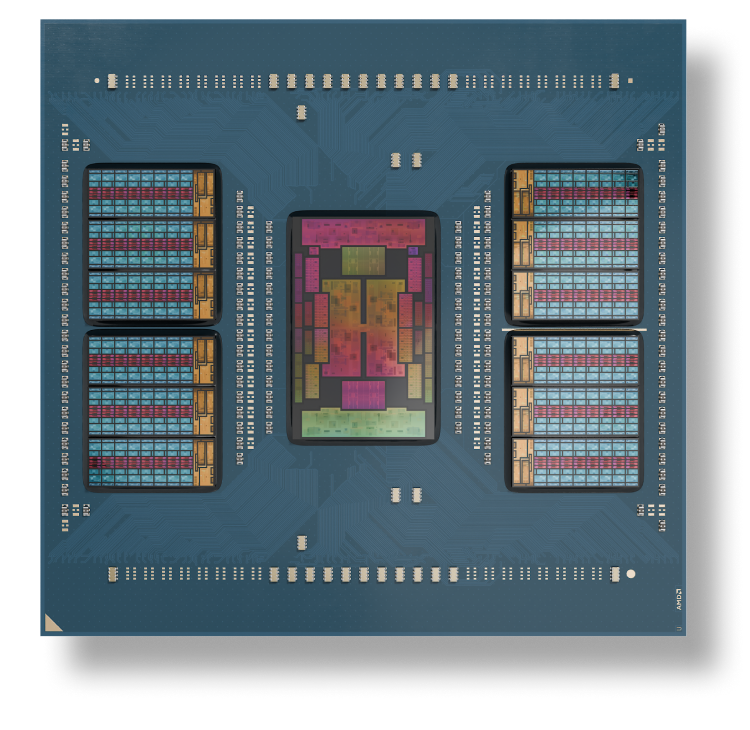

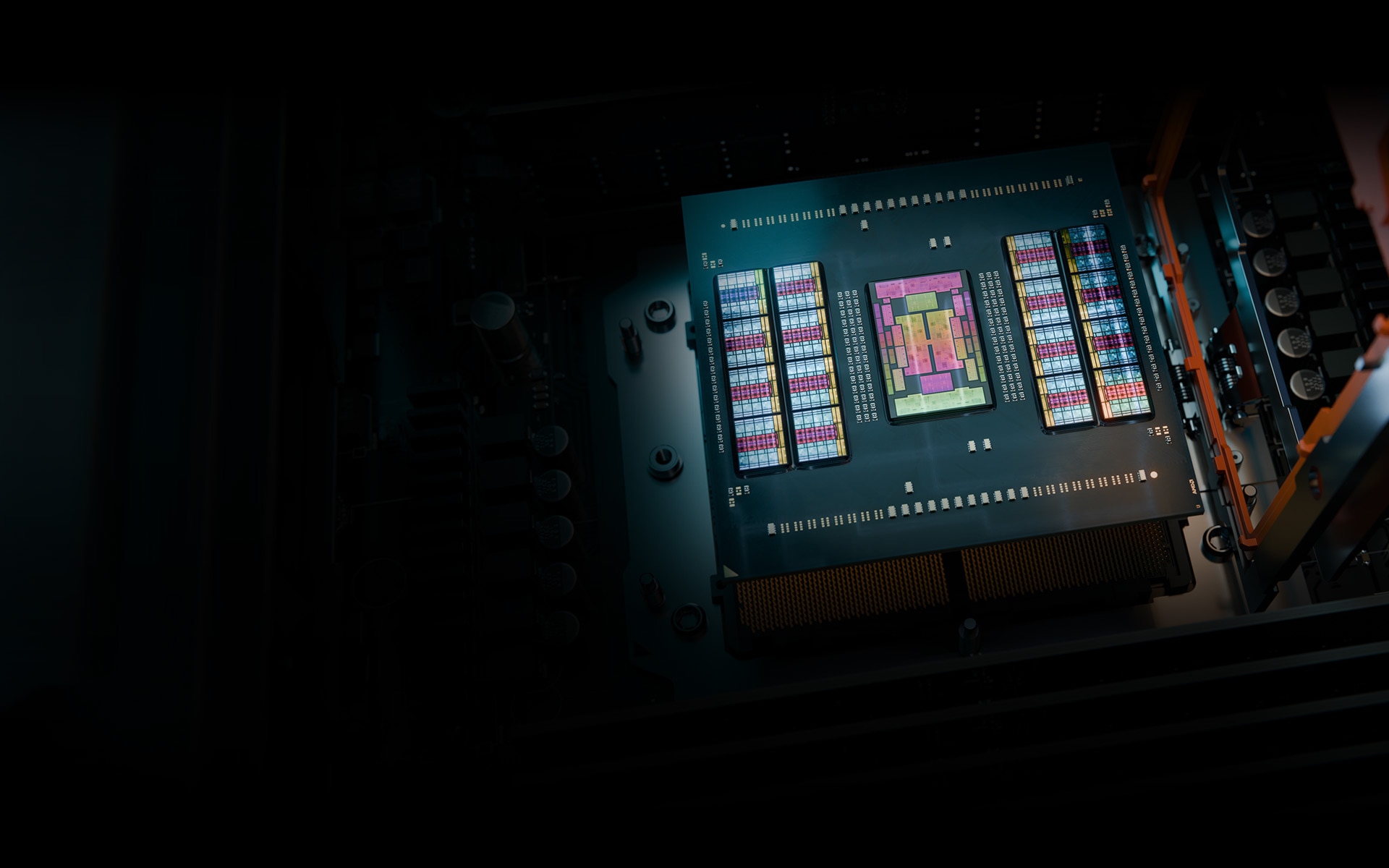

AMD EPYC 9005 Series processors include up to 192 “Zen 5” or “Zen 5c” cores with exceptional memory bandwidth and capacity. The innovative AMD chiplet architecture enables high-performance, energy-efficient solutions optimized for a range of computing needs.