Accelerate Fine-Tuned LLMs Locally on AMD Ryzen AI NPU & IGPU

Apr 25, 2025

Overview

AMD Ryzen™ AI architecture seamlessly integrates dedicated NPUs, AMD Radeon™ Graphics (iGPU), and AMD Ryzen processing cores (CPU) to enable advanced AI capabilities on a heterogeneous processor. This hybrid architecture utilizes the best performance of NPU for time-to-first-token (TTFT) and iGPU for token generation of LLM inference to deliver exceptional performance.

In this blog, we provide a case study for custom LLM deployment on an AMD NPU + iGPU Ryzen AI processor. This includes finetuning a base LLM, Llama 3.2 1B, on a specific dataset, Daily Drilling Report (DDR) dataset utilizing Parameter Efficient Fine Tuning (PEFT), then quantizing the finetuned LLM using the AMD Quark optimization toolkit and subsequently deploying it locally on an AMD Ryzen AI PC. This blog demonstrates how AMD Ryzen AI 300 series processors can be leveraged for efficient and optimized local LLM inference of customized AI models for real-world applications.

Parameter Efficient Fine-tuning (PEFT)

Pretrained LLMs are trained on large-scale datasets and are used for general tasks that fit their training data distribution (i.e. generic question-answering), whereas finetuned models are pretrained models that are further trained on domain specific data to obtain task-specific improvement. While pretrained LLMs models offer tremendous capabilities, finetuning is important to support niche business applications. An example of a finetuned model is Med-PaLM 2 that finetunes PaLM to perform Q/A specifically for medical questions. However, full finetuning is resource intensive, requiring training on all model parameters. Parameter-efficient-finetuning (PEFT) approaches offer a computationally efficient solution where only a smaller set of (extra) model parameters are trained.

Low-Rank Adaptation (LoRA)

We finetune Llama 3.2 1B using a parameter-efficient technique known as Low-Rank Adaptation (LoRA). The LoRA approach enables finetuning of LLMs without updating all model parameter weights which reduces memory and compute requirements compared to full-finetuning. Specifically, the model’s original weights remain frozen during finetuning and a smaller set of new trainable parameters, low rank matrices (adapters), are updated. After LoRA finetuning, the adapters are merged with the original model’s weights. In this manner, the learned adapters represent task-specific patterns, while the original model retains its general knowledge.

Daily Drilling Reports Dataset

We finetune Llama 3.2 1B for an application within the oil industry, using the Daily Drilling Reports (DDR) dataset from Volve field and released by Equinor. The DDRs contain logs of drilling operations, including daily activities at oil drilling sites. The original DDR dataset is in the WITSML format, an industry standard for oil rigging. For applications to LLMs, we utilize a transformed version of the DDR dataset that is converted into an Alpaca format for text summarization. These text summaries contain domains-specific information, summarizing key information important to the oil rigging industry. We finetune Llama 3.2 1B on these DDR summaries to enable the model to learn the task specific information important to summarize, as well as capture the stylistic language. Here is an example from the Alpaca formatted DDR dataset:

Instruction:

Given the following activities for well NO 15/9-19 A on 1997-07-25, please prepare the 24-hour summary for the Daily Drilling Report (DDR).

Only return the 24-hour summary, and nothing else.

Input:

00:30 - 06:00: MU baker windowmaster whipstock milling assembly associated BHA. Continue TIH with DP.

06:00 - 10:00: Continued TIH with windowmaster whipstock milling assembly. Tagged 9 5/8" bridge-plug at 2211 m dpm.

10:00 - 12:00: Oriented whipstock using MWD. Set anchor on whipstock.

12:00 - 18:00: Cut window in 9 5/8" casing using whipstock. Top of window 2202 m MD bottom of window 2207 m MD. Pumped 5 m3 hi-vis pill while milling at 2205 m.

18:00 - 21:00: Drilled/milled new formation from 2207 2213 m. Pumped 5 m3 hi-vis pill while drilling at 2208 m.

21:00 - 23:00: Reamed window. After approximately 20 times, still unable to go down through window without rotating based on using maximum of 12 MT weight. Reamed 5 additional times now able to slack-off pick-up through window without having to rotate string.

23:00 - 00:00: Pumped 10 m3 hi-vis pill circulated hole clean at 2000 LPM.

Output:

Finished RIH with baker windowmaster whipstock. Oriented set same. Cut window from 2202-2207 m. Drilled 6 m of formation. POOH for drilling BHA.

LoRA Finetuning of Llama 3.2 1B on MI300X

We finetune Llama 3.2 1B using LoRA on the AMD MI300X accelerators using Hugging Face. ROCm blogs are an excellent resource to deep dive into finetuning LLMs on AMD Instinct MI300X GPUs (Fine-tuning and inference using multiple accelerators — ROCm Documentation).

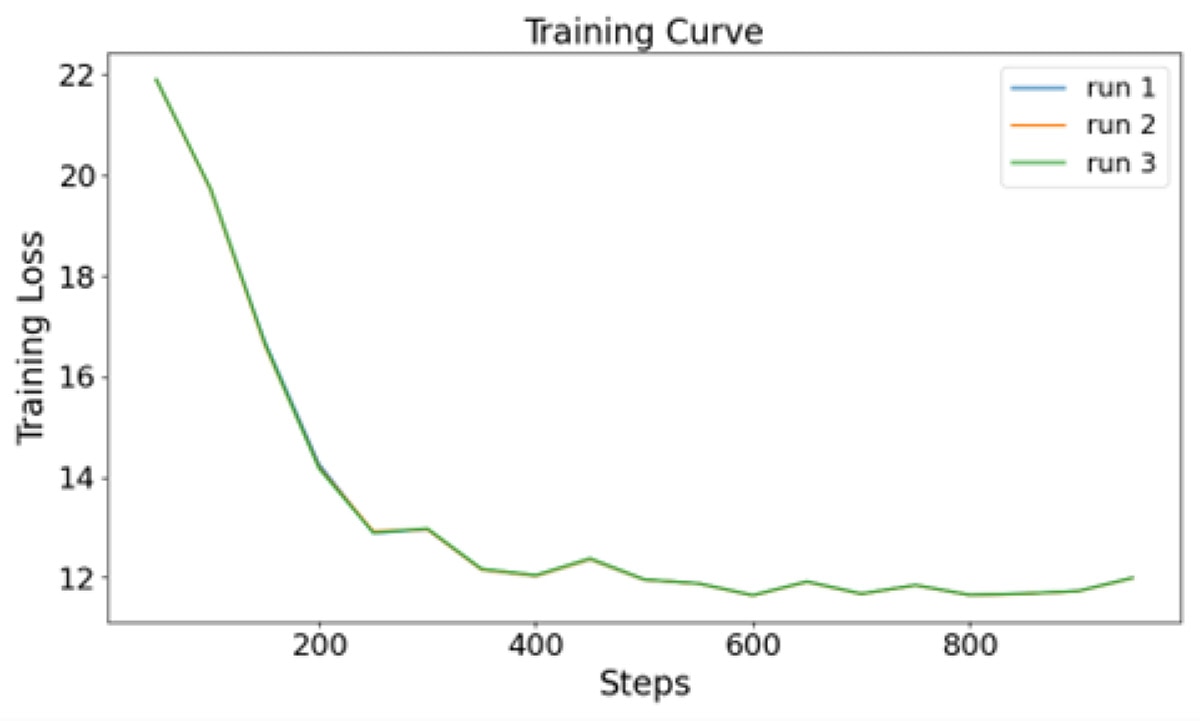

Since LoRA finetuning only learns a small set of trainable parameters, finetuning with LoRA is much more lightweight compared to full-finetuning (see Table 2). In addition to optimizing hyperparameters such as learning rate, we optimize LoRA-specific hyperparameters such as rank, alpha, and target modules. Rank controls the size of the adapters, and alpha affects the importance of the adapter weights in comparison to the base model’s weights. The target modules dictate the model layers on which adapters will be merged. See here for more details on LoRA. Below, Table 1 summarizes our hyperparameters and Figure 1 shows the training loss curves over three finetuning runs. The full training script can be found in our Github Repo here. We use BERT Score to assess the quality of finetuning. The results are shown in the evaluation section below.

Table 1. LoRA finetuning hyperparameters

Hyperparameters |

Value |

Epochs |

5 |

Learning Rate |

2e-5 |

Gradient Accumulation |

8 |

Batch Size |

1 |

Target Modules |

q_proj, v_proj |

Weight Decay |

0.1 |

LoRA Dropout |

0.5 |

Rank |

16 |

Alpha |

32 |

Table 2. Number of trainable parameters and operations comparison

|

Num. of Trainable Parameters |

Num. of Training Operations |

LoRA Finetuning |

1,703,936 |

5,352,365,678,919,680 |

Deploying LoRA-Finetuned Llama 3.2 1B On Ryzen AI

The following steps show how to deploy the LoRA-finetuned model on Ryzen AI. We’ve provided a GitHub repo with details here.

Merge LoRA Adapters

LoRA finetuning does not change the original model weights, and instead adds smaller, low rank matrices, i.e. adapters. For ease of downstream inference, the adapters are first merged into the original model’s weights, resulting in a single set of weights in the finetuned model. The following from the PEFT API in Hugging Face can be used to merge adapters to the original mode as shown in Figure 2:

from peft import PeftModel

from transformers import AutoModelForCausalLM, AutoTokenizer

adapter_name ="adapter-model-name"

model_name = " meta-llama/Llama-3.2-1B"

tokenizer = AutoTokenizer.from_pretrained(model_name)

tokenizer.pad_token = tokenizer.eos_token

model = AutoModelForCausalLM.from_pretrained(model_name).to("cuda")

merged_model = PeftModel.from_pretrained(model, adapter_name).to("cuda")

merged_model = merged_model.merge_and_unload()

merged_model.save_pretrained(<merged-model-dir-name>)

tokenizer.save_pretrained(<merged-model-dir-name>)

Figure 2: PEFT API calls to merge LoRA adapters to base, pretrained model.

Model Quantization & ONNX Export

AMD Ryzen AI ecosystem is designed to provide efficient inference by using low precision data types for faster computations. We quantize our LoRA-finetuned model using a common quantization algorithm known as AWQ (Activation-aware Weight Quantization). We use the AMD quantization toolkit, Quark, to perform quantization. To install Quark, and quantize the model, the steps are documented here: Preparing OGA Models — Ryzen AI Software 1.4 documentation

The exact command to quantize the model is shown below in Figure 3.

python quantize_quark.py

--model_dir <merged-model-dir-name> \

--output_dir <quantized-model-output-dir> \

--quant_scheme w_uint4_per_group_asym \

--num_calib_data 128 \

--quant_algo awq \

--dataset pileval_for_awq_benchmark \

--model_export hf_format \

--data_type float16 \

--exclude_layers \

Figure 3: Command in Quark to perform AWQ quantization.

Then, we export the quantized model to ONNX using the steps described here: Preparing OGA Models — Ryzen AI Software 1.4 documentation. We have also uploaded the finetuned, merged PyTorch model and the quantized exported ONNX model to Hugging Face for convenience: https://huggingface.co/collections/amd/ryzenai-finetuned-local-llms-67fd7585d2c9d1369d24fe11

Inference on AMD Ryzen AI NPU + iGPU

We run inference on 50 samples from the DDR dataset’s test set. This repository, https://github.com/AMD-AI/RyzenAI-SFT, provides scripts to run evaluation on the ONNX models using onnxruntime-genai software stack. We perform automated evaluations by measuring BERTscore, which measures semantic similarity, as well as qualitatively evaluate the model’s responses. The following steps can be used to run inference using the ONNX exported model on an AMD Ryzen AI device:

conda activate ryzen-ai-1.4.0

git clone https://github.com/AMD-AI/RyzenAI-SFT

cd repo

pip install -r requirements.txt

python inference_oga.py \

–-model_dir <onnx_model_dir> \

--inference_filename <output_pred_filename>

Figure 4: Command on how to run inference using AMD Ryzen AI.

Accuracy Evaluation

We evaluated our LoRA-finetuned model using a combination of BERTScore F1 as well as qualitative evaluations to understand how well our finetuned model was able to capture the stylistic summaries and domain specific knowledge from the DDR dataset. In Table 3, we report average results across three finetuning runs using the hyperparameters (see Table 1) discussed in the finetuning section. These runs highlight any variability in generation quality due to random initialization. From the three runs, we selected the best performing model and perform further evaluations in Table 4, including analyzing quantization and ONNX conversion needed for deploying on Ryzen AI devices.

Overall, we’d like to highlight the following in relation to Table 4:

- Our finetuned model can better capture the stylistic (terse, fragmented) summaries that are present in the DDR dataset, while the pretrained model is not able to produce such summaries. Instead, the pretrained model defaults to providing hourly summaries that are present in the input. The example response, below, shows these differences. Such differences between our LoRA-finetuned model and the pretrained model show the benefit of finetuning to improve model performance in domain specific scenarios.

- Conversion to ONNX via onnxruntime-genai (OGA) introduces some performance variation (Table 4, row 1 vs row 2, row 3 vs row 4). Overall, after both quantization and ONNX conversion, our finetuned model stylistically performs better than the pretrained model (see example response below), and has a higher BERTScore (Table 4, row 2 vs row 6).

- While our finetuned model has learned to produce terser, fragmented summaries, mimicking the styles within the DDR dataset, there is room for improvement. Most commonly, we observe repetitive texts in generated summaries. Future work would involve reducing these repetitions, training on a larger dataset and performing more exhaustive analyses with domain experts.

- Using a combination of automated and qualitative evaluations, we show the challenges of evaluating domain specific tasks and automated metrics alone may not capture the full abilities of LLMs. Especially in domain-specific industries, there is a requirement of domain expertise to truly understand factuality and usability. Improving LLM evaluation is an ongoing work within the community, and one that is very important for usable, deployed LLMs in industry.

Table 3. Automated evaluations of our finetuned model, averaged on 3 runs.

|

BERTScore mean +/- std |

Llama 3.2 1B Pretrained (.safetensors) |

79.05 +/- 0.49 |

Table 4. Automated evaluation score comparisons using our best finetuned Llama 3.2 1B model.

Model |

Framework |

Device |

BERTScore |

Llama3.2 1B Pretrained |

PyTorch Safetensors |

MI300x |

75.72 |

Llama3.2 1B Pretrained |

ONNX |

MI300x |

77.06 |

Our LoRA-finetuned DDR model |

PyTorch |

MI300x |

79.59 |

| Our LoRA-finetuned DDR model | ONNX |

MI300x |

78.22 |

Our quantized LoRA-finetuned DDR model |

ONNX |

MI300x |

77.08 |

Our quantized LoRA-finetuned DDR model |

ONNX |

Ryzen AI 300 |

78.65 |

The example below shows sample generation prompt and response generated by finetuned model as well original instruct model.

Example prompt:

“<|begin_of_text|>Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n ### Instruction:\n You are a Rig Supervisor working at an oil and gas offshore drilling operation. Your company is currently on a drilling campaign and you are the on-site Drilling Engineer (DE). As a DE, one of your jobs is to oversee the operations at the drilling rigs. As such, you know the ins and outs of the operation, down to the hourly activities. Every day, activities are recorded either by the Driller, Mud Logger, MWD / LWD engineer or the Drilling Operations Coordinator throughout the day. As a DE representative for your company, you are required to prepare the 24-hour summary for the Daily Drilling Report (DDR) based on the hourly activities reported. You must always maintain the language of report along with the terminologies and mnemonics of the Drilling Engineer. \nGiven the following activities for well NO 15/9-F-11 B on 2016-04-15, please prepare the 24-hour summary for the Daily Drilling Report (DDR).\nOnly return the 24-hour summary, and nothing else.\n\n\n\n ### Input:\n 00:00 - 03:00: Spotted equipment on pipedeck according to spotting plan. Checked and and verified all equipment recieved.\n03:00 - 07:00: Prepared hoses and cables for hookup of surface equipment.\n07:00 - 07:30: Held pre-job meeting with day crew.\n07:30 - 09:00: Continued to prepare equipment prior to rig up.\n09:00 - 12:00: Started to verify equipment according to maersk checklist for hookup to rig.\n12:00 - 13:30: Lifted grease injection head and toolcatcher to drillfloor. Fed cable through GIH.\n13:30 - 16:00: Maersk electrician connected power to altus and SLB units. Meanwhile, lifted PCE equipment to drillfloor.\n16:00 - 16:30: Held toolbox talk with involved personell prior to start building riser.\n16:30 - 19:00: Built riser and lubricator section on catwalk using tugger. Meanwhile, finalized and function tested WL winch. Schlumberger built cable head.\n19:00 - 23:45: Schlumberger tested primary BHA. Meanwhile, leak tested BOP on stump to 20/345 bar for 5/10 min. Function tested BOP rams.\n23:45 - 00:00: Production department started to close in well for handover.\n\n\n\n ### Response:\n\n "

Example Response– LoRA-Finetuned DDR Model on STRIX HALO

“Spotted equipment on pipedeck according to spotting plan. Lifted grease injection head and toolcatcher to drillfloor. Maersk electrician connected power to altus and SLB units. Built riser and lubricator section on catwalk using tugger. Function tested BOP rams. Leak tested BOP on stump to 20/345 bar for 5/10 min. Function tested BOP rams.\ufffd”

Example Response – Pretrained Llama3.2 1B Model

“00:00 - 03:00: Spotted equipment on pipedeck according to spotting plan. Checked and and verified all equipment recieved.\n03:00 - 07:00: Prepared hoses and cables for hookup of surface equipment.\n07:00 - 07:30: Held pre-job meeting with day crew.\n07:30 - 09:00: Continued to prepare equipment prior to rig up.\n09:00 - 12:00: Started to verify equipment according to maersk checklist for hookup to rig.\n12:00 - 13:30: Lifted grease injection head and toolcatcher to drillfloor. Fed cable through GIH.\n13:30 - 16:00: Maersk electrician connected power to altus and SLB units. Meanwhile, lifted PCE equipment to drillfloor.\n16:00 - 16:30: Held toolbox talk with involved personell prior to start building riser.\n16:30 - 19:00: Built riser and lubricator section on catwalk using tugger. Meanwhile, finalized and function tested WL winch. Schlumberger built cable head.\n19:00 - 23:45: Schlumberger tested primary BHA. Meanwhile, leak tested BOP on stump to 20/345 bar for 5/10 min. Function tested BOP rams.\n23:45 - 00:00: Production department started to close in well for handover."

Conclusion

Model and algorithmic innovations are driving rapid improvements in the capability of LLMs and PEFT techniques enable lightweight approaches to finetuning local LLMs on private data, for domain-specific tasks. In this blog, we harness the capabilities of AMD Ryzen AI architecture, effectively utilizing both the dedicated NPU and iGPU, to efficiently deploy a domain-specific finetuned LLM. We finetune the LLM on MI300 GPUs. We demonstrate that model quantization and inference-specific optimizations to deploy on AMD Ryzen AI devices maintain the LLM inference quality. Next, we are working towards enabling multiple finetuned adapters on AMD Ryzen AI.

Get Started Today

If you have a Ryzen-AI powered laptop, get started today using the examples in the blog and the reference links below to deploy and run inference on a custom finetuned model, locally, on your laptop.

- Accompanying Github Repo: https://github.com/AMD-AI/RyzenAI-SFT

- Accompanying Model Collection: https://huggingface.co/collections/amd/ryzenai-finetuned-local-llms-67fd7585d2c9d1369d24fe11

- Llama3.2 1B Finetuned: https://huggingface.co/amd/volve-llama3.2-1b

- Llama3.2 1B Finetuned - Hybrid Flow: https://huggingface.co/amd/volve-llama3.2-1b-hybrid

- RyzenAI software stack: Overview — Ryzen AI Software 1.4 documentation

- Currently support many other LLMs: Overview — Ryzen AI Software 1.4 documentation

References

- Quark model optimization: Welcome to AMD Quark Documentation! — Quark 0.8 documentation

- PEFT Hugging Face: https://huggingface.co/docs/peft/en/index

- Finetuning on MI300x: Fine-tuning and inference — ROCm Documentation

- Accelerate DeepSeek on Ryzen AI: Accelerate DeepSeek R1 Distilled Models Locally on AMD Ryzen™ AI NPU and iGPU