AI Inference Performance Validation on AMD Instinct™ MI300X Accelerators Using vLLM Docker Image

Nov 22, 2024

Overview

AMD provides a fully optimized vLLM docker image designed for efficient inference of large language models (LLMs) on AMD Instinct™ MI300X accelerators. The pre-built image, configured with recommended performance settings, offers an efficient way to validate benchmark performance on MI300X accelerators.

Why Use the vLLM Docker Image?

The vLLM docker image contains ROCm™ 6.2.1, vLLM 0.6.4, and PyTorch 2.5.0, and implements key performance and ease-of-use optimizations.

Optimizations:

- Manages all ROCm dependencies, enabling developers to quickly validate expected inference performance.

- Integrates high-performance, Composable Kernel (CK)-compatible Flash Attention v2 custom kernels and modules into vLLM to improve performance.

- Skinny GEMM optimization for low-batch decoding, which makes matrix multiplication faster and more efficient for small batches.

- Pre-configured, ready-to-use tunable ops (PyTorch TunableOp) for FP16/FP8, for faster and more efficient processing in deep learning and numerical simulation applications.

- Triton-compatible Flash Attention v2, which combines the efficiency of Flash Attention with the optimization power of the Triton framework for faster, scalable attention computation.

- ROCm-compatible custom paged attention, which breaks the key-value (KV) cache of long sequences into manageable “pages” to reduce memory overhead and improve efficiency.

- The latest hipBLASLt library for high-performance linear algebra operations on FP8 data types.
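To make the paged attention idea more concrete, here is a toy Python sketch of the bookkeeping involved: the KV cache is modeled as a pool of fixed-size blocks, and each sequence keeps a block table that maps its logical pages to physical blocks. The class ToyPagedKVCache and its methods are hypothetical illustrations, not the vLLM or ROCm implementation, which manages GPU tensors inside custom kernels rather than Python lists.

```python
class ToyPagedKVCache:
    """Toy model of paged KV-cache bookkeeping (illustrative only)."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size                 # tokens stored per page
        self.free_blocks = list(range(num_blocks))   # physical pages not in use
        self.block_tables = {}                       # seq_id -> list of physical pages
        self.seq_lens = {}                           # seq_id -> tokens stored so far

    def append_token(self, seq_id: int) -> tuple:
        """Reserve a KV slot for one new token; return (physical_block, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        pos = self.seq_lens.get(seq_id, 0)
        if pos % self.block_size == 0:
            # The sequence has no page yet, or its last page is full.
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks left")
            table.append(self.free_blocks.pop(0))
        self.seq_lens[seq_id] = pos + 1
        return table[pos // self.block_size], pos % self.block_size

    def release(self, seq_id: int) -> None:
        """Return a finished sequence's pages to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


cache = ToyPagedKVCache(num_blocks=4, block_size=2)
print([cache.append_token(0) for _ in range(3)])  # [(0, 0), (0, 1), (1, 0)]
print(cache.append_token(1))                      # (2, 0): a new sequence gets its own page
cache.release(0)
print(cache.free_blocks)                          # [3, 0, 1]
```

Because only the last page of each sequence can be partially filled, at most block_size - 1 slots per sequence sit unused, instead of memory being reserved for the maximum sequence length up front.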

In this blog, we show you how to quickly validate the expected inference performance numbers on Instinct MI300X accelerators.

Reproducing the Benchmark Results

To make benchmarking easy, we have reduced the process to three simple steps. Depending on your needs, you can reproduce the results in one of two ways: Model Automation and Dashboarding (MAD) or standalone benchmarking for inference. MAD benchmarking helps developers gather comprehensive performance metrics from the docker image. Standalone benchmarking is useful when you need to tweak or debug the code or the docker container at runtime.

MAD-Integrated Benchmarking: Latency and Offline Throughput

Installation

Step 1: Download the docker image on the host machine.

docker pull rocm/vllm:rocm6.2_mi300_ubuntu20.04_py3.9_vllm_0.6.4

For MAD Integrated Benchmarking

Step 2: Clone the ROCm MAD repository.

git clone https://github.com/ROCm/MAD

cd MAD

pip install -r requirements.txt

Run the Performance Benchmark Test

Step 3: Run a performance benchmark test (example: Llama 3.1 8B).

export MAD_SECRETS_HFTOKEN="your personal Hugging Face token to access gated models"

python3 tools/run_models.py --tags pyt_vllm_llama-3.1-8b --keep-model-dir --live-output --timeout 28800

The latency and throughput reports of the model are collected in the following path:

~/run_directory/reports_float16/summary/Meta-Llama-3.1-8B-Instruct_latency_report.csv

~/run_directory/reports_float16/summary/Meta-Llama-3.1-8B-Instruct_throughput_report.csv
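The reports are plain CSV files, so a few lines of Python are enough to inspect them. This is a minimal standard-library sketch; the function name load_report is hypothetical, and because this post does not document the report columns, the code reads whatever header row the file has instead of assuming specific column names. The same helper works for the standalone reports under ~/reports_float16/summary.

```python
import csv
import os


def load_report(path: str) -> list:
    """Read one summary CSV into a list of {column name: value} dicts."""
    with open(os.path.expanduser(path), newline="") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    summary = "~/run_directory/reports_float16/summary"
    for kind in ("latency", "throughput"):
        rows = load_report(f"{summary}/Meta-Llama-3.1-8B-Instruct_{kind}_report.csv")
        print(f"{kind}: {len(rows)} rows")
        for row in rows:
            print(row)
```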

To measure the performance of other models, replace the value of the --tags argument with one of the following tags:

| Model name | FP8 variant |
|---|---|
| pyt_vllm_llama-3.1-8b | pyt_vllm_llama-3.1-8b_fp8 |
| pyt_vllm_llama-3.1-70b | pyt_vllm_llama-3.1-70b_fp8 |
| pyt_vllm_llama-3.1-405b | pyt_vllm_llama-3.1-405b_fp8 |
| pyt_vllm_llama-2-7b | |
| pyt_vllm_llama-2-70b | |
| pyt_vllm_mixtral-8x7b | pyt_vllm_mixtral-8x7b_fp8 |
| pyt_vllm_mixtral-8x22b | pyt_vllm_mixtral-8x22b_fp8 |
| pyt_vllm_mistral-7b | |
| pyt_vllm_qwen2-7b | |
| pyt_vllm_qwen2-72b | |
| pyt_vllm_jais-13b | |
| pyt_vllm_jais-30b | |
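To benchmark several of these models in one session, you can loop over the tags. The sketch below (hypothetical helper mad_commands) reuses exactly the flags from Step 3 and launches one tools/run_models.py invocation per tag, rather than assuming that --tags accepts several values at once. Run it from the MAD checkout with MAD_SECRETS_HFTOKEN exported.

```python
import subprocess


def mad_commands(tags, timeout_s: int = 28800) -> list:
    """Build one tools/run_models.py command per MAD tag (flags as in Step 3)."""
    return [
        ["python3", "tools/run_models.py", "--tags", tag,
         "--keep-model-dir", "--live-output", "--timeout", str(timeout_s)]
        for tag in tags
    ]


if __name__ == "__main__":
    for cmd in mad_commands(["pyt_vllm_llama-3.1-8b", "pyt_vllm_llama-3.1-8b_fp8"]):
        # check=True stops the sweep at the first failing model.
        subprocess.run(cmd, check=True)
```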

Standalone Benchmarking: Latency and Offline Throughput

Installation

Step 1: Download the docker image on the host machine and start a container.

docker pull rocm/vllm:rocm6.2_mi300_ubuntu20.04_py3.9_vllm_0.6.4

docker run -it --device=/dev/kfd --device=/dev/dri --group-add video --shm-size 128G --security-opt seccomp=unconfined --security-opt apparmor=unconfined --cap-add=SYS_PTRACE -v $(pwd):/workspace --env HUGGINGFACE_HUB_CACHE=/workspace --name vllm_v0.6.4 rocm/vllm:rocm6.2_mi300_ubuntu20.04_py3.9_vllm_0.6.4

Clone the ROCm MAD Repository Inside the Docker Image

Step 2: Now, clone the ROCm MAD repository inside the docker image.

git clone https://github.com/ROCm/MAD

cd MAD/scripts/vllm

Run the Performance Benchmark Test

Step 3: Run a performance benchmark test (example: Llama 3.1 8B).

export HF_TOKEN="your personal Hugging Face token to access gated models"

./vllm_benchmark_report.sh -s all -m meta-llama/Meta-Llama-3.1-8B-Instruct -g 1 -d float16

The latency and throughput reports of the model are collected in the following path:

~/reports_float16/summary/Meta-Llama-3.1-8B-Instruct_latency_report.csv

~/reports_float16/summary/Meta-Llama-3.1-8B-Instruct_throughput_report.csv

To measure the performance of other models under different conditions, set the -s, -m, -g, and -d arguments of ./vllm_benchmark_report.sh -s $test_option -m $model_repo -g $num_gpu -d $datatype to the following values:

| Name | Options | Description |
|---|---|---|
| $test_option | latency | Measure end-to-end latency |
| | throughput | Measure token generation throughput |
| | all | Measure both throughput and latency |
| $model_repo (float16) | meta-llama/Meta-Llama-3.1-8B-Instruct | Llama 3.1 8B |
| | meta-llama/Meta-Llama-3.1-70B-Instruct | Llama 3.1 70B |
| | meta-llama/Meta-Llama-3.1-405B-Instruct | Llama 3.1 405B |
| | meta-llama/Llama-2-7b-chat-hf | Llama 2 7B |
| | meta-llama/Llama-2-70b-chat-hf | Llama 2 70B |
| | mistralai/Mixtral-8x7B-Instruct-v0.1 | Mixtral 8x7B |
| | mistralai/Mixtral-8x22B-Instruct-v0.1 | Mixtral 8x22B |
| | mistralai/Mistral-7B-Instruct-v0.3 | Mistral 7B |
| | Qwen/Qwen2-7B-Instruct | Qwen2 7B |
| | Qwen/Qwen2-72B-Instruct | Qwen2 72B |
| | core42/jais-13b-chat | JAIS 13B |
| | core42/jais-30b-chat-v3 | JAIS 30B |
| $model_repo (float8) | amd/Meta-Llama-3.1-8B-Instruct-FP8-KV | Llama 3.1 8B |
| | amd/Meta-Llama-3.1-70B-Instruct-FP8-KV | Llama 3.1 70B |
| | amd/Meta-Llama-3.1-405B-Instruct-FP8-KV | Llama 3.1 405B |
| | amd/Mixtral-8x7B-Instruct-v0.1-FP8-KV | Mixtral 8x7B |
| | amd/Mixtral-8x22B-Instruct-v0.1-FP8-KV | Mixtral 8x22B |
| $num_gpu | 1 or 8 | Number of GPUs |
| $datatype | float16, float8 | Data type |
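Because the float8 runs use the amd/*-FP8-KV checkpoints listed in the table, a small wrapper can keep $model_repo and $datatype consistent and reject values outside the table. This is a hypothetical helper, not part of MAD; it infers -d float8 from the -FP8-KV suffix, which is an assumption based on the repository names above, and it only builds the argument list before handing it to the script.

```python
import subprocess

VALID_TESTS = ("latency", "throughput", "all")
VALID_GPU_COUNTS = (1, 8)  # the values listed for $num_gpu


def report_command(model_repo: str, num_gpu: int, test_option: str = "all") -> list:
    """Build the argv for ./vllm_benchmark_report.sh with a matching datatype."""
    if test_option not in VALID_TESTS:
        raise ValueError(f"test_option must be one of {VALID_TESTS}")
    if num_gpu not in VALID_GPU_COUNTS:
        raise ValueError(f"num_gpu must be one of {VALID_GPU_COUNTS}")
    # Assumption: the FP8 checkpoints are exactly the repos ending in -FP8-KV.
    datatype = "float8" if model_repo.endswith("-FP8-KV") else "float16"
    return ["./vllm_benchmark_report.sh", "-s", test_option, "-m", model_repo,
            "-g", str(num_gpu), "-d", datatype]


if __name__ == "__main__":
    # Run inside the container from MAD/scripts/vllm with HF_TOKEN exported.
    cmd = report_command("amd/Meta-Llama-3.1-70B-Instruct-FP8-KV", num_gpu=8)
    subprocess.run(cmd, check=True)
```

If your copy of the script accepts GPU counts other than those in the table, relax VALID_GPU_COUNTS accordingly.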

Benchmarking Conditions

Developers can switch between models and run either the complete set of tests (-s all) or only the tests they need to evaluate a model's performance. When assessing performance, it is important to consider both latency and throughput. Latency measures the time the model takes to process a single batch of requests, from the moment the input tokens are provided until the output tokens are received. In contrast, offline throughput measures how many output tokens are generated per unit of time across many requests with different input and output length combinations. You can also change these conditions by editing ./vllm_benchmark_report.sh.
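The two metrics differ only in what is divided by the elapsed time. As a minimal sketch, assuming a hypothetical batched generate callable that returns the number of output tokens produced for each prompt (a stand-in for a real vLLM call), latency is the wall-clock time of one batch, and throughput is the work completed divided by that time.

```python
import time
from typing import Callable, List, Sequence


def measure(generate: Callable[[Sequence[str]], List[int]], prompts: Sequence[str]) -> dict:
    """Time one batched call and derive latency and throughput from it."""
    start = time.perf_counter()
    output_tokens = generate(prompts)  # output token count for each prompt
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return {
        "latency_s": elapsed,  # wall-clock time for the whole batch
        "requests_per_s": len(prompts) / elapsed,
        "output_tokens_per_s": sum(output_tokens) / elapsed,
    }


def fake_generate(prompts):
    """Hypothetical stand-in for a model call: each prompt yields 128 tokens."""
    time.sleep(0.01)
    return [128 for _ in prompts]


print(measure(fake_generate, ["hello"] * 4))
```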

Preconfigured latency measurement conditions

| Scenario | Batch sizes (one run per value) | Input length (tokens) | Output length (tokens) |
|---|---|---|---|
| Short prefill | 1, 2, 4, 8, 16, 32, 64, 128, 256 | 128 | 1 |
| Long prefill | 1, 2, 4, 8, 16, 32, 64, 128, 256 | 2048 | 1 |
| Decode | 1, 2, 4, 8, 16, 32, 64, 128, 256 | 1 | 128 |
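If you want to reproduce part of this grid outside the report script, the sketch below enumerates all 27 latency runs (3 scenarios × 9 batch sizes) and builds an argument list for each. The flag names follow vLLM's benchmarks/benchmark_latency.py (see the benchmark scripts link under Additional resources); treat them, the relative script path, and the helper name latency_runs as assumptions to check against the vLLM version in your container, since ./vllm_benchmark_report.sh may invoke the benchmark differently.

```python
from itertools import product

BATCH_SIZES = [1, 2, 4, 8, 16, 32, 64, 128, 256]
# scenario -> (input_len, output_len), copied from the latency table above
SCENARIOS = {
    "short_prefill": (128, 1),
    "long_prefill": (2048, 1),
    "decode": (1, 128),
}


def latency_runs(model: str, tp_size: int = 1):
    """Yield (scenario, argv) for every row of the latency table.

    Assumption: flag names of vLLM's benchmarks/benchmark_latency.py.
    """
    for (name, (input_len, output_len)), batch_size in product(SCENARIOS.items(), BATCH_SIZES):
        argv = [
            "python3", "benchmarks/benchmark_latency.py",
            "--model", model,
            "--tensor-parallel-size", str(tp_size),
            "--batch-size", str(batch_size),
            "--input-len", str(input_len),
            "--output-len", str(output_len),
        ]
        yield name, argv


if __name__ == "__main__":
    for name, argv in latency_runs("meta-llama/Meta-Llama-3.1-8B-Instruct"):
        print(name, " ".join(argv))
```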

Preconfigured throughput measurement conditions

| Requests | Input length (tokens) | Output length (tokens) |
|---|---|---|
| 30000 | 128 | 128 |
| 3000 | 2048 | 128 |
| 3000 | 128 | 2048 |
| 1500 | 2048 | 2048 |
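Since the request count differs by row, it helps to compute how many tokens each configuration processes in total. This worked example only multiplies the values in the table (the helper name token_budget is hypothetical); dividing a run's generated-token total by its elapsed time gives its output-token throughput.

```python
# (requests, input_len, output_len) for each row of the throughput table above
THROUGHPUT_CONFIGS = [
    (30000, 128, 128),
    (3000, 2048, 128),
    (3000, 128, 2048),
    (1500, 2048, 2048),
]


def token_budget(requests: int, input_len: int, output_len: int) -> dict:
    """Total prompt tokens read and output tokens generated by one run."""
    return {
        "prompt_tokens": requests * input_len,
        "output_tokens": requests * output_len,
    }


for requests, input_len, output_len in THROUGHPUT_CONFIGS:
    budget = token_budget(requests, input_len, output_len)
    print(f"{requests:>6} x ({input_len} in, {output_len} out): {budget}")
```

For instance, the first row generates 30000 × 128 = 3,840,000 output tokens, while the prefill-heavy 3000 × 2048 row reads 6,144,000 prompt tokens but generates only 384,000.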

Building an Interactive Chatbot

Setting up the vLLM Backend Server

Step 1: Download the docker image and the api_server.py script on the host machine.

docker pull rocm/vllm:rocm6.2_mi300_ubuntu20.04_py3.9_vllm_0.6.4

wget https://raw.githubusercontent.com/ROCm/vllm/a466f09d7f20ca073f21e3f64b8c9487e4c4ff4b/vllm/entrypoints/sync_openai/api_server.py

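Step 2: Run the vLLM server on 4 GPUs (example: Llama 3.1 405B FP8). The command passes your Hugging Face token into the container through HF_TOKEN, so export it in your shell first.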

DOCKER_IMG=rocm/vllm:rocm6.2_mi300_ubuntu20.04_py3.9_vllm_0.6.4

MODEL=amd/Meta-Llama-3.1-405B-Instruct-FP8-KV

PORT=8080

docker run --rm --device=/dev/kfd --device=/dev/dri --group-add video --shm-size 128G -p $PORT:8011 --security-opt seccomp=unconfined --security-opt apparmor=unconfined --cap-add=SYS_PTRACE -v $(pwd):/workspace --env HUGGINGFACE_HUB_CACHE=/workspace --env VLLM_USE_TRITON_FLASH_ATTN=0 --env PYTORCH_TUNABLEOP_ENABLED=1 --env HF_TOKEN=$HF_TOKEN $DOCKER_IMG python3 /workspace/api_server.py \
  --model $MODEL \
  --swap-space 16 \
  --disable-log-requests \
  --dtype float16 \
  --tensor-parallel-size 4 \
  --host 0.0.0.0 \
  --port 8011 \
  --num-scheduler-steps 1 \
  --distributed-executor-backend "mp"

Step 3: Wait until the server is ready.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8011 (Press CTRL+C to quit)
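Loading a 405B model can take a while, so when scripting the client steps it is convenient to wait for the server automatically instead of watching the log. This is a minimal standard-library sketch (the helper name wait_until_serving is hypothetical). It treats any HTTP response, including an error status such as 404, as evidence that the server is up, because this post does not document a dedicated health endpoint for api_server.py. A plain TCP check is avoided on purpose, since Docker's published host port (8080 here) can accept connections before the server inside the container is listening.

```python
import time
import urllib.error
import urllib.request


def wait_until_serving(url: str, timeout_s: float = 3600.0, poll_s: float = 10.0) -> bool:
    """Poll url until an HTTP server answers or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=poll_s):
                return True  # 2xx/3xx: the server answered
        except urllib.error.HTTPError:
            return True  # 4xx/5xx still means an HTTP server answered
        except OSError:
            pass  # refused, reset, or timed out: not serving yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


if __name__ == "__main__":
    print("ready" if wait_until_serving("http://localhost:8080/") else "timed out")
```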

Access the vLLM Server from the Client

Step 1: Open a new terminal on the host server and send a request to the server from the command line.

curl http://localhost:8080/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "amd/Meta-Llama-3.1-405B-Instruct-FP8-KV",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Who won the world series in 2020?"}
    ]
  }'

This gives the following response from the vLLM server.

{"id":"chatcmpl-ce25f1a0b9a84b2b834af904e513ff41","object":"chat.completion","created":1730892805,"model":"amd/Meta-Llama-3.1-405B-Instruct-FP8-KV","choices":[{"index":0,"message":{"role":"assistant","content":"The Los Angeles Dodgers won the 2020 World Series. They defeated the Tampa Bay Rays in the series 4 games to 2, winning the final game on October 27, 2020.","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":52,"total_tokens":94,"completion_tokens":42},"prompt_logprobs":null}

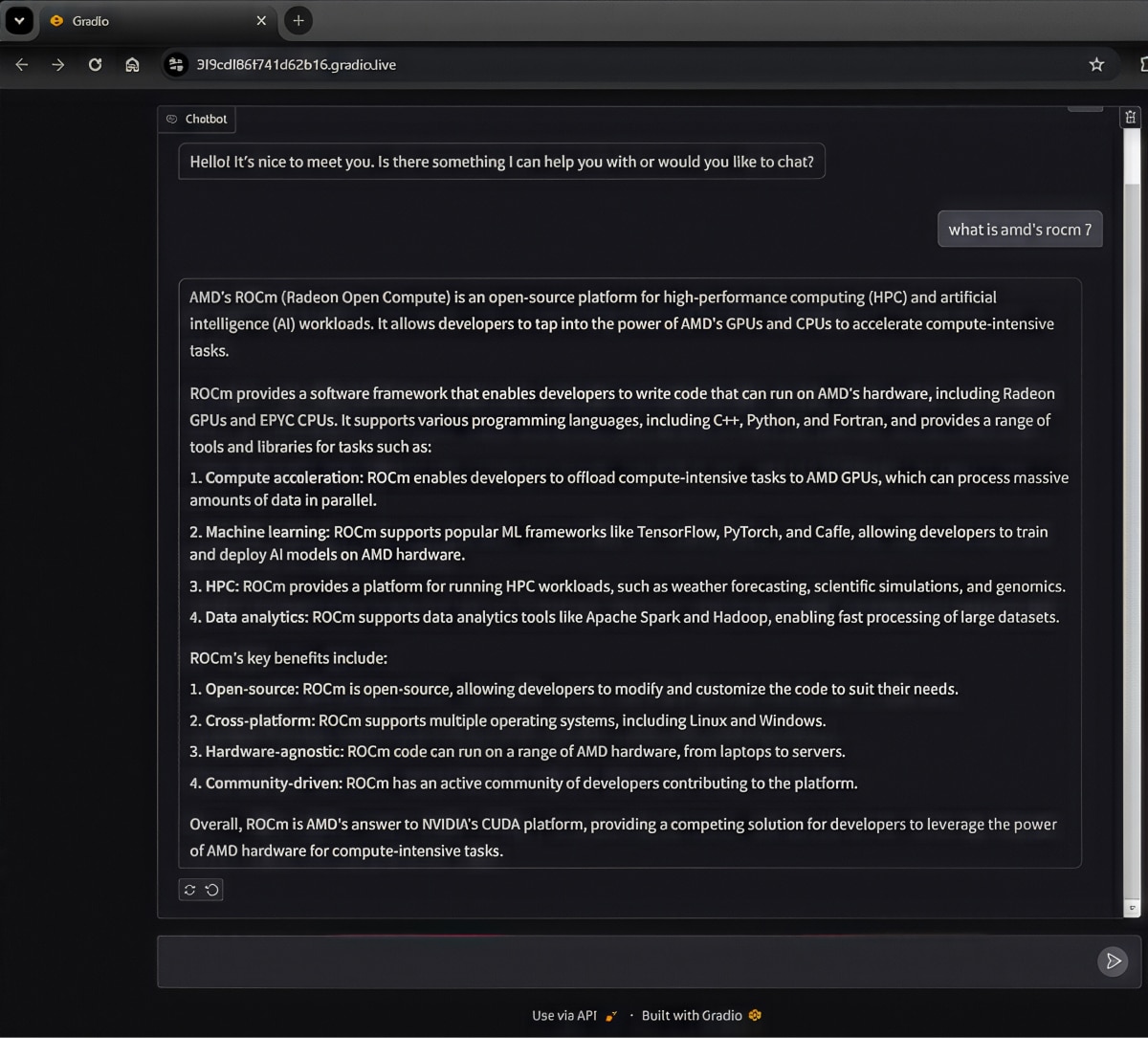
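The same request can be sent from Python. This sketch uses only the standard library (urllib and json), so it needs no extra packages; build_chat_request and parse_chat_response are hypothetical helper names, and the parsing assumes the OpenAI-style response layout shown above (choices[0].message.content and usage).

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, messages: list) -> urllib.request.Request:
    """Build the same POST /chat/completions request that the curl command sends."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_chat_response(raw: str) -> tuple:
    """Return (assistant_text, usage_dict) from an OpenAI-style response body."""
    payload = json.loads(raw)
    return payload["choices"][0]["message"]["content"], payload["usage"]


if __name__ == "__main__":
    req = build_chat_request(
        "http://localhost:8080/v1",
        "amd/Meta-Llama-3.1-405B-Instruct-FP8-KV",
        [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Who won the world series in 2020?"},
        ],
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        text, usage = parse_chat_response(resp.read().decode("utf-8"))
    print(text)
    print(usage)
```

In the response above, usage.total_tokens is the sum of prompt_tokens and completion_tokens (52 + 42 = 94).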

Step 2: Set up the Gradio web UI on the host server.

wget https://raw.githubusercontent.com/ROCm/vllm/a466f09d7f20ca073f21e3f64b8c9487e4c4ff4b/examples/gradio_openai_chatbot_webserver.py

pip install gradio openai

python3 gradio_openai_chatbot_webserver.py --model-url http://localhost:8080/v1 --model amd/Meta-Llama-3.1-405B-Instruct-FP8-KV --temp 0.9

This creates a public URL. Your URL will differ from the example https://3f9cdf86f741d62b16.gradio.live shown below.

* Running on local URL: http://127.0.0.1:8001

* Running on public URL: https://3f9cdf86f741d62b16.gradio.live

Open the public URL (in this example, https://3f9cdf86f741d62b16.gradio.live) in a web browser.

What’s Next?

AMD collaborates with innovators like vLLM and other partners to deliver performance and ease of use across diverse workloads. This approach empowers developers to drive innovation, optimize applications, and scale efficiently while benefiting from a flexible, community-driven environment.

Use the docker image above to validate AI inference benchmark performance on Instinct MI300X accelerators. To learn how Fireworks AI, an AMD partner, used the vLLM docker image for inference and benchmarking, read FireAttention V3: Enabling AMD as a Viable Alternative for GPU Inference.

Additional resources

- For an overview of the optional performance features of vLLM with ROCm software, visit https://github.com/ROCm/vllm/blob/main/ROCm_performance.md.

- To learn more about the options for latency and throughput benchmark scripts, visit https://github.com/ROCm/vllm/tree/main/benchmarks.

- To stay ahead of the curve in AI innovation for inference, visit AMD Infinity Hub.

- To learn how to run LLMs from Hugging Face or your own model, visit Using ROCm for AI. To learn how to optimize inference on LLMs, visit Fine-tuning LLMs and inference optimization. For a list of other ready-made Docker images for ROCm, check out the ROCm Docker image support matrix.