ZenDNN 5.0: Supercharge AI on AMD EPYC™ Server CPUs

Jan 08, 2025

Contributors: Padmini Gopalakrishnan, Priya Savithri Baskaran, Jereshea John Mary

Overview

Nearly half a year has passed since the debut of ZenDNN 4.2, a deep neural network inference acceleration library optimized for the AMD “Zen” CPU architecture. That release revamped the library's internal architecture and introduced plugins for PyTorch and TensorFlow, providing a seamless experience for users running deep learning workloads in these frameworks on AMD EPYC™ processor-based servers. Since then, the AMD team has worked hard to deliver additional enhancements and performance optimizations, with a focus on generative large language models running on servers powered by the recently launched 5th Gen AMD EPYC processors.

We are thrilled to introduce ZenDNN 5.0—a powerful upgrade for deep learning on AMD EPYC CPUs, combining optimizations and leading-edge support for modern workloads. We are confident that the enhanced performance and capabilities of ZenDNN 5.0 will inspire and elevate your work.

Release Highlights

ZenDNN 5.0 comes with a slew of new features summarized below:

- Robust Support for 5th Gen AMD EPYC Processors: The ZenDNN 5.0 release focuses on delivering robust support for the new 5th Gen AMD EPYC processor, formerly codenamed “Turin,” along with significant performance enhancements for both generative and non-generative large language models (LLMs) via the ZenDNN Plugin for PyTorch (a.k.a. Zentorch).

- Compatibility with AOCL BLIS 5.0: This release is compatible with AOCL BLIS 5.0 and includes AMD EPYC processor-specific optimizations for matrix multiplication (matmul) operators and related fusions, particularly tailored for BF16 precision.

- Advanced Auto-Tuning for LLMs: ZenDNN 5.0 features a specialized auto-tuning algorithm (BF16:0) designed to optimize generative LLMs such as Llama2, Llama3, Phi2, Phi3, Qwen, ChatGLM, and GPT.

- Support for INT4 Weight-Only Quantization (WOQ): This release adds support for weight-only quantization (WOQ) with INT4 weights, produced by the Activation-Aware Weight Quantization (AWQ) algorithm, and BF16 activations, allowing seamless integration with models optimized by the AMD Quark quantizer.

- New API for Generative LLMs: Additional performance boosts for generative LLMs are accessible through the zentorch.llm.optimize() function in the ZenDNN PyTorch plug-in, which provides AMD EPYC processor-specific enhancements on top of the x86 optimizations available in ipex.llm.optimize().

- Optimized Scaled Dot Product Attention (SDPA): Includes a specialized SDPA operator with KV cache optimizations tailored to the cache hierarchy of AMD EPYC processors.

- BF16 Precision for Recommender Systems: BF16 precision support is now available for Recommender System models, along with graph optimization and pattern matching improvements within the ZenDNN Plugin for PyTorch.

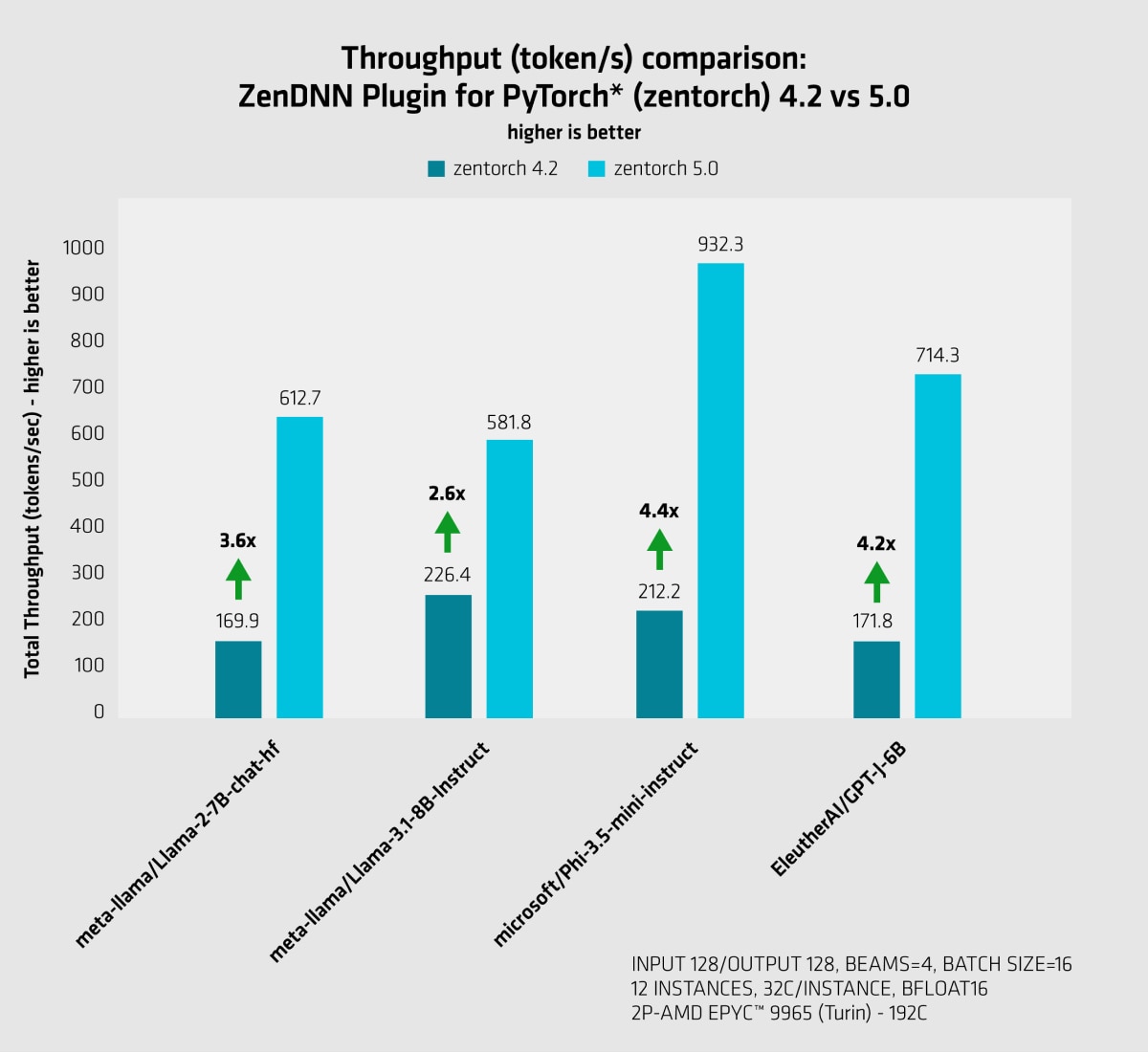

400% Performance Uplift

We began this blog by emphasizing the focused efforts of AMD engineers in developing ZenDNN 5.0. However, results speak louder than words. To demonstrate the work we’ve done, we tested a range of models using both the previous ZenDNN 4.2 release and the new ZenDNN 5.0 on a 5th Gen AMD EPYC processor, featuring a whopping 192 cores per socket. Leveraging this core density, we conducted multi-instance inferences, allocating 32 cores per instance, and using `numactl` to physically bind each instance to dedicated core batches. Detailed specifications for the hardware setup and software environment are provided in the footnotes of this blog.
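To make the multi-instance layout concrete, here is a minimal Python sketch that prints one `numactl` launch line per instance. It assumes physical cores are numbered contiguously from 0 to 383 across both sockets (check with `lscpu` on your system), and `run_inference.py` is a hypothetical placeholder for your own inference script, not something shipped with ZenDNN.

```python
import shlex


def plan_instances(total_cores=384, cores_per_instance=32, script="run_inference.py"):
    """Return one (core_range, command) pair per inference instance.

    Assumption: physical cores are numbered 0..total_cores-1 and each
    contiguous block of IDs stays within one socket. `script` is a
    hypothetical placeholder name.
    """
    if total_cores % cores_per_instance != 0:
        raise ValueError("total_cores must be a multiple of cores_per_instance")

    plans = []
    for start in range(0, total_cores, cores_per_instance):
        end = start + cores_per_instance - 1
        core_range = f"{start}-{end}"
        # Bind the process to its dedicated block of physical cores.
        argv = ["numactl", f"--physcpubind={core_range}", "python", script]
        # One OpenMP thread per bound core.
        command = f"OMP_NUM_THREADS={cores_per_instance} " + shlex.join(argv)
        plans.append((core_range, command))
    return plans


if __name__ == "__main__":
    for _core_range, command in plan_instances():
        print(command)
```

With the defaults this yields 12 instances of 32 cores each, matching the 384 cores described in the footnotes.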

The result? On average, an exceptional 400% performance uplift [ZD-057] from the ZenDNN 4.2 baseline. Impressive, isn’t it?

Run configurations: See Footnote

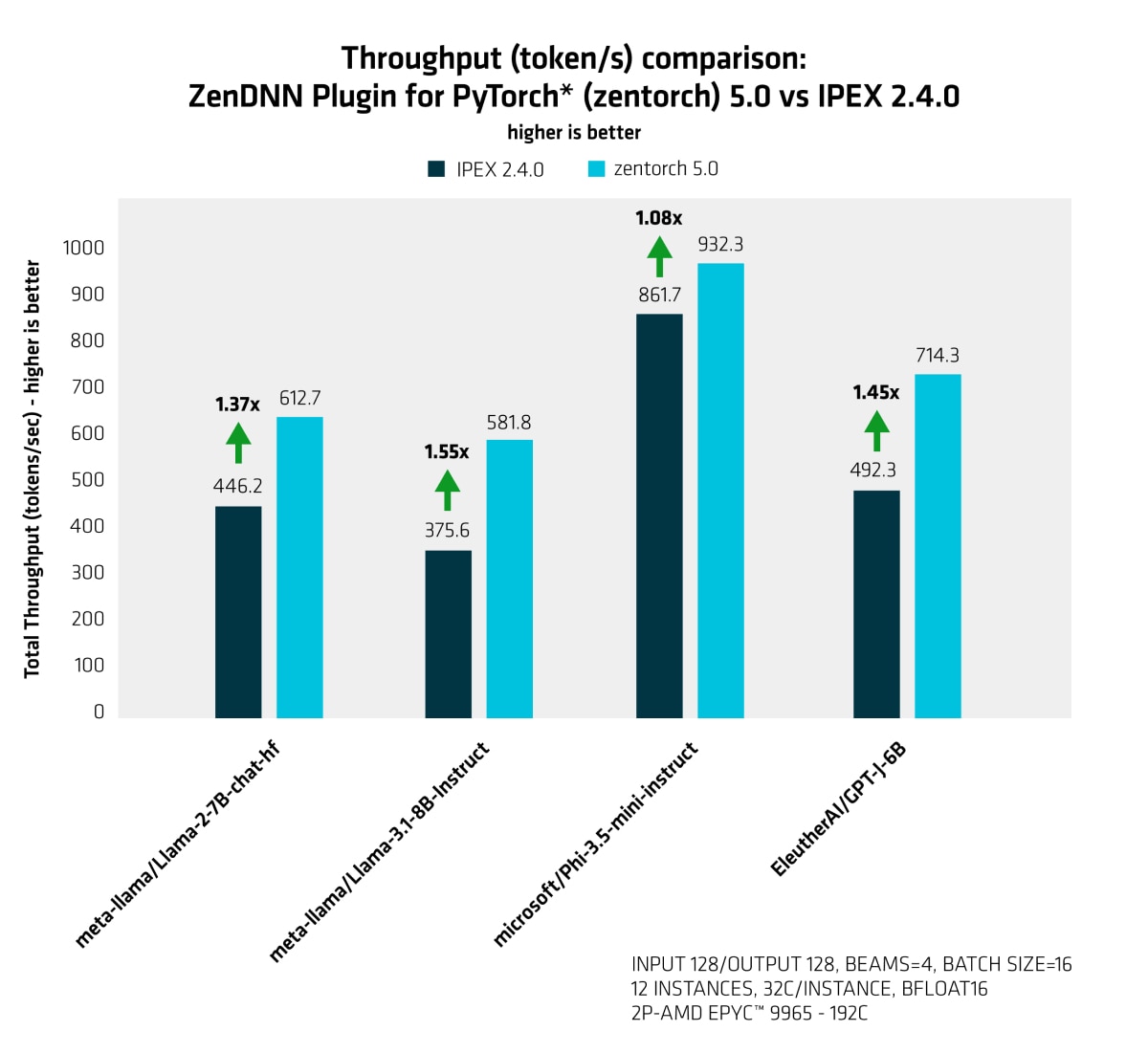

During the development of ZenDNN 5.0, we benchmarked against Intel Extension for PyTorch version 2.4.0, which was the latest available version at the time. The tests were conducted on identical hardware and included comparisons across several models. Key results are presented below.

Maximizing Performance Gains for Hugging Face Generative Large Language Models (LLMs)

The ZenDNN 5.0 Plugin for PyTorch introduces a powerful new API, zentorch.llm.optimize, tailored for optimizing Hugging Face generative LLMs. It delivers targeted performance enhancements with minimal effort: as few as three changed lines of code, one of which imports the zentorch plugin:

    import torch
    import zentorch

    # […]

    model = zentorch.llm.optimize(model, torch.bfloat16)
    model = torch.compile(model, backend='zentorch')

    with torch.no_grad():
        output = model(input)

    # […]

The code snippet above demonstrates the simplicity of integrating Zentorch optimizations. A similar pattern applies when using model.forward or model.generate. For a comprehensive overview of implementation details and full examples, refer to the recommendations and examples sections in the Zentorch user guide.

Checking Supported Models

To verify the list of generative models currently supported by zentorch.llm.optimize, you can execute the following command:

python -c 'import zentorch; print("\n".join([f"{i+1:3}. {item}" for i, item in enumerate(zentorch.llm.SUPPORTED_MODELS)]))'
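If the one-liner is hard to read, the same numbering logic can be unrolled into a short script. This is only a sketch: `format_numbered` is an illustrative helper name, and the script falls back to an empty list when zentorch is not installed.

```python
def format_numbered(items):
    """Number items from 1, right-aligning the index in a 3-character field."""
    return "\n".join(f"{i + 1:3}. {item}" for i, item in enumerate(items))


if __name__ == "__main__":
    try:
        # Only importable where the ZenDNN plugin for PyTorch is installed.
        import zentorch
        models = zentorch.llm.SUPPORTED_MODELS
    except ImportError:
        models = []
        print("zentorch is not installed in this environment")
    print(format_numbered(models))
```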

With every new release of ZenDNN, we will keep updating the list of supported models.

Making AI Efficient: Exploring INT4 Quantization Support

With the release of the ZenDNN 5.0 Plugin for PyTorch, support for INT4 weight-only quantization for generative LLMs has been introduced. ZenDNN 5.0 provides native support for models quantized with AMD Quark, a tool designed to quantize Hugging Face models efficiently.
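For readers new to weight-only quantization, the sketch below shows the basic idea behind a symmetric per-group INT4 scheme in plain Python: each group of weights shares one scale, and each weight is stored as an integer code in [-8, 7]. This is simple round-to-nearest, not AWQ itself (AWQ additionally uses activation statistics to rescale weights before quantizing), and the function names are illustrative rather than part of Quark or zentorch.

```python
def quantize_int4_sym(weights, group_size):
    """Symmetric per-group INT4 quantization by round-to-nearest.

    Returns (codes, scales): integer codes in [-8, 7] and one scale per group.
    Illustrative only; this is not the AWQ algorithm.
    """
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # Map the largest magnitude in the group to code 7; avoid dividing by 0.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize(codes, scales, group_size):
    """Reconstruct approximate weights from codes and per-group scales."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


if __name__ == "__main__":
    weights = [0.7, -0.3, 0.1, 0.0, 1.4, -1.0, 0.2, 0.6]
    codes, scales = quantize_int4_sym(weights, group_size=4)
    restored = dequantize(codes, scales, group_size=4)
    for w, c, r in zip(weights, codes, restored):
        print(f"weight={w:+.2f}  code={c:+d}  restored={r:+.3f}")
```

Only the weights are stored this way; activations stay in BF16, which is why the approach is called weight-only quantization.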

This guide provides a concise overview of the steps to get started with INT4 quantization using AMD Quark. For more detailed documentation, refer to the official AMD Quark website.

Quick Primer to AMD Quark

- Step 1: Install AMD Quark

  - Download AMD Quark and install the wheel file in your Python environment.

  - Note: At the time of writing, Quark version 0.5.0 was used for testing.

- Step 2: Utilize the Quantization Script

  - Within the downloaded package, locate the helper script quantize_quark.py in the examples/torch/language_modeling directory. This script uses AWQ (Activation-aware Weight Quantization) as the quantization algorithm.

- Step 3: Execute the Quantization Script

  - Run the following command, adjusting the placeholders as needed:

OMP_NUM_THREADS=<physical-cores-num> numactl --physcpubind=<physical-cores-list> python quantize_quark.py --model_dir <hugging_face_model_id> --device cpu --data_type bfloat16 --model_export quark_safetensors --quant_algo awq --quant_scheme w_int4_per_group_sym --group_size -1 --num_calib_data 128 --dataset pileval_for_awq_benchmark --seq_len 128 --output_dir <output_dir> --pack_method order
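If you prefer to drive the quantization run from Python (for example, to loop over several models), the command above can be assembled as an argument list. This is a minimal sketch: the flags are copied verbatim from the command, while the helper name and the example values (`./llama31-int4`, cores `0-31`) are hypothetical.

```python
import shlex


def build_quantize_command(model_id, output_dir, core_list, num_cores):
    """Return (env, argv) mirroring the quantize_quark.py command line.

    All flags are taken from the command shown above; nothing is added.
    """
    env = {"OMP_NUM_THREADS": str(num_cores)}
    argv = [
        "numactl", f"--physcpubind={core_list}",
        "python", "quantize_quark.py",
        "--model_dir", model_id,
        "--device", "cpu",
        "--data_type", "bfloat16",
        "--model_export", "quark_safetensors",
        "--quant_algo", "awq",
        "--quant_scheme", "w_int4_per_group_sym",
        "--group_size", "-1",
        "--num_calib_data", "128",
        "--dataset", "pileval_for_awq_benchmark",
        "--seq_len", "128",
        "--output_dir", output_dir,
        "--pack_method", "order",
    ]
    return env, argv


if __name__ == "__main__":
    # Hypothetical example values; substitute your own model, path, and cores.
    env, argv = build_quantize_command(
        "meta-llama/Llama-3.1-8B-Instruct", "./llama31-int4", "0-31", 32
    )
    print(" ".join(f"{k}={v}" for k, v in env.items()), shlex.join(argv))
    # To execute: subprocess.run(argv, env={**os.environ, **env}, check=True)
```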

Key Parameters:

- <physical-cores-num>: Total number of physical CPU cores (use lscpu to determine this).

- <hugging_face_model_id>: ID of a Hugging Face model (e.g., meta-llama/Llama-3.1-8B-Instruct).

- <output_dir>: Path on your system with sufficient disk space to store the INT4 quantized model (output files can consume several gigabytes).

- Step 4: Modify the Inference Script

Since Hugging Face currently does not support the AWQ format for CPUs, you need to add a code snippet to your inference script to load the weight-only quantized (WOQ) models:

    from transformers import AutoConfig, AutoModelForCausalLM
    import torch
    import zentorch

    # […]

    config = AutoConfig.from_pretrained(model_id, trust_remote_code=True, torch_dtype=torch.bfloat16)
    model = AutoModelForCausalLM.from_config(config, trust_remote_code=True, torch_dtype=torch.bfloat16)
    model = zentorch.load_woq_model(model, safetensor_path)

    # […]

safetensor_path: The <output_dir> path containing the quantized safetensor model.

Once the model is loaded, it can be used for inference similarly to the standard cases outlined in the documentation. By following these steps, you can quickly enable INT4 quantization for efficient LLM deployment. For further optimization tips and updates, continue to monitor AMD Quark resources and the Zentorch documentation.

Next Steps

We hope this blog has effectively highlighted the enhancements AMD has brought to inferencing on EPYC processor-based servers and inspired you to give ZenDNN 5.0 a try. We've walked you through several straightforward steps for integrating ZenDNN 5.0 into your workflow, along with a quick primer on INT4 weight-only quantization and how to use it with Zentorch. While this blog primarily focuses on the ZenDNN Plugin for PyTorch, you can explore further details on the ZenDNN Plugin for TensorFlow and ONNXRT with ZenDNN integrated on the ZenDNN Developer Hub.

You can also find in-depth information, including a comprehensive Performance Tuning Guide, in our detailed documentation.

Found a Bug?

Let us know by filing an issue on GitHub.

Footnotes

Run configurations:

- ZD-057: Testing conducted internally by AMD as of 11/14/2024. The environment settings for this configuration are as follows: The operating system is Ubuntu 11.4.0-1ubuntu1~22.04, running on an AMD EPYC 9965 192-Core Processor with 2 NUMA nodes. The Python version is 3.11.8, and PyTorch is set as follows: zentorch 4.2 corresponds to version 2.1.2+cpu, while zentorch 5.0 corresponds to version 2.4.0+cpu. IPEX was not needed for zentorch 4.2 but is set to version 2.4.0+cpu for zentorch 5.0. ZenDNN versions include 4.2 and 5.0-rc10, with Transformers library version 4.43.2. Each instance has 32 cores, totaling 12 instances, which equates to 384 cores in use (192 per socket). Datatype: BFloat16, Input sequence: 128, Output Sequence: 128, num_beams=4, batch_size=16.

For zentorch 4.2, Python version 3.11.8 is used, with `torch==2.1.2`, `torchvision==0.16.2`, and `torchaudio==2.1.2`. The Intel extension for PyTorch is set to `intel-extension-for-pytorch==2.1.100`, and OneCCL is configured as `oneccl_bind_pt==2.1.0`. Transformers version 4.43.2 is a source build, customized for Token Timings extraction. The LLVM OpenMP version is `llvm-openmp=18.1.8=hf5423f3_1`, and zentorch is set to `zentorch-4.2.0-cp311-cp311-manylinux2014_x86_64.whl`.

For zentorch 5.0, Python remains at version 3.11.8, with updated versions of `torch==2.4.0`, `torchvision==0.19.0`, and `torchaudio==2.4.0`. The Intel extension for PyTorch is updated to `intel-extension-for-pytorch==2.4.0`, and OneCCL to `oneccl_bind_pt==2.4.0`. Transformers version 4.43.2 is installed via pip. LLVM OpenMP remains the same as `llvm-openmp=18.1.8=hf5423f3_1`, and zentorch is updated to `zentorch-5.0.0-cp311-cp311-manylinux_2_28_x86_64.whl`.

For ZenDNN variables, ZENDNN_WEIGHT_CACHING is set to 1, ZENDNN_PRIMITIVE_CACHE_CAPACITY is set to 1024, and ZENDNN_MATMUL_ALGO is set to FP32:3 and BF16:0. OpenMP runtime (KMP) variables include KMP_REDUCTION_BARRIER_PATTERN, KMP_PLAIN_BARRIER_PATTERN, and KMP_FORKJOIN_BARRIER_PATTERN all set to "dist,dist"; KMP_BLOCKTIME is 1; KMP_TPAUSE is 0; and KMP_AFFINITY is set to "granularity=fine,compact,1,0".

Memory management uses JeMalloc with LD_PRELOAD paths set to /usr/local/lib/libjemalloc.so:/home/amd/anaconda3/envs/turin_42/lib/libiomp5.so and MALLOC_CONF configured with oversize_threshold:1, background_thread:true, metadata_thp:auto, dirty_decay_ms:-1, and muzzy_decay_ms:-1. OpenMP settings are OMP_NUM_THREADS set to 32 (matching the 32 cores per instance), OMP_WAIT_POLICY set to ACTIVE, and OMP_DYNAMIC set to FALSE.

| Model | zentorch 4.2.0 | zentorch 5.0.0 | IPEX 2.4.0 | zentorch 5.0.0 vs zentorch 4.2.0 | zentorch 5.0.0 vs IPEX 2.4.0 |
| --- | --- | --- | --- | --- | --- |
| meta-llama/Llama-2-7B-chat-hf | 169.9 | 612.7 | 446.2 | 3.61 | 1.37 |
| meta-llama/Llama-3.1-8B-Instruct | 226.4 | 581.8 | 375.6 | 2.57 | 1.55 |
| microsoft/Phi-3.5-mini-instruct | 212.2 | 932.3 | 861.7 | 4.39 | 1.08 |
| facebook/opt-1.3b | 652.7 | 1990.6 | 1708.4 | 3.05 | 1.17 |
| EleutherAI/GPT-J-6B | 171.8 | 714.3 | 492.3 | 4.16 | 1.45 |
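The two ratio columns in the table above are the zentorch 5.0.0 figure divided by the zentorch 4.2.0 and IPEX 2.4.0 figures respectively. The short sketch below recomputes them from the measured values copied from the table; the variable and function names are illustrative, and the unit of the measurements is not stated in this footnote.

```python
# Measured values copied from the table above:
# (zentorch 4.2.0, zentorch 5.0.0, IPEX 2.4.0)
RESULTS = {
    "meta-llama/Llama-2-7B-chat-hf": (169.9, 612.7, 446.2),
    "meta-llama/Llama-3.1-8B-Instruct": (226.4, 581.8, 375.6),
    "microsoft/Phi-3.5-mini-instruct": (212.2, 932.3, 861.7),
    "facebook/opt-1.3b": (652.7, 1990.6, 1708.4),
    "EleutherAI/GPT-J-6B": (171.8, 714.3, 492.3),
}


def speedups(results):
    """Return {model: (ratio vs zentorch 4.2.0, ratio vs IPEX 2.4.0)}."""
    return {
        model: (round(z50 / z42, 2), round(z50 / ipex, 2))
        for model, (z42, z50, ipex) in results.items()
    }


if __name__ == "__main__":
    for model, (vs_42, vs_ipex) in speedups(RESULTS).items():
        print(f"{model}: {vs_42}x vs zentorch 4.2.0, {vs_ipex}x vs IPEX 2.4.0")
```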
